Zachary DeVito [Tue, 27 Nov 2018 19:46:17 +0000 (11:46 -0800)]

Print default values and introduce ir view classes (#14176)

Summary:

[Stacked commit, only review the last commit]

This PR adds support for printing default values in python printing as well as the logic

for parsing default values back in using the parser. For simplicity, this PR simply

creates a subgraph of the constant expressions and then runs that graph to generate the defaults.

A more lightweight approach should be possible later, but would require more machinery.

To make reading code in the printer easier, this also add ir_views.h.

Similar to tree_views.h these classes can provide views of some commonly used IR nodes

that have complicated structure and common operations on that structure.

Currently it has only read-only views for prim::If and prim::Loop,

but we should eventually add helpers to manipulate If/Loop nodes as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14176

Differential Revision:

D13198455

Pulled By: zdevito

fbshipit-source-id:

dc99ab9692804ccaedb60a55040c0b89ac7a6a6d

Thomas Viehmann [Tue, 27 Nov 2018 19:30:41 +0000 (11:30 -0800)]

Add Type support to the fuser, fuse more (#14336)

Summary:

This adds scalar type support to the fuser, both internally (instead of auto / assuming float) and for the inputs/outputs.

We can now fuse things with input / output of arbitrary scalar type, in particular comparisons and where work well. So it fixes #13384 by returning the right type tensor (and adds a test where byte and double tensors are returned).

The type inference is done by re-calling PropagateTensorShapeOnNode in the compilation, I would venture that it isn't prohibitively expensive compared to the actual compilation. (Propagation was fixed for where to return the second argument's type and amended to handle FusedConcat.)

I'm not sure how to add a check for the code generated by the fuser, but I am not sure we absolutely need to (we'd see if it is invalid / produces wrong results).

Thanks in particular to apaszke, fmassa, mruberry for advice and encouragement! All the errors are my own.

I have discussed order of PRs briefly with mruberry, if this goes in before he submits the PR, he graciously agreed to rebasing his, but I'd happily rebase, too.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14336

Differential Revision:

D13202620

Pulled By: soumith

fbshipit-source-id:

855159e261fa15f21aca3053bfc05fb3f720a8ef

svcscm [Tue, 27 Nov 2018 19:20:46 +0000 (11:20 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

e63160e97550942931bacaa860d91d591d2e1712

David Riazati [Tue, 27 Nov 2018 18:49:14 +0000 (10:49 -0800)]

Add boolean dispatch for function overloading (#14081)

Summary:

This PR allows to overload functions based on the value of a parameter (so long as it is a constant). See `max_pool1d` for an example usage.

This is the first step in enabling the use of `max_pool` functions for the standard library that can return `Tensor` or `Tuple[Tensor, Tensor]` based on the `return_indices` flag. This will give the JIT identical results to the Python versions of the functions.

Depends on #14232 for `Optional[BroadcastingList[T]]`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14081

Differential Revision:

D13192228

Pulled By: driazati

fbshipit-source-id:

fce33c400c1fd06e59747d98507c5fdcd8d4c113

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:06 +0000 (10:41 -0800)]

Barrier synchronizes with prior work before completing (#14386)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14386

See #13573, #14142, and #14271 for discussion.

This change updates ProcessGroupGloo to ensure that all prior

operations have completed before executing the barrier.

Reviewed By: manojkris

Differential Revision:

D13205022

fbshipit-source-id:

673e7e6ca357dc843874d6dd8da590832e1de7fa

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:06 +0000 (10:41 -0800)]

Make ProcessGroup::Work::wait() throw (#14298)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14298

This is a breaking API change for users of the C++ c10d API. The work

object defined wait() to return a boolean. If the work completed

successfully it would return true, if it didn't it would return false.

It was then up to the user to call the exception() function to figure

out what went wrong. This has proven suboptimal as it allows users to

forget about failure handling and errors may be ignored.

The work class is semantically very similar to std::future, where a

call to get() may throw if the underlying std::promise has set an

exception. This commit changes the semantic of the work class to be

similar to this and turns wait() into a void function that throws if

the work completes with an exception.

The exception() function can still be used to retrieve the exception

if isSuccess() returns false, but now returns an std::exception_ptr

instead of a reference to a std::exception.

Reviewed By: manojkris

Differential Revision:

D13158475

fbshipit-source-id:

9cd8569b9e7cbddc867a5f34c6fd0b7be85581b8

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:04 +0000 (10:41 -0800)]

Add option structs and timeout field (#14297)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14297

Adds option structs for allgather and barrier such that we have one

for every collective. Add timeout member field to every one of these

such that we can support per operation timeouts.

Use default constructed options struct for every collective process

group function exposed to Python.

Reviewed By: manojkris

Differential Revision:

D13158474

fbshipit-source-id:

3d28977de2f2bd6fc2f42ba3108b63a429338906

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:04 +0000 (10:41 -0800)]

Refer to all work with ProcessGroup prefix (#14296)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14296

There was mixed usage of "ProcessGroup::Work" and just "Work".

Adding prefix for readability/consistency.

Reviewed By: manojkris

Differential Revision:

D13128977

fbshipit-source-id:

a54a8784fa91cd6023c723cb83e9f626fb896a30

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:04 +0000 (10:41 -0800)]

Remove algorithm caching in ProcessGroupGloo (#14295)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14295

This is no longer used after moving to Gloo new style algorithms.

Closes #11912.

Reviewed By: manojkris

Differential Revision:

D13111781

fbshipit-source-id:

53e347080e29d847cd9da36f2d93af047930690c

Pieter Noordhuis [Tue, 27 Nov 2018 18:41:04 +0000 (10:41 -0800)]

Use new style barrier support in c10d/gloo (#14294)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14294

This is the final collective to be ported to the new style where there

is no longer a need to keep a cached algorithm instance around. There

is a follow up change incoming to remove the algorithm caching

functionality in ProcessGroupGloo.

Reviewed By: manojkris

Differential Revision:

D13111509

fbshipit-source-id:

f3ea0d955a62029fc4e7cfc09055e4957e0943ac

Wei Yang [Tue, 27 Nov 2018 18:22:24 +0000 (10:22 -0800)]

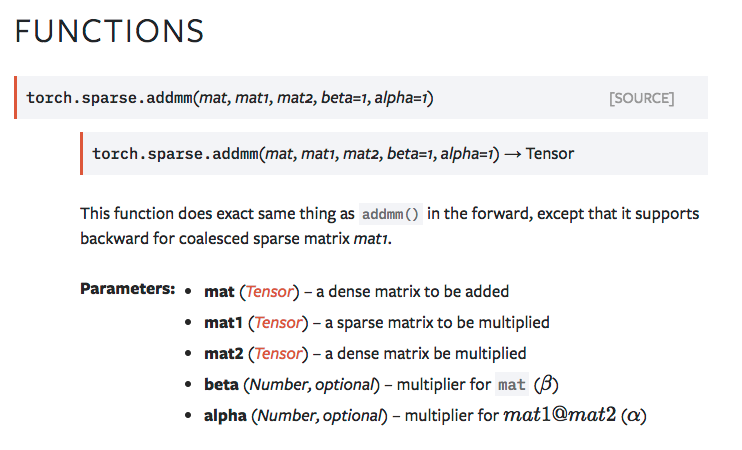

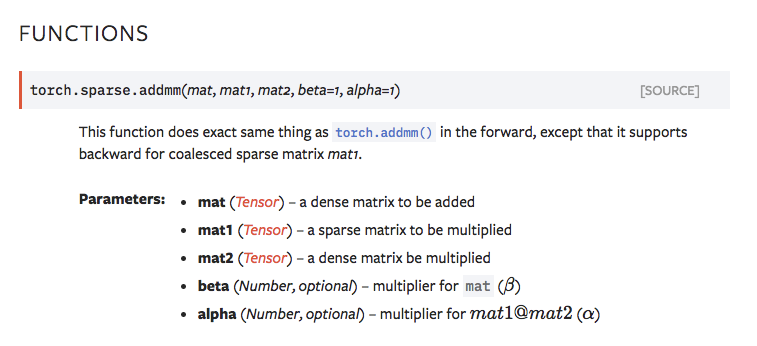

fix doc for sparse.addmm (#14403)

Summary:

- fixing the doc issue in sparse.addmm

================ before change ==================

================ post change ==================

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14403

Differential Revision:

D13216582

Pulled By: weiyangfb

fbshipit-source-id:

52e0a20c6b341c37cfb31f281be3afe2a52ca532

Jongsoo Park [Tue, 27 Nov 2018 18:05:28 +0000 (10:05 -0800)]

per-group and per-channel quantization (#14340)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14340

Pull Request resolved: https://github.com/pytorch/FBGEMM/pull/25

Per-group and per-channel quantization in fbgemm

This diff also cleans up explicit template instantiation using macro expansion

This diff also changes randFill interface which was easy to make mistakes of generating integer random numbers for floating point vectors.

Using this in DNNLOWP operators will be done in a separate diff.

Reviewed By: dskhudia

Differential Revision:

D13176386

fbshipit-source-id:

e46c53e31e21520bded71b8ed86e8b19e010e2dd

Peter Goldsborough [Tue, 27 Nov 2018 18:04:57 +0000 (10:04 -0800)]

Add variable_factories.h to cppdocs (#14381)

Summary:

This will document `torch::from_blob` and such.

soumith ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14381

Differential Revision:

D13216560

Pulled By: goldsborough

fbshipit-source-id:

112f60e45e4d38a8a9983fa71e9cc56bc1a73465

Jan Schlüter [Tue, 27 Nov 2018 17:36:11 +0000 (09:36 -0800)]

Use integer math to compute output size of pooling operations (#14405)

Summary:

As reported in #13386, the pooling operations can return wrong results for large inputs. The root of the problem is that while the output shape is initially being computed with integer operations, it is converted to float32 for division by the stride and applying either a `ceil` or a `floor` depending on the `ceil_mode`. Since even moderately large integers (the smallest being 16,777,217) cannot be expressed exactly in float32, this leads to wrong result shapes.

This PR relies purely on integer operations to perform the shape computation, including the ceil/floor distinction. Since I could not stand all that duplicated code, I pulled it out into a `pooling_shape.h` header, similar to the existing `linear_upsampling.h` header. I hope this is acceptable, let me know if you'd like to see it solved differently. I've also added tests to `test_nn.py` that fail without my changes and pass with my changes. They cover `{max,avg}_pool{1,2,3}d()` for CPU and GPU.

Fixes #13386.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14405

Differential Revision:

D13215260

Pulled By: soumith

fbshipit-source-id:

802588ce6cba8db6c346448c3b3c0dac14d12b2d

Edward Yang [Tue, 27 Nov 2018 16:23:34 +0000 (08:23 -0800)]

Delete legacy THCStream (long live THCStream). (#14246)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14246

This commit systematically eliminates THCStream entirely from THC, replacing it

with at::cuda::CUDAStream. In places where the previous pointer type showed up

in a public API signature, those functions are now only available to C++

clients. (It would not be too difficult to make a C-compatible version of

CUDAStream, as it's really just a simple struct, but we leave this for

future work.)

All functions in THC that referred to THCStream were expunged in favor of their

modern counterparts.

One annoyance was that I didn't feel like redoing how the torch.cuda.Stream

binding code worked, but I really wanted to get rid of the stored THCStream*

pointer. So I repurposed the bit-packing code I implemented for Stream hashing,

and used that to (reversibly) store streams in a uint64_t cdata field. A perhaps

more future proof solution would be to get rid of cdata entirely, and store the

device and stream ID directly.

Billing of changes:

- All CUDAStream_ pointer API functions are now hidden and anonymously

namespaced (instead of being in the impl namespace). All use sites

rewritten to use the modern C++ API. Since CUDAStreamInternals is no

longer part of the public API, the CUDAStreamInternals constructor and

internals() method have been removed, and replaced with anonymous

functions in the C++ file.

- device_index() returns DeviceIndex rather than int64_t now

- Stream and CUDAStream now have pack/unpack methods. (CUDAStream checks

that the unpacked bit-pattern is for a CUDA device.)

- THCStream.h header is removed entirely

- Most THCStream handling functions in THC API are removed

Reviewed By: gchanan

Differential Revision:

D13121531

fbshipit-source-id:

48873262cc0a37c3eec75a7ba1c93c800da40222

Edward Yang [Tue, 27 Nov 2018 16:23:34 +0000 (08:23 -0800)]

Add hash functions for Stream, CUDAStream; fix Device hash function (#14191)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14191

Previously, Device's hash function only worked for CPU and CUDA. Now

it works for everything.

Implementing the bit concatenation was a bit tricky, and I got it wrong the

first time. See Note [Hazard when concatenating signed integers]

Reviewed By: smessmer

Differential Revision:

D13119624

fbshipit-source-id:

36bfa139cfc739bb0624f52aaf466438c2428207

Owen Anderson [Tue, 27 Nov 2018 06:41:56 +0000 (22:41 -0800)]

Implement NaN-propagating max/min on Vec256.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/13399

Differential Revision:

D13199957

Pulled By: resistor

fbshipit-source-id:

1565e079b13c5d4f42f2033830a7c997b7d824bc

svcscm [Tue, 27 Nov 2018 03:35:44 +0000 (19:35 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

210f7eec65bea5e31817fb56dec27b0ab8af797a

Ilia Cherniavskii [Tue, 27 Nov 2018 03:07:07 +0000 (19:07 -0800)]

Remove unused executors, part 3 (#14199)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14199

Remove legacy code for dag, async_dag

Reviewed By: salexspb

Differential Revision:

D13019102

fbshipit-source-id:

ff07e45304d9af4be0375215f4b642c4b0edb12d

Ilia Cherniavskii [Tue, 27 Nov 2018 03:07:07 +0000 (19:07 -0800)]

Remove unused executors, part 2 (#14115)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14115

Remove legacy implementation of prof_dag

Reviewed By: salexspb

Differential Revision:

D13019096

fbshipit-source-id:

4f2bf676444d84eaa2cc1effcc3ebdc764e0a016

Ilia Cherniavskii [Tue, 27 Nov 2018 03:07:06 +0000 (19:07 -0800)]

Remove unused executors, part 1 (#14117)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14117

Removing unused legacy executors (htrace)

Reviewed By: salexspb

Differential Revision:

D13019078

fbshipit-source-id:

19d0ed1b47a22cc17c27fdd15d748ced54806132

Edward Yang [Tue, 27 Nov 2018 03:06:06 +0000 (19:06 -0800)]

Delete OPENMP_STUB translation. (#14286)

Summary:

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14286

Differential Revision:

D13205356

Pulled By: ezyang

fbshipit-source-id:

08e9821e4b32f8d7f3c41906e481f280ee6cf2e3

Wei Yang [Tue, 27 Nov 2018 01:43:21 +0000 (17:43 -0800)]

backward for sparse.addmm(D, S, D, alpha, beta) -> D (#13345)

Summary:

- introduce `sparse.addmm()` with backward for sparse matrix input for https://github.com/pytorch/pytorch/issues/12308

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13345

Differential Revision:

D13094070

Pulled By: weiyangfb

fbshipit-source-id:

136c08c3ca9bafb20577b60dd43d31c3e5cd5461

Marat Dukhan [Tue, 27 Nov 2018 01:41:13 +0000 (17:41 -0800)]

Switch Int8ChannelShuffle operator to QNNPACK (#14362)

Summary:

1.8-2.2X better performance on ARM devices

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14362

Reviewed By: jerryzh168

Differential Revision:

D13192312

Pulled By: Maratyszcza

fbshipit-source-id:

0d3dff067e300c7d741c42615b61246cbf09a829

Teng Li [Tue, 27 Nov 2018 01:05:17 +0000 (17:05 -0800)]

Fixed file init_method write/read race (#14388)

Summary:

This should fix the race among multiple processes: https://github.com/pytorch/pytorch/issues/13750

Essentially, the reader is trying to open the file, and will error out if it doesn't exist, we here factor in the timeout option of FileStore to apply a timeout for creating a file (should always be created anyway unless something is wrong), and more importantly, waiting for the file to be created.

Tested on both NFS and local drive, the race disappears when 8 concurrent processes do distributed training.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14388

Differential Revision:

D13207178

Pulled By: teng-li

fbshipit-source-id:

d3d5d62c4c8f01c0522bf1653c8986155c54ff80

Peter Goldsborough [Tue, 27 Nov 2018 01:04:51 +0000 (17:04 -0800)]

Fix dataloader iterator test (#14045)

Summary:

I noticed the test `DataLoaderTest.CanDereferenceIteratorMultipleTimes` doesn't test proper progression of the iterator. I also added a test for using `std::copy`.

Fixes https://github.com/pytorch/pytorch/issues/14276

ebetica ezyang apaszke

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14045

Differential Revision:

D13092187

Pulled By: goldsborough

fbshipit-source-id:

57698ec00fa7b914b159677a4ab38b6b25c2860b

Teng Li [Tue, 27 Nov 2018 00:44:11 +0000 (16:44 -0800)]

Fixed c10d test (#14389)

Summary:

Most likely a typo.

Tested on 8-GPU machine

```

tengli@learnfair062:~/pytorch/test$ python test_c10d.py ProcessGroupNCCLTest.test_barrier

.

----------------------------------------------------------------------

Ran 1 test in 29.341s

OK

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14389

Differential Revision:

D13207207

Pulled By: teng-li

fbshipit-source-id:

aaffe14237076fe19d94e2fa4d9c093397f07bb9

Brennan Vincent [Tue, 27 Nov 2018 00:34:47 +0000 (16:34 -0800)]

fix typo in `torch.sum` documentation (#14250)

Summary:

Notice that an extra colon was added to `:attr:`, so in https://pytorch.org/docs/stable/torch.html#torch.sum , `dim` shows up as ":attr::_dim_". This patch fixes the issue.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14250

Reviewed By: soumith

Differential Revision:

D13146363

Pulled By: umanwizard

fbshipit-source-id:

f7d03dcb0973aae248b56ab407ba8489f2b1fe36

Wanchao Liang [Tue, 27 Nov 2018 00:21:08 +0000 (16:21 -0800)]

More JIT type hierarchy refinement (#14127)

Summary:

JIT type system hierarchy refinement and refactors:

1. Make NumberType be the base type of IntType FloatType

2. Make single type container like OptionalType and FutureType share SingleElementType base type

3. Some refactors to make it more robust, e.g. adding python_str() for some types so that we have proper python_print serialization format

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14127

Differential Revision:

D13112657

Pulled By: wanchaol

fbshipit-source-id:

335c5b25977be2e0a462c7e4a6649c1b653ccb4f

Jesse Hellemn [Mon, 26 Nov 2018 23:55:40 +0000 (15:55 -0800)]

changing some rpath stuff (#14304)

Summary:

See if anything breaks

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14304

Differential Revision:

D13201418

Pulled By: pjh5

fbshipit-source-id:

ac2101b61a23bda37329d4d923c3d9d120e718bf

Kevin Chen [Mon, 26 Nov 2018 23:49:36 +0000 (15:49 -0800)]

Fix caffe2 => onnx exporter for ConvTranspose (#14143)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14143

ConvTranspose has a per-operator attribute rename, which meant that the

global attribute rename for kernels => kernel_shape was not applied.

Changing the behavior so that the global renames always apply, but per-op

renames can override those for specific attributes.

Note: The python frontend path isn't actually used for ConvTranspose, but I

thought it would be good to make it consistent.

Reviewed By: yinghai

Differential Revision:

D13113395

fbshipit-source-id:

cd3f124b4b5c753a506d297138b7d002b51bfb38

Will Feng [Mon, 26 Nov 2018 22:51:57 +0000 (14:51 -0800)]

Revert

D13166669: [pytorch][PR] Allow dataloader to accept a custom memory pinning function

Differential Revision:

D13166669

Original commit changeset:

ca965f9841d4

fbshipit-source-id:

0836b4f50f73ba01c97491a719660f02e36f20ad

andersj [Mon, 26 Nov 2018 22:05:05 +0000 (14:05 -0800)]

remove CAFFE2_API from IdWrapper (#14044)

Summary:

it doesn't really make sense on a template class. Also it breaks if

you try to build in debug on Windows, so this will save someone some

frustration in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14044

Differential Revision:

D13202960

Pulled By: anderspapitto

fbshipit-source-id:

617d78366993d5ecc2ba1f23bb90010f10df41f3

Jerry Zhang [Mon, 26 Nov 2018 21:03:40 +0000 (13:03 -0800)]

FeedTensor returns a Tensor (#14196)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14196

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13641

FeedTensor function used to take a pointer to Tensor and feed the content using Resize

and mutable_data, but since Tensor is a pointer now, we can just return a Tensor instead.

Reviewed By: dzhulgakov

Differential Revision:

D13091163

fbshipit-source-id:

9abf2fd320baca76e050530c500dd29f8e2d0211

Richard Zou [Mon, 26 Nov 2018 20:28:44 +0000 (12:28 -0800)]

Allow graph fuser to move chunks past multiple nodes. (#14055)

Summary:

Fixes #12290. Also speeds up JIT LSTM forward pass from 8.8ms to 7.8ms; previously, each JIT lstm cell used 2 fused kernels. Now, it only uses one fused kernel (which is how many kernels cudnn uses).

Explanation:

Let f, g, h be fusible ops.

```

x = f(v, w)

z = g(x, y)

a, b = chunk(z)

c = h(a, b)

```

becomes (before this PR):

```

x = f(v, w)

x', y' = broadcast_tensors([x, y])

ax, bx = chunk(x')

ay, by = chunk(y')

a = g(ax, ay)

b = g(bx, by)

c = h(a, b)

```

The graph fuser then puts g, g, and h into one FusionGroup and is unable

to move `x = f(v, w)` into the FusionGroup.

This PR lets the graph fuser move `x = f(v, w)` into the FusionGroup.

It does this by abstracting the broadcast_tensors + multiple chunk nodes

into one intermediate `prim::BroadcastingChunk[chunks, dim]` node.

A `BroadcastingChunk[chunks, dim](*inputs)` node is equivalent to:

- broadcasting all of *inputs

- chunk-ing each broadcasted input into `chunks` chunks along dim `dim`.

Abstracting the broadcasting chunk behavior away, it is now a lot easier

for the graph fuser to move (broadcast + chunk) past an operation. After

this PR, the above graph becomes:

```

x = f(v, w)

ax, bx, ay, by = BroadcastingChunk(x, y)

a = g(ax, ay)

b = g(bx, by)

c = h(a, b)

```

Now, to move `x = f(v, w)` after the BroadcastingChunk, one just needs

to add f's operands to the BroadcastingChunk:

```

ay, by, av, bv, aw, bw = BroadcastingChunk(y, v, w)

ax = f(av, aw)

by = f(bv, bw)

a = g(ax, ay)

b = g(bx, by)

c = h(a, b)

```

cc apaszke mruberry zdevito

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14055

Differential Revision:

D13159259

Pulled By: zou3519

fbshipit-source-id:

134e9e645c950384d9be6a06a883a10e17a73d7d

svcscm [Mon, 26 Nov 2018 20:10:45 +0000 (12:10 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

b4d74bf58b5536a0de654dfe73d41b5e1126eec6

Jesse Hellemn [Mon, 26 Nov 2018 20:08:42 +0000 (12:08 -0800)]

Removing Caffe2-specific conda infra

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/11961

Differential Revision:

D10045909

Pulled By: pjh5

fbshipit-source-id:

e9c12124897ee586aeb8b6654b31e4b81687199a

Michael Suo [Mon, 26 Nov 2018 20:02:09 +0000 (12:02 -0800)]

fix tensor advanced indexing with assignment (#14311)

Summary:

Fix a mishandling of `foo[a] = b` when `a` was a tensor. We were assigning to a copy of `foo`, not a view of it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14311

Differential Revision:

D13196109

Pulled By: suo

fbshipit-source-id:

c929401fda7c4a27622d3fe2b11278b08a7f17f1

Jongsoo Park [Mon, 26 Nov 2018 19:45:55 +0000 (11:45 -0800)]

remove unnecessary zero_point argument from constructors (#14323)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14323

Pull Request resolved: https://github.com/pytorch/FBGEMM/pull/24

As title says.

Reviewed By: dskhudia

Differential Revision:

D13167073

fbshipit-source-id:

6d6c526fd6e29a14e97f71a0881f28ada8703107

svcscm [Mon, 26 Nov 2018 19:24:40 +0000 (11:24 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

06e234f1a0217a268712832f21cb06b7109538a6

Peter Goldsborough [Mon, 26 Nov 2018 19:13:52 +0000 (11:13 -0800)]

Fix -Wreturn-std-move (#14113)

Summary:

On clang-7 (internal) a warning, `-Wreturn-std-move`, is being emitted and raised to an error via `-Werror` for the code this PR fixes. The reason is that `autograd::make_variable` returns an `autograd::Variable`, so returning it from a function that returns `at::Tensor` disallows the compiler from eliding the return value (RVO). So let's explicitly convert the `autograd::Variable` to an `at::Tensor` before returning it.

ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14113

Differential Revision:

D13105638

Pulled By: goldsborough

fbshipit-source-id:

6e1dc31c6512e105ab2a389d18807422ee29283c

Jongsoo Park [Mon, 26 Nov 2018 19:07:15 +0000 (11:07 -0800)]

minimize code compiled with avx2 and header includes from them (#14313)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14313

Pull Request resolved: https://github.com/pytorch/FBGEMM/pull/22

This diff is an attempt to minimize code compiled with avx2.

Reviewed By: dskhudia

Differential Revision:

D13166591

fbshipit-source-id:

2be241141f6d7478b86a422953791e237ff10268

Peter Goldsborough [Mon, 26 Nov 2018 18:12:50 +0000 (10:12 -0800)]

Add proper from_blob overloads (#13982)

Summary:

There was an overload for `torch::from_blob` missing that allowed passing strides.

ezyang soumith

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13982

Differential Revision:

D13108089

Pulled By: goldsborough

fbshipit-source-id:

b87594ec0bf55b35d106b4438bc18b2ce9fc8f71

Brennan Vincent [Mon, 26 Nov 2018 18:03:24 +0000 (10:03 -0800)]

allow concatenating "hybrid" (sparse/dense) tensors along their dense dimensions (#13761)

Summary:

Follow-up to #13577

The idea is to take each values tensor, concatenate it with zeros before and after itself (along the dimension corresponding to the one we're catting the tensors along), to get a tensor corresponding to the values for that tensor in the result. Then we concatenate all of those together to get the final values tensor. (Hopefully, this will be more clear from the example in the comments).

The indices are more straightforward: since we aren't concatenating along a sparse dimension, they don't change at all, so all we need to do are concatenate the indices from the different tensors together.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13761

Differential Revision:

D13160343

Pulled By: umanwizard

fbshipit-source-id:

13d7adecd369e0eebdf5bce3d90a51029b66bd1d

Peter Goldsborough [Mon, 26 Nov 2018 17:37:04 +0000 (09:37 -0800)]

Allow torch.utils.cpp_extension.load to load shared libraries that aren't Python modules (#13941)

Summary:

For custom TorchScript operators, `torch.ops.load_library` must be used and passed the path to the shared library containing the custom ops. Our C++ extensions stuff generally is meant to build a Python module and import it. This PR changes `torch.utils.cpp_extension.load` to have an option to just return the shared library path instead of importing it as a Python module, so you can then pass it to `torch.ops.load_library`. This means folks can re-use `torch.utils.cpp_extension.load` and `torch.utils.cpp_extension.load_inline` to even write their custom ops inline. I think t-vi and fmassa will appreciate this.

soumith

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13941

Differential Revision:

D13110592

Pulled By: goldsborough

fbshipit-source-id:

37756307dbf80a81d2ed550e67c8743dca01dc20

Adam Paszke [Mon, 26 Nov 2018 17:18:43 +0000 (09:18 -0800)]

Batch more matrix multiplies (#13456)

Summary:

This handles the input pre-multiplication in RNNs, yielding pretty significant speedups in backward times. This pass depends on loop unrolling, so we'll batch only as many elements as the unrolling factor allows.

cc mruberry ngimel zou3519 zdevito

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13456

Differential Revision:

D12920339

Pulled By: zou3519

fbshipit-source-id:

5bcd6d259c054a6dea02ae09a9fdf9f030856443

Gregory Chanan [Mon, 26 Nov 2018 15:56:43 +0000 (07:56 -0800)]

Enable native wrappers for the remainder of nn functions.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14290

Differential Revision:

D13162562

Pulled By: gchanan

fbshipit-source-id:

615e1727988bfeeade48f9b38162333a2e298f7b

Huan Gui [Sat, 24 Nov 2018 10:41:25 +0000 (02:41 -0800)]

Add Recency Weighted into SparseLookup (#14291)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14291

Add RecencyWeighted into SparseLookup.

Reviewed By: Wakeupbuddy

Differential Revision:

D13147738

fbshipit-source-id:

de5dc3aaee8ce7d41c6d30d2ff47e9786a7fa4da

Shuichi KITAGUCHI [Sat, 24 Nov 2018 05:32:10 +0000 (21:32 -0800)]

quote NUMPY_INCLUDE_DIR (#14341)

Summary:

when NUMPY_INCLUDE_DIR contains space character (e.g. "C:\Program Files (x86)\Microsoft Visual Studio\..."), cmake cannot receive correct path name.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14341

Differential Revision:

D13188408

Pulled By: soumith

fbshipit-source-id:

b62127d90e53da94fe6af5d3bdd2ea4fd6546210

Michael Suo [Fri, 23 Nov 2018 19:22:22 +0000 (11:22 -0800)]

shape analysis fix (#14325)

Summary:

This PR is deceptively large because of an indenting change. The actual change is small; I will highlight it inline

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14325

Differential Revision:

D13183296

Pulled By: suo

fbshipit-source-id:

fcbf6d5317954694ec83e6b8cc1c989f2d8ac298

peter [Fri, 23 Nov 2018 16:15:28 +0000 (08:15 -0800)]

Some minor fixes for Windows build script (#14218)

Summary:

1. Fix execution failure when some of the paths are not defined

2. Users can now optionally override install dir by setting `CMAKE_INSTALL_PREFIX`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14218

Differential Revision:

D13180350

Pulled By: soumith

fbshipit-source-id:

8c9680d1285dbf08b49380af1ebfa43ede99babc

Michael Carilli [Fri, 23 Nov 2018 16:08:35 +0000 (08:08 -0800)]

Allow dataloader to accept a custom memory pinning function (#14171)

Summary:

Currently, the `pin_memory_batch` function in the dataloader will return a batch comprised of any unrecognized type without pinning the data, because it doesn't know how.

This behavior was preventing us from overlapping data prefetching in Mask-RCNN, whose custom `collate_fn` returns a custom batch type.

The present PR adds the ability for the user to pass a `pin_fn` alongside any custom `collate_fn` to handle such custom types.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14171

Differential Revision:

D13166669

Pulled By: soumith

fbshipit-source-id:

ca965f9841d4a259b3ca4413c8bd0d8743d433ab

Michael Carilli [Fri, 23 Nov 2018 16:07:51 +0000 (08:07 -0800)]

Option to preserve bitwise accuracy of gradient checkpointed vs non-checkpointed dropout (#14253)

Summary:

This issue was noticed, and fix proposed, by raulpuric.

Checkpointing is implemented by rerunning a forward-pass segment for each checkpointed segment during backward. This can result in the RNG state advancing more than it would without checkpointing, which can cause checkpoints that include dropout invocations to lose end-to-end bitwise accuracy as compared to non-checkpointed passes.

The present PR contains optional logic to juggle the RNG states such that checkpointed passes containing dropout achieve bitwise accuracy with non-checkpointed equivalents.** The user requests this behavior by supplying `preserve_rng_state=True` to `torch.utils.checkpoint` or `torch.utils.checkpoint_sequential`.

Currently, `preserve_rng_state=True` may incur a moderate performance hit because restoring MTGP states can be expensive. However, restoring Philox states is dirt cheap, so syed-ahmed's [RNG refactor](https://github.com/pytorch/pytorch/pull/13070#discussion_r235179882), once merged, will make this option more or less free.

I'm a little wary of the [def checkpoint(function, *args, preserve_rng_state=False):](https://github.com/pytorch/pytorch/pull/14253/files#diff-58da227fc9b1d56752b7dfad90428fe0R75) argument-passing method (specifically, putting a kwarg after a variable argument list). Python 3 seems happy with it.

Edit: It appears Python 2.7 is NOT happy with a [kwarg after *args](https://travis-ci.org/pytorch/pytorch/builds/

457706518?utm_source=github_status&utm_medium=notification). `preserve_rng_state` also needs to be communicated in a way that doesn't break any existing usage. I'm open to suggestions (a global flag perhaps)?

**Batchnorm may still be an issue, but that's a battle for another day.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14253

Differential Revision:

D13166665

Pulled By: soumith

fbshipit-source-id:

240cddab57ceaccba038b0276151342344eeecd7

svcscm [Fri, 23 Nov 2018 05:58:28 +0000 (21:58 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

e92b0c24a56b588dcf30542692cb4bdc2d474825

Sebastian Messmer [Thu, 22 Nov 2018 19:55:07 +0000 (11:55 -0800)]

Remove individual "using c10:xxx" statements (#13168)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13168

We now have a "using namespace c10" in the at and caffe2 namespaces, we don't need the individual ones anymore

Reviewed By: ezyang

Differential Revision:

D11669870

fbshipit-source-id:

fc2bb1008e533906914188da4b6eb30e7db6acc1

Yinghai Lu [Thu, 22 Nov 2018 08:28:51 +0000 (00:28 -0800)]

Make sure we bind input/output of Onnxifi op positionally (#14214)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14214

This is to pick up the residual task of T36325466 to make sure that input/output binding of c2 Onnxifi op is positional.

Reviewed By: dzhulgakov

Differential Revision:

D13134470

fbshipit-source-id:

d1b916dade65c79133b86507cd54ea5166fa6810

Wanchao Liang [Thu, 22 Nov 2018 07:42:24 +0000 (23:42 -0800)]

Convert gumbel_softmax, lp pooling weak functions and modules (#14232)

Summary:

1. Support `Optional[BroadcastingList1[int]]` like type annotation to accept a int or a list[int]

2. Convert gumbel_softmax, lp pooling weak functions and modules

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14232

Differential Revision:

D13164506

Pulled By: wanchaol

fbshipit-source-id:

6c2a2b9a0613bfe907dbb5934122656ce2b05700

Sebastian Messmer [Thu, 22 Nov 2018 07:04:43 +0000 (23:04 -0800)]

Use ADL to find toString (#14021)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14021

I'm planning to move at::Scalar to c10, and there's a at::toString(Scalar) defined.

Unfortunately, we call it by specifying at::toString() instead of relying on ADL.

This diff changes that to prepare the actual move.

Reviewed By: ezyang

Differential Revision:

D13015239

fbshipit-source-id:

f2a09f43a96bc5ef20ec2c4c88f7790fd5a04870

Sebastian Messmer [Thu, 22 Nov 2018 07:04:42 +0000 (23:04 -0800)]

Fix include paths for intrusive_ptr (#13692)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13692

This now lives in c10/util, not ATen/core anymore.

Reviewed By: ezyang

Differential Revision:

D12937091

fbshipit-source-id:

ea2d420a15e7941a38d0b4c75e20ca18437c73f8

Sebastian Messmer [Thu, 22 Nov 2018 07:04:42 +0000 (23:04 -0800)]

Move intrusive_ptr to c10/util

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/13691

Reviewed By: ezyang

Differential Revision:

D12937090

fbshipit-source-id:

fe9d21d5f7ea4e78e7e38ac60db13814a9971ed9

Joel Marcey [Thu, 22 Nov 2018 06:28:20 +0000 (22:28 -0800)]

ignore generated caffe2 docs and virtualenvs

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14309

Reviewed By: soumith

Differential Revision:

D13166626

Pulled By: JoelMarcey

fbshipit-source-id:

4f11228d8b5da85cec222bf11282722a7319581b

svcscm [Thu, 22 Nov 2018 05:59:40 +0000 (21:59 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

20976d595e68a08d746d8806fd0205d810656366

Jongsoo Park [Thu, 22 Nov 2018 05:36:16 +0000 (21:36 -0800)]

removing quantization utility functions moved to fbgemm (#14301)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14301

This diff removes quantization utility functions copied to fbgemm

Reviewed By: Maratyszcza

Differential Revision:

D13159299

fbshipit-source-id:

a7f3cd2af0aa241a8578d532a70a157da70d9289

Achal Shah [Thu, 22 Nov 2018 05:00:22 +0000 (21:00 -0800)]

Cuda version comparison with CUDA_VERSION_STRING (#14302)

Summary:

Cuda headers include cuda version in form of major.minor. But when we do find_package(cuda). CUDA_VERSION variable includes patch number as well which fails following condition.

`

if(NOT ${cuda_version_from_header} STREQUAL ${CUDA_VERSION})

`

**For example:**

I have cuda 10.0 installed. My nvcc output looks like this

`Cuda compilation tools, release 10.0, **V10.0.130**

`

If I compile my application with caffe2. It gives me following error:

```

CMake Error at /usr/share/cmake/Caffe2/public/cuda.cmake:59 (message):

FindCUDA says CUDA version is (usually determined by nvcc), but the CUDA

headers say the version is 10.0. This often occurs when you set both

CUDA_HOME and CUDA_NVCC_EXECUTABLE to non-standard locations, without also

setting PATH to point to the correct nvcc. Perhaps, try re-running this

command again with PATH=/usr/local/cuda/bin:$PATH. See above log messages

for more diagnostics, and see

https://github.com/pytorch/pytorch/issues/8092 for more details.

```

**In this case, it got failed because**

cuda_version_from_header = 10.0

CUDA_VERSION = 10.0.130 (Came from NVCC)

`if(NOT ${cuda_version_from_header} STREQUAL ${CUDA_VERSION})

`

**Fix:**

We should compare header version with **major.minor format** which is given by CUDA_VERSION_STRING

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14302

Differential Revision:

D13166485

Pulled By: soumith

fbshipit-source-id:

1b74e756a76c4cc5aa09978f5850f763ed5469b6

svcscm [Thu, 22 Nov 2018 04:51:26 +0000 (20:51 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

ee60b4dddf688608ef80043b1dc336d120a045d0

svcscm [Thu, 22 Nov 2018 04:29:22 +0000 (20:29 -0800)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

366c29d09bec53459e2a4890c7fe8d10f45ff5c3

Teng Li [Thu, 22 Nov 2018 02:21:55 +0000 (18:21 -0800)]

Robust NCCL barrier improvement to cover all devices combinations (#14271)

Summary:

This covers the very edgy case when we run the same NCCL process group with multiple GPU combinations instead of the last GPU combination. We always keep track of what GPUs have been used previously in the NCCL process group and barrier() itself will synchronize on each GPU's NCCL stream.

Test covered as well. Tested on 8-GPU machine

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14271

Differential Revision:

D13164993

Pulled By: teng-li

fbshipit-source-id:

81e04352740ea50b5e943369e74cfcba40bb61c1

Michael Suo [Thu, 22 Nov 2018 01:46:46 +0000 (17:46 -0800)]

alias analysis (#14018)

Summary:

First draft of an alias analysis pass. It's a big PR unfortunately; a rough table of contents/suggested order of review:

1. `AliasAnalysis` pass, which traverses the graph and builds an `AliasDb`. The basic strategy is to assign alias information to every value of mutable type (list/tuple/tensor), and use the alias annotations of each node's schema to assign alias info to the outputs based on the alias info the inputs. Nodes that aren't explicitly schematized have hand-written analysis rules.

2. Integration of aliasing information into `moveBefore/AfterTopologicallyValid()`. Basically, we pass in an alias DB when we ask for moveBefore/After. Similar to how we can boil down dependency analysis to "what nodes use this node", we can boil down mutability analysis to "what nodes write to an alias set input/output'd by this node".

3. Integration of alias analysis to optimization passes that need it. Right now, it is `GraphFuser`, `CreateAutodiffSubgraphs`, constant prop, and CSE. Not sure if any others need it.

- Testing; still figuring out the best way to do this.

- Eventually we want to integrate the alias db into the graph, but we shouldn't do that until we can guarantee that the information can stay up to date with mutations.

- Do the same thing `python_printer` did for operators and force people to register alias analyzers if they can't schematize their op.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14018

Differential Revision:

D13144906

Pulled By: suo

fbshipit-source-id:

1bc964f9121a504c237cef6dfeea6b233694de6a

Ilia Cherniavskii [Thu, 22 Nov 2018 01:19:37 +0000 (17:19 -0800)]

Remove extra include

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14206

Reviewed By: dzhulgakov

Differential Revision:

D13131318

fbshipit-source-id:

559b55b8d98cdf6b7d1d3e31237c5473edc5e462

Teng Li [Thu, 22 Nov 2018 00:54:36 +0000 (16:54 -0800)]

Removed redundant allreduce options in DDP (#14208)

Summary:

This somehow is not cleaned up after the C++ migration. Unused and can be removed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14208

Differential Revision:

D13132492

Pulled By: teng-li

fbshipit-source-id:

0f05b6368174664ebb2560c037347c8eb45f7c38

David Riazati [Thu, 22 Nov 2018 00:30:43 +0000 (16:30 -0800)]

Add list inequality operator (#14129)

Summary:

This PR adds `aten::neq` for list inequality comparisons and converts

`nll_loss` to weak script

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14129

Differential Revision:

D13123894

Pulled By: driazati

fbshipit-source-id:

8c1edf7c163217ec00eb653f95d196db3998613f

Yinghai Lu [Wed, 21 Nov 2018 23:43:10 +0000 (15:43 -0800)]

Add onnxifi support to SparseLengthsWeightedSum (#14210)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14210

We left `SparseLengthsWeightedSum` as benchmark is not testing it due to fp16 filler issue. It was flushed out by unit tests. Hence we add the support here.

Reviewed By: bddppq

Differential Revision:

D13132320

fbshipit-source-id:

b21c30c185c9e1fbf3980641bc3cdc39e85af2e1

Gu, Jinghui [Wed, 21 Nov 2018 23:42:29 +0000 (15:42 -0800)]

Add "axis" and "axis_w" arguments in FC to support customized axix to reduce dim. (#12971)

Summary:

Add "axis" and "axis_w" arguments in FC to support customized axix to reduce dim.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/12971

Reviewed By: bddppq

Differential Revision:

D12850675

Pulled By: yinghai

fbshipit-source-id:

f1cde163201bd7add53b8475329db1f038a73019

Viswanath Sivakumar [Wed, 21 Nov 2018 21:42:04 +0000 (13:42 -0800)]

IDEEP fallback for ResizeNearest op (#14212)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14212

TSIA

Reviewed By: yinghai

Differential Revision:

D13134134

fbshipit-source-id:

e3c5c9c8756d6e25b213f8dde9d809a44373d7a3

zrphercule [Wed, 21 Nov 2018 21:12:18 +0000 (13:12 -0800)]

Fix ONNX_ATEN mode (#14239)

Summary:

Fix ONNX_ATEN mode by adding it to the validateBlock method.

Before this pr, validateBlock will throw an exception when using this mode.

I will add related test cases for ONNX_ATEN mode in a different pr once this is merged, since we dont have any currently.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14239

Differential Revision:

D13145443

Pulled By: zrphercule

fbshipit-source-id:

60e7942aa126acfe67bdb428ef231ac3066234b1

Pieter Noordhuis [Wed, 21 Nov 2018 19:25:42 +0000 (11:25 -0800)]

Bump gloo (#14281)

Summary:

Includes more robust error handling and timeout support.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14281

Differential Revision:

D13158232

Pulled By: pietern

fbshipit-source-id:

e80432799a020576d5abdcd9a21d66b629479caf

Jongsoo Park [Wed, 21 Nov 2018 17:37:58 +0000 (09:37 -0800)]

fix comment on dnnlowp op arguments (#14265)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14265

Fix comment

Reviewed By: hx89

Differential Revision:

D13152106

fbshipit-source-id:

fbe98906963cbd5cb20a583a737a792fbc38292e

Gregory Chanan [Wed, 21 Nov 2018 17:04:59 +0000 (09:04 -0800)]

native NN wrappers, including with buffers.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14256

Differential Revision:

D13148783

Pulled By: gchanan

fbshipit-source-id:

4b6179033cf1df26061b6731eaaa4e008692e592

Pieter Noordhuis [Wed, 21 Nov 2018 16:43:14 +0000 (08:43 -0800)]

Remove header generated at configuration time (#14244)

Summary:

The build was picking up the empty stub header instead of the generated

one. Because of the large number of include paths we end up passing to

the compiler it is brittle to have both an empty stub file and a

generated file and expect the compiler to pick up the right one.

With the recent change to compile everything from a single CMake run we

can now use native CMake facilities to propagate macros that indicate

backend support. The stanzas target_compile_definitions with the

INTERFACE flag ensure that these macros are set only for downstream

consumers of the c10d target.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14244

Reviewed By: teng-li

Differential Revision:

D13144293

Pulled By: pietern

fbshipit-source-id:

f49324220db689c68c126b159f4f00a8b9bc1252

Zachary DeVito [Wed, 21 Nov 2018 14:36:26 +0000 (06:36 -0800)]

Address jittering issues in python_print (#14064)

Summary:

export - print a method with python_print

import - import a method with import_method

We want to ensure:

export(g) == export(import(export(g)))

That is after after exporting/importing once, the graph will stay exactly

the same. This is less strict that g == import(export(g)) which would

require us to maintain a lot more information about the structure of the

IR and about the names of debug symbols.

This PR addresses this with the following fixes:

* print out double-precision numbers with high enough precision such

that they always parse in the same way

* when creating loop-carried dependencies, sort them

by variable name, ensuring a consistent order

* parse nan correctly

* DCE: remove unused outputs of if statements, and loop-carried dependencies

in loops that are dead both after the loop and inside the body of the

loop.

* Do not set uniqueName for variables whose names are _[0-9]+, these

are probably rare in user code, and we need a way to communicate

that we do not care about a variable name when re-parsing the graph.

Otherwise temporary variable names will jitter around.

* Expand the definition of a constant in printing code to None,

and family.

* Allow re-treeing to work as long as the only thing in its way is a

constant node. These do not have side effects but are sometimes

inserted in a different order when tracing compared to how we print them.

* Print all constant nodes out first in the order in which they are used_val

(or, if they are inlined, ensure they get assigned CONSTANT.cX number

in a consistent order). Cleanup tuples (this is done in the compiler,

but not in the tracer, leading to some tuple indexing jitter if not

done).

* use strtod_l, not std::stod which can throw exceptions

Other:

* Add REL_WITH_DEB_INFO to setup.py. It already existed for the

cmake files. Threading it into setup.py allows us to turn on

debug symbols with optimization everywhere.

* enable round trip testing for all generated graphs. This only adds

~6 seconds to total build time but tests printing for every graph.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14064

Differential Revision:

D13094637

Pulled By: zdevito

fbshipit-source-id:

0a1c6912194d965f15d6b0c6cf838ccc551f161d

svcscm [Wed, 21 Nov 2018 10:16:29 +0000 (02:16 -0800)]

Updating submodules

Reviewed By: cdelahousse

fbshipit-source-id:

27838fb2dad82c78906faf3cc2d124557c30e88f

svcscm [Wed, 21 Nov 2018 08:25:17 +0000 (00:25 -0800)]

Updating submodules

Reviewed By: cdelahousse

fbshipit-source-id:

3c17e12a579245a84e9a56b1d8a1641232150675

Lu Fang [Wed, 21 Nov 2018 07:33:30 +0000 (23:33 -0800)]

Add tensor table in ModelDef and use it for jit script serialization and deserialization (#13861)

Summary:

As we discussed, the tensors in the torch script will be associated with the tensor data in the serialized file. So let's add a table of tensor (actually it's a repeated TensorProto filed) in the ModelDef. TensorProto.name will be the id.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/13861

Reviewed By: dzhulgakov

Differential Revision:

D13036940

Pulled By: zrphercule

fbshipit-source-id:

ecb91b062ac4bc26af2a8d6d12c91d5614efd559

Tongzhou Wang [Wed, 21 Nov 2018 07:27:16 +0000 (23:27 -0800)]

c10d Automatically retry on EINTR (#14180)

Summary:

Probably fixes https://github.com/pytorch/pytorch/issues/14170

Actually I probably shouldn't retry all `SYSCHECK` calls. I'll leave to the reviewers to decide.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14180

Reviewed By: pietern

Differential Revision:

D13144741

Pulled By: SsnL

fbshipit-source-id:

d73288f76b18cae14b1b43dad4e5e8d010a96d95

Teng Li [Wed, 21 Nov 2018 05:10:18 +0000 (21:10 -0800)]

Make NCCL backend support barrier op (#14142)

Summary:

This is a feature request from: https://github.com/pytorch/pytorch/issues/13573

As the title says, this PR makes NCCL backend support barrier op.

There are a couple scenarios that need to be addressed:

(1) When there is already a NCCL op happened, we need to record what GPU device(s) the previous op happened and queue the allreduce barrier op on the same GPU device

(2) When there is no NCCL op yet, we will try to use a single GPU and separate each process from a single GPU as the best effort.

As for the async work, during wait, we would like not just wait on the NCCL kernel to be completed, but also block the thread until the current stream and nccl stream return.

`test_distributed` should cover the test. I also manually tested both scenarios.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14142

Differential Revision:

D13113391

Pulled By: teng-li

fbshipit-source-id:

96c33d4d129e2977e6892d85d0fc449424c35499

Yinghai Lu [Wed, 21 Nov 2018 02:00:14 +0000 (18:00 -0800)]

Fix memory leakage in onnxifi transformer (#14245)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14245

tsia

Reviewed By: bddppq, rdzhabarov

Differential Revision:

D13144783

fbshipit-source-id:

5e07bb7ab883ba1af68547a26272cd320967b9e3

David Riazati [Wed, 21 Nov 2018 00:42:00 +0000 (16:42 -0800)]

Allow undefined tensors as constants (#14120)

Summary:

This PR inserts `prim::None` constants for undefined tensors. This comes in the standard library if an `Optional[Tensor]` is statically determined to be `None`:

```python

torch.jit.script

def fn(x=None):

# type: (Optional[Tensor]) -> Tensor

return torch.jit._unwrap_optional(x)

torch.jit.script

def fn2():

# type: () -> Tensor

return fn()

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14120

Differential Revision:

D13124625

Pulled By: driazati

fbshipit-source-id:

9eaa82e478c49c503f68ed89d8c770e8273ea569

Wanchao Liang [Tue, 20 Nov 2018 22:09:27 +0000 (14:09 -0800)]

Export BatchNorm functional and module, add necessary JIT support (#14016)

Summary:

This PR did three things:

1. It export the BatchNorm functional and module, and rewrite some of the components to stay align with the current supported JIT features

2. In the process of export, add necessary compiler support for in_place op aug assign

4. change the test_jit behavior in add_module_test to utilize a single rng state during module initialization

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14016

Differential Revision:

D13112064

Pulled By: wanchaol

fbshipit-source-id:

31e3aee5fbb509673c781e7dbb6d8884cfa55d91

Thomas Viehmann [Tue, 20 Nov 2018 20:43:23 +0000 (12:43 -0800)]

Have PYTORCH_FUSION_DEBUG print C kernel source (#14213)

Summary:

- Move up handling the environment variable from CPU only to all

- Introduce two levels to be enabled with PYTORCH_FUSION_DEBUG=n:

1: print C source

2: print CPU assembly, too (previous effect of PYTORCH_FUSION_DEBUG)

apaszke

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14213

Differential Revision:

D13135393

Pulled By: soumith

fbshipit-source-id:

befa4ebea3b3c97e471393a9f6402b93a6b24031

Tugrul Ates [Tue, 20 Nov 2018 20:23:14 +0000 (12:23 -0800)]

Delete backwards compatibility StorageImpl.h and TensorImpl.h (#14230)

Summary:

Since they directly include the real ones in core.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14230

Differential Revision:

D13140323

Pulled By: tugrulates

fbshipit-source-id:

d7e3b94e891b2d7fa273d01c0b7edfebdbd7e368

Jongsoo Park [Tue, 20 Nov 2018 08:53:29 +0000 (00:53 -0800)]

remove unused parameters from caffe2_dnnlowp_utils.cc (#14164)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14164

See title

Reviewed By: csummersea

Differential Revision:

D13115470

fbshipit-source-id:

d754f558cd06e5f4c1cd00315e912cdb7b50731a

Jongsoo Park [Tue, 20 Nov 2018 08:53:29 +0000 (00:53 -0800)]

use pragma once (#14163)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14163

Some of the names we were using to guard the header file was too short (e.g. DYNAMIC_HISTOGRAM_H).

Reviewed By: csummersea

Differential Revision:

D13115451

fbshipit-source-id:

cef8c84c62922616ceea17effff7bdf8d67302a2

Jongsoo Park [Tue, 20 Nov 2018 08:53:29 +0000 (00:53 -0800)]

format python files (#14161)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14161

Formatting using Nuclide

Reviewed By: hx89

Differential Revision:

D13115348

fbshipit-source-id:

7432ce6072a1822d7287b4ebcfcb6309282e15ac

Jongsoo Park [Tue, 20 Nov 2018 08:53:29 +0000 (00:53 -0800)]

clang-format (#14160)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14160

clang-format of C++ files

Reviewed By: hx89

Differential Revision:

D13115201

fbshipit-source-id:

d2ad65f66209e00578ef90f87f41272de2d24aa9

Hui Wu [Tue, 20 Nov 2018 06:54:19 +0000 (22:54 -0800)]

Add sigmoid op based on MKL-DNN

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/13097

Differential Revision:

D13105366

Pulled By: yinghai

fbshipit-source-id:

d156e8fd519baeecf61c25dcd8fa2c2fa7351ef4

Daya S Khudia [Tue, 20 Nov 2018 06:45:00 +0000 (22:45 -0800)]

OSS build fix (#14192)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14192

We can only use C10_* in OSS. The build is only broken if built with USE_FBGEMM=ON

Reviewed By: jianyuh

Differential Revision:

D13121781

fbshipit-source-id:

f0ee9a75997766e63e1da8a53de7ddb98296a171

Lu Fang [Tue, 20 Nov 2018 06:12:16 +0000 (22:12 -0800)]

Make EncodeMethod in jit script serialization return a string (#14167)

Summary:

Nit

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14167

Reviewed By: ezyang

Differential Revision:

D13116584

Pulled By: dzhulgakov

fbshipit-source-id:

c0e7e71a81004031564bd2fc59f393041e1283d5

Jongsoo Park [Tue, 20 Nov 2018 05:44:29 +0000 (21:44 -0800)]

Create README.md of caffe2/quantization/server

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14217

Reviewed By: csummersea

Differential Revision:

D13135086

Pulled By: jspark1105

fbshipit-source-id:

bddf4f1c2dc5ec8ea6ebe9e265956f367e082d52

Will Feng [Tue, 20 Nov 2018 05:28:29 +0000 (21:28 -0800)]

CircleCI: fix NCCL install (#14172)

Summary:

The `$BUILD_ENVIRONMENT` checks work in `test.sh` but not `build.sh`, this PR fixes the issue.

This replaces https://github.com/pytorch/pytorch/pull/14124.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14172

Differential Revision:

D13135087

Pulled By: yf225

fbshipit-source-id:

42fff3926734778713d483d74ba0a89e5502dd9e

zrphercule [Tue, 20 Nov 2018 02:43:58 +0000 (18:43 -0800)]

Fix a bug in test case of onnx::If

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/14209

Differential Revision:

D13132607

Pulled By: zrphercule

fbshipit-source-id:

b7f7ccc6a6cbdeb57a7f88a1971d15dd81e6fc81