Ailing Zhang [Tue, 19 Mar 2019 17:20:06 +0000 (10:20 -0700)]

specialized CUDA impl for dropout in AD (#17756)

Summary:

In ATen we have a `_fused_dropout` implementation for the CUDA case. As ngimel pointed out, discarding it in JIT AD hurts performance.

It doesn't seem ideal to include a backend-specific implementation in AD, but this helps prevent a performance regression for now.
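For context, here is a minimal pure-Python sketch of the dropout math a fused kernel computes in one pass. The forward draws a keep-mask and scales kept values by 1/(1-p), and the backward reuses the same mask. The function names are hypothetical, and the assumption that the fused op hands its mask back to AD for reuse is ours, not something stated above.

```python
import random
from typing import List, Tuple


def dropout_forward(x: List[float], p: float, rng: random.Random) -> Tuple[List[float], List[int]]:
    """Hypothetical reference dropout: returns (output, keep_mask)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    scale = 1.0 / (1.0 - p)
    # Keep each element with probability 1 - p.
    mask = [1 if rng.random() >= p else 0 for _ in x]
    out = [v * m * scale for v, m in zip(x, mask)]
    return out, mask


def dropout_backward(grad_out: List[float], mask: List[int], p: float) -> List[float]:
    """Gradient w.r.t. the input: the same mask and scale applied to the incoming gradient."""
    scale = 1.0 / (1.0 - p)
    return [g * m * scale for g, m in zip(grad_out, mask)]
```

Reusing the saved mask in the backward is what keeps the gradient consistent with the forward; a fused kernel can produce the output and the mask together.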

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17756

Differential Revision:

D14368999

Pulled By: ailzhang

fbshipit-source-id:

9a371c5020f630e8f6e496849ec9772b6f196169

Neeraj Pradhan [Tue, 19 Mar 2019 17:18:12 +0000 (10:18 -0700)]

Fix underflow issue with dirichlet sample (#17488)

Summary:

Addresses #15738, using fritzo's suggestion. This adds a `torch._sample_dirichlet` method in `Distributions.cpp` and `Distributions.cu`.

- For CPU, this leads to no perf hit, since all we do is promote `alpha` to double when getting the gamma samples (the gamma sampler uses `accscalar_t` anyway, which is double for CPU) and cast the result back to float32 on return.

- I have added an analogous method for CUDA as well, but the default sampler for CUDA uses scalar_t for efficiency, so I have kept it as that. With this, I do not see the bias towards 1 as reported in #15738 with `float32`, but there is a spurious mode at 0.5, as would be expected. Users would need to explicitly use `float64` for GPU to not see the spurious mode at 0.5. (EDIT: see note below, it appears that the bias issue is still there for certain builds).
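The CPU change can be pictured with a small pure-Python sketch. Dirichlet samples come from normalizing independent Gamma(alpha_i, 1) draws, the draws are made in double precision (Python floats), and only the final result is rounded to float32. `to_float32` and `sample_dirichlet` are illustrative names, not the functions added in `Distributions.cpp`.

```python
import random
import struct
from typing import List, Sequence


def to_float32(x: float) -> float:
    """Round a Python float (a C double) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def sample_dirichlet(alpha: Sequence[float], rng=random) -> List[float]:
    """Draw one Dirichlet sample by normalizing Gamma(alpha_i, 1) draws.

    The gamma draws and the normalization happen in double precision; the
    result is cast to float32 only at the end (promote, compute, cast back).
    """
    gammas = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(gammas)
    return [to_float32(g / total) for g in gammas]


# Why promotion helps: a tiny gamma draw that is fine in double underflows to 0 in float32.
tiny = 1e-50
print(tiny > 0.0, to_float32(tiny) == 0.0)  # True True
```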

Added some tests and checked that there is no perf regression. My experience with C++ is very limited, so apologies in advance if I missed something basic. cc. ailzhang, fritzo, fmassa

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17488

Differential Revision:

D14410301

Pulled By: ezyang

fbshipit-source-id:

62b2f694b4642685eab06db96d74ce28e05c3992

Gregory Chanan [Tue, 19 Mar 2019 14:57:21 +0000 (07:57 -0700)]

Kill Backend constructor of TensorOptions. (#18137)

Summary:

It's wrong and unused. Use one of the many other constructors instead :).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18137

Differential Revision:

D14508364

Pulled By: gchanan

fbshipit-source-id:

19c6ff78ad9d9221d0874425edd02b78627c4ca7

Gregory Chanan [Tue, 19 Mar 2019 14:50:31 +0000 (07:50 -0700)]

Remove deviceTypeToBackend, which is underspecified. (#18135)

Summary:

There are multiple backends for a device type, so we just kill this function.

Also, kill a getNonVariableType instance, which was also underspecified.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18135

Differential Revision:

D14507474

Pulled By: gchanan

fbshipit-source-id:

fc791a76d4b851b23d09a070725f3838621eb13d

Gregory Chanan [Tue, 19 Mar 2019 14:36:58 +0000 (07:36 -0700)]

Stop generating unimplemented type methods. (#18144)

Summary:

This gets rid of 'aten_sparse' which was used at one time with legacy THS code, but is now only overloaded in native_parse.py.

The way that 'aten_sparse' worked was wonky -- it extended all backends (default [CPU, CUDA]) to include sparse.

But this is totally unnecessary; we already have the backends we need to generate for from type_method_definition_dispatch.

codegen changes: https://github.com/gchanan/pytorch/blob/fc37c8e171b7ebd1b1755469cf6a146a2abedc13/diff.txt

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18144

Reviewed By: ezyang

Differential Revision:

D14511324

Pulled By: gchanan

fbshipit-source-id:

8bb4ac4cf0985f8756790779a22bc229e18e8e7f

Bharat Raghunathan [Tue, 19 Mar 2019 14:05:29 +0000 (07:05 -0700)]

Corrected type of 'swap' in torch.nn.TripletMarginLoss (#18115)

Summary:

Fix #16428 by correcting type of 'swap' from `float` to `bool`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18115

Differential Revision:

D14516615

Pulled By: ezyang

fbshipit-source-id:

c61a45d533f3a443edf3c31c1ef3d9742bf46d2b

Deepali Chourasia [Tue, 19 Mar 2019 06:06:03 +0000 (23:06 -0700)]

handle scenario when GPU support is not available and p2p_access_pattern is empty (#17974)

Summary:

Observed that when there is no GPU support available, `workspace` sets `GetGpuPeerAccessPattern` to `[]` in

https://github.com/pytorch/pytorch/blob/master/caffe2/python/workspace.py#L79

and this case is not handled in https://github.com/pytorch/pytorch/blob/master/caffe2/python/data_parallel_model.py#L1065.
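A minimal sketch of the kind of guard this needs, assuming the pattern is a square boolean matrix indexed by device id (nested lists or a 2-D array), or empty when there is no GPU support. `peer_access_available` is a hypothetical helper, not the code patched in `data_parallel_model.py`.

```python
from typing import Sequence


def peer_access_available(pattern, devices: Sequence[int]) -> bool:
    """Return True only if every pair of `devices` can access each other's memory."""
    if len(pattern) == 0:
        # No GPU support: there is no peer-access information, so don't assume P2P.
        return False
    return all(bool(pattern[i][j]) for i in devices for j in devices)
```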

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17974

Differential Revision:

D14517066

Pulled By: ezyang

fbshipit-source-id:

186911d95c07e9a55ab82a41d0c7c919e4281bb4

Lutz Roeder [Tue, 19 Mar 2019 03:51:12 +0000 (20:51 -0700)]

Fix Caffe2 operator schemas (#15462) (#13229) (#18109)

Summary:

Maratyszcza harouwu yinghai

This has been broken since #13065: `c_str()` returns a pointer that isn't permanent.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18109

Differential Revision:

D14516622

Pulled By: ezyang

fbshipit-source-id:

7113d92eac4f61479c4c7b323cf78cc8aa00b17e

Junji Hashimoto [Tue, 19 Mar 2019 03:44:05 +0000 (20:44 -0700)]

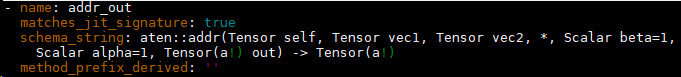

Increase line-width of Declarations.yaml (#18050)

Summary:

There are some line breaks in schema_string of Declarations.yaml.

Is this valid yaml? I am reading yaml-spec.

It seems that the “|” indicator or single/double quote is required to insert line-break.

https://yaml.org/spec/1.2/spec.html

Could you increase line-width of yaml to avoid newline?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18050

Differential Revision:

D14516694

Pulled By: ezyang

fbshipit-source-id:

1db9f3bf131b54a783d668de973915892603189e

svcscm [Tue, 19 Mar 2019 03:33:38 +0000 (20:33 -0700)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

eeeec4229e05916f2c17e525aee5ac4465ef52db

Zhang Dong [Tue, 19 Mar 2019 03:22:20 +0000 (20:22 -0700)]

delete unnecessary file .gitkeep (#18136)

Summary:

delete unnecessary file .gitkeep in /pytorch/tree/master/torch/csrc/nn

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18136

Differential Revision:

D14516584

Pulled By: ezyang

fbshipit-source-id:

a7555693cb3df1c5e37fcd3ca9bb379a2258f2d1

David Riazati [Tue, 19 Mar 2019 01:15:17 +0000 (18:15 -0700)]

Attribute serialization (#17423)

Summary:

Allows serialization/loading of attributes (`IValue`s of any type).

* metadata (attribute name, type) is stored in the `model.json`

* The binary format is a subset of the `pickle` module that supports the operations necessary for `IValue`s

* Attributes are serialized in the order they are defined on a module to a list in a single `attributes` file, with submodule attributes coming first. This order directly matches the order attributes are listed in `model.json`

* This can be inspected in Python with `pickle.load()` or with `pickletools` (PyTorch need not be installed for this to work)

* A class is used to store a tensor's index into the tensor table of the model, so to unpickle the file you have to use a custom Unpickler:

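```python
import pickle

class TensorID(object):
    def __setstate__(self, id):
        # `id` is the tensor's index into the model's tensor table
        self.id = id

class JitUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        if module == '__main__' and name == 'TensorID':
            return TensorID
        # defer to the default lookup for everything else
        return pickle.Unpickler.find_class(self, module, name)

with open("my_model/attributes.pkl", "rb") as f:
    attributes = JitUnpickler(f).load()
```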

* pickle format: https://svn.python.org/projects/python/trunk/Lib/pickletools.py

* It does not currently support or guarantee correct import of anything written out with `pickle` directly (i.e. if you edit `attributes` with `pickle` instead of our tools)
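As a quick illustration of inspecting a pickle stream without PyTorch, the snippet below disassembles a protocol-2 pickle of a plain list with `pickletools`. It uses only the standard library and does not reproduce the JIT's `attributes` file; the list contents are arbitrary. `pickletools.optimize` drops `PUT`/`BINPUT` opcodes whose memo slot is never read back, which is the same kind of saving as emitting only the `BINPUT` ops that are needed.

```python
import io
import pickle
import pickletools

# A protocol-2 pickle of a plain list, similar in spirit to a list of attributes.
data = pickle.dumps([1, "two", 3.0], protocol=2)

listing = io.StringIO()
pickletools.dis(data, out=listing)
print(listing.getvalue())  # one opcode per line, e.g. PROTO, EMPTY_LIST, BINPUT, ...

# Remove memo writes (BINPUT) that no later GET ever reads.
optimized = pickletools.optimize(data)
assert pickle.loads(optimized) == pickle.loads(data)
```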

This also fixes #17683 and fixes #16367.

Followup Work:

* document format / choice of pickle: #17951

* create an example

* list specializations

* int size specializations, large binputs

* do a first pass over attributes to output only necessary `BINPUT` ops

* attribute reassignment (e.g. `self.my_attribute = new_value`)

* `tensor.save("some_checkpoint.pkl")` support with tensors embedded in Pickle file

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17423

Differential Revision:

D14470965

Pulled By: driazati

fbshipit-source-id:

6a21a9939efdbe59b4bc57fd31d6d630bab5297e

Jongsoo Park [Mon, 18 Mar 2019 23:28:47 +0000 (16:28 -0700)]

fix bug in pool_dnnlowp_op_avx2.cc (#18141)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18141

VLEN should've been 32

Reviewed By: jianyuh

Differential Revision:

D14510780

fbshipit-source-id:

ddf12746e1c69677a268432432ddb088cc210084

svcscm [Mon, 18 Mar 2019 23:16:51 +0000 (16:16 -0700)]

Updating submodules

Reviewed By: yns88

fbshipit-source-id:

ed297c07c681f5f45d3f99edf48680015ca5b138

Vishwak Srinivasan [Mon, 18 Mar 2019 23:01:02 +0000 (16:01 -0700)]

Rename gesv to solve (#18060)

Summary:

Changelog:

- Renames `gesv` to `solve` to remain consistent with `cholesky_solve`.

- Rename all tests, fix callsites

- Create a tentative alias for `solve` under the name `gesv`, and add a deprecation warning to discourage its use.
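The rename-plus-deprecated-alias pattern can be sketched in plain Python. The toy `solve` below handles only a 2x2 system via Cramer's rule and is a hypothetical stand-in; the `(b, A)` argument order is our assumption, meant to echo `torch.solve(B, A)`. The point is that the old name forwards to the new one and emits a `DeprecationWarning`.

```python
import warnings
from typing import List, Sequence


def solve(b: Sequence[float], A: Sequence[Sequence[float]]) -> List[float]:
    """Toy stand-in: solve the 2x2 system A x = b with Cramer's rule."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("singular matrix")
    x1 = (b[0] * a22 - a12 * b[1]) / det
    x2 = (a11 * b[1] - a21 * b[0]) / det
    return [x1, x2]


def gesv(b: Sequence[float], A: Sequence[Sequence[float]]) -> List[float]:
    """Deprecated alias kept for backward compatibility; forwards to solve()."""
    warnings.warn("gesv is deprecated; use solve instead", DeprecationWarning, stacklevel=2)
    return solve(b, A)
```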

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18060

Differential Revision:

D14503117

Pulled By: zou3519

fbshipit-source-id:

99c16d94e5970a19d7584b5915f051c030d49ff5

James Reed [Mon, 18 Mar 2019 21:06:31 +0000 (14:06 -0700)]

Modify BeamSearch to support CharSourceEncoder (#18107)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18107

Pull Request resolved: https://github.com/pytorch/translate/pull/396

also:

1. fix issues with OptionalType not having a createWithContainedType (PyTorch diff)

2. Delete tests for ONNX full beam search export (nobody is using it and it just makes things harder. Currently ONNX doesn't support `_unwrap_optional`)

Reviewed By: jmp84

Differential Revision:

D14483771

fbshipit-source-id:

0e37ef1cb5a16d03a535eef808b0488b98802128

Narine Kokhlikyan [Mon, 18 Mar 2019 19:21:52 +0000 (12:21 -0700)]

Circular Convolution Function via circular padding (#17240)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17240

Added circular padding in addition to zero padding to Conv1D, Conv2D and Conv3D based on the solution suggested in: https://github.com/pytorch/pytorch/issues/3858
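A minimal 1-D sketch of the idea: pad the signal by wrapping around, then run an ordinary unpadded ("valid") convolution, which yields a circular convolution of the same length. This is plain Python for illustration, not the PR's implementation. In later PyTorch releases, the Conv modules expose this behavior as `padding_mode='circular'`.

```python
from typing import List, Sequence


def circular_pad_1d(x: Sequence[float], pad: int) -> List[float]:
    """Wrap-around padding: prepend the last `pad` items and append the first `pad`."""
    if not 0 <= pad <= len(x):
        raise ValueError("pad must be between 0 and len(x)")
    if pad == 0:
        return list(x)  # x[-0:] would be the whole list, so special-case it
    return list(x[-pad:]) + list(x) + list(x[:pad])


def conv1d_valid(x: Sequence[float], w: Sequence[float]) -> List[float]:
    """Plain cross-correlation with no padding and stride 1, as Conv1d computes it."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) for i in range(len(x) - k + 1)]


def circular_conv1d(x: Sequence[float], w: Sequence[float]) -> List[float]:
    """Circularly pad by (k - 1) // 2 on each side (odd k), then convolve."""
    return conv1d_valid(circular_pad_1d(x, (len(w) - 1) // 2), w)
```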

Reviewed By: ezyang

Differential Revision:

D14126416

fbshipit-source-id:

a2f1587503ee0cfff98d5cb0d5b0a600ef8aaeb4

Thomas Viehmann [Mon, 18 Mar 2019 19:07:38 +0000 (12:07 -0700)]

don't include /usr/include when nvcc is in /usr/bin (#18127)

Summary:

...because gcc will have failures with very strange error messages if you do.

This affects people with Debian/Ubuntu-provided NVCC; the PR should not change anything for anyone else.
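The rule can be sketched as follows, assuming the CUDA include directory is derived as `<dir of nvcc>/../include`. `cuda_include_dirs` is a hypothetical helper; the build script the PR actually changes is not shown here.

```python
import posixpath
from typing import List


def cuda_include_dirs(nvcc_path: str) -> List[str]:
    """Derive the CUDA include dir from nvcc's location, skipping /usr/include."""
    cuda_home = posixpath.dirname(posixpath.dirname(nvcc_path))  # e.g. /usr/local/cuda
    include_dir = posixpath.join(cuda_home, "include")
    if include_dir == "/usr/include":
        # Distro-packaged nvcc lives in /usr/bin; adding /usr/include makes gcc fail
        # with strange errors (per the commit message), so add nothing.
        return []
    return [include_dir]
```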

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18127

Differential Revision:

D14504386

Pulled By: soumith

fbshipit-source-id:

1aea168723cdc71cdcfffb3193ee116108ae755e

Michael Suo [Mon, 18 Mar 2019 16:56:25 +0000 (09:56 -0700)]

fix double free in test_jit (#18121)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18121

ghimport-source-id:

70c273bfbcb68f7b25cf87f5614c662960864758

Stack from [ghstack](https://github.com/ezyang/ghstack):

* **#18121 [jit] fix double free in test_jit**

These definitions used to be in anonymous namespace so they weren't exported from the translation unit. #18071 put those in a `test` namespace so I guess they were getting their destructors called twice on exit somehow. Making them static again fixes the problem.

Reviewed By: ezyang

Differential Revision:

D14498349

fbshipit-source-id:

f969781695dcbebdfcfce667fce5b986222a373e

Huitong Qiu [Mon, 18 Mar 2019 15:49:44 +0000 (08:49 -0700)]

Replace resize_dim with set_sizes_and_strides in THTensor_(squeeze) (#18059)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18059

Replace resize_dim() with set_sizes_and_strides() in `THTensor_(squeeze)` in aten/src/TH/generic/THTensor.cpp and `THCTensor_(squeeze)` in aten/src/THC/generic/THCTensor.cpp
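Squeeze only changes metadata, so the new sizes and strides can be computed up front and applied together in a single `set_sizes_and_strides()` call. A pure-Python sketch of that computation follows. The helper name is hypothetical, and edge cases such as an all-size-1 tensor (which squeezes to zero dimensions here) are not taken from TH.

```python
from typing import List, Sequence, Tuple


def squeeze_sizes_strides(sizes: Sequence[int], strides: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Drop every size-1 dimension, keeping the strides of the remaining ones."""
    kept = [(size, stride) for size, stride in zip(sizes, strides) if size != 1]
    return [s for s, _ in kept], [st for _, st in kept]
```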

Reviewed By: ezyang

Differential Revision:

D14471066

fbshipit-source-id:

1c8c412ff09246c4df6843736e3bf0279bfadea8

Tongzhou Wang [Mon, 18 Mar 2019 04:36:02 +0000 (21:36 -0700)]

update exp. family doc (#18118)

Summary:

Sphinx doesn't understand hyphenation: it does not merge the two halves of a hyphenated word together in the HTML.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18118

Differential Revision:

D14498012

Pulled By: mrshenli

fbshipit-source-id:

d6f4cfddc0a8e3a8f91578da43c26ca9c6fff3ce

Gregory Chanan [Sun, 17 Mar 2019 22:37:42 +0000 (15:37 -0700)]

Change one_hot from IndexTensor to Tensor. (#18073)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18073

ghimport-source-id:

f4dadebafa0423c4c5a0e46c15b38129402d830a

Stack:

* #18072 properly device_guard IndexTensor and BoolTensor.

* **#18073 Change one_hot from IndexTensor to Tensor.**

There is no codegen change.

Reviewed By: ezyang

Differential Revision:

D14485248

fbshipit-source-id:

ee2ba8e5dcbbbaf0214a026c8e7ed4e6712becb0

Gregory Chanan [Sun, 17 Mar 2019 22:37:41 +0000 (15:37 -0700)]

properly device_guard IndexTensor and BoolTensor. (#18072)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18072

ghimport-source-id:

9653731602c72f299e095dd50e3afe6bcc8b01d6

Stack:

* **#18072 properly device_guard IndexTensor and BoolTensor.**

* #18073 Change one_hot from IndexTensor to Tensor.

Currently IndexTensor and BoolTensors do not have device_guards applied to them.

This is bad in the case where the only tensor(s) are IndexTensors or BoolTensors, because no device guard is present.

The only case where this currently happens is with one_hot, where it ends up not mattering because of the way the implementation is written. But I wanted to make sure we are covered here.

Reviewed By: ezyang

Differential Revision:

D14485249

fbshipit-source-id:

e57b28086fa1ad2fdd248bb1220e8a2e42da03e1

Michael Suo [Sun, 17 Mar 2019 21:53:41 +0000 (14:53 -0700)]

fix corner case for optional aliasing (#18093)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18093

ghimport-source-id:

021adc52aa7bfe5fff74531c76a8cd28cab30b2a

Stack:

* **#18093 [jit] fix corner case for optional aliasing**

Occasionally the compiler can insert constant Nones to make types line up. In that case, don't try to make a pointer from the optional type to None, since we know statically that None won't be mutated or whatever.

Reviewed By: shannonzhu

Differential Revision:

D14493004

fbshipit-source-id:

6564065f39d99ee5af664f3a0fe235892973d9be

Jianyu Huang [Sat, 16 Mar 2019 22:04:02 +0000 (15:04 -0700)]

Typo fix (#18089)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18089

Typo fix for the fully connected layer documentation.

Reviewed By: jspark1105

Differential Revision:

D14488632

fbshipit-source-id:

ca0271ca0250c1d653ed7f250e8588f7b2ce1056

Duc Ngo [Sat, 16 Mar 2019 19:21:55 +0000 (12:21 -0700)]

Caffe2 - Add flag to fail if floating-point exceptions are detected in operator runs (#18040)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18040

Add a flag to fail if floating-point exceptions are detected in operator runs.

Sample exception:

Exception [enforce fail at operator.h:837] !std::fetestexcept(FE_DIVBYZERO). Division by zero floating point exception (FE_DIVBYZERO) reported.

Error from operator:

input: "1" input: "0" output: "out" name: "" type: "Div"

Reviewed By: jspark1105

Differential Revision:

D14467731

fbshipit-source-id:

fad030b1d619a5a661ff2114edb947e4562cecdd

Junjie Bai [Sat, 16 Mar 2019 16:03:17 +0000 (09:03 -0700)]

Remove ComputeLibrary submodule

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18052

Reviewed By: ezyang

Differential Revision:

D14477355

fbshipit-source-id:

c56b802f6d69701596c327cf9af6782f30e335fa

Jongsoo Park [Sat, 16 Mar 2019 01:02:53 +0000 (18:02 -0700)]

remove unused parameters in optimizer tests (#18084)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18084

The data_strategy parameter was not used in some of the unit tests for optimizers.

Reviewed By: hyuen

Differential Revision:

D14487830

fbshipit-source-id:

d757cd06aa2965f4c0570a4a18ba090b98820ef4

Sebastian Messmer [Fri, 15 Mar 2019 23:54:11 +0000 (16:54 -0700)]

Specify overload name in function schema (#18037)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18037

The FunctionSchema can now store an overload name and the parser knows how to parse it. Specify like this:

my_func.overload1(arg1: Tensor) -> Tensor

my_func.overload2(arg1: Tensor, arg2: Tensor) -> Tensor
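To make the `name.overload(args) -> ret` shape concrete, here is a small regex-based sketch that splits such a header into its parts. It is a hypothetical illustration, not the actual FunctionSchema parser, and it does not parse the argument list itself.

```python
import re
from typing import Tuple

_SCHEMA_RE = re.compile(
    r"^(?P<name>[\w:]+)(?:\.(?P<overload>\w+))?\((?P<args>.*)\)\s*->\s*(?P<ret>.+)$"
)


def parse_schema_header(schema: str) -> Tuple[str, str, str, str]:
    """Split 'name[.overload](args) -> ret'; the overload is '' when absent."""
    m = _SCHEMA_RE.match(schema.strip())
    if m is None:
        raise ValueError(f"not a schema: {schema!r}")
    return m.group("name"), m.group("overload") or "", m.group("args"), m.group("ret")
```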

Reviewed By: zdevito

Differential Revision:

D14467497

fbshipit-source-id:

8832b32f07351bb61090357b17b77a6a2fed3650

Sebastian Messmer [Fri, 15 Mar 2019 23:54:11 +0000 (16:54 -0700)]

Expose c10 cuda ops to caffe2 (#18036)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18036

- Add macros to export c10 cuda operators to caffe2 frontend

- Instead of having a separate caffe2 registry for the c10 operator wrappers, use the existing caffe2 registries

Reviewed By: ezyang

Differential Revision:

D14467495

fbshipit-source-id:

7715ed2e38d2bbe16f1446ae82c17193a3fabcb9

Jack Montgomery [Fri, 15 Mar 2019 23:43:18 +0000 (16:43 -0700)]

update of fbcode/foxi to 2bcc4064c90e87b9638615c733485f07c47b7558 (#18070)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18070

Previous import was d1f45b1a2b1585d0e9bc65e15e463db344fc3ff6

Included changes:

- **[2bcc406](https://github.com/houseroad/foxi/commit/2bcc406)**: Merge pull request #7 from jackm321/tracing_fixes <Jack Montgomery>

- **[c39033c](https://github.com/houseroad/foxi/commit/c39033c)**: Fixes for tracing events <Jack Montgomery>

- **[50912cf](https://github.com/houseroad/foxi/commit/50912cf)**: Merge pull request #5 from jackm321/add_trace_events <Jack Montgomery>

- **[ba2fdcb](https://github.com/houseroad/foxi/commit/ba2fdcb)**: Merge pull request #5 from jackm321/add_trace_events <Jack Montgomery>

- **[7d42b12](https://github.com/houseroad/foxi/commit/7d42b12)**: address comments <Jack Montgomery>

- **[dcabd8d](https://github.com/houseroad/foxi/commit/dcabd8d)**: Add trace events interface <Jack Montgomery>

Reviewed By: houseroad

Differential Revision:

D14483201

fbshipit-source-id:

f51ed869c9a89521079df89903abc0ac0a45ac7b

Gregory Chanan [Fri, 15 Mar 2019 21:16:22 +0000 (14:16 -0700)]

Add backwards compatibility and other fixes to Dispatch macros. (#17996)

Summary:

Changes:

1) https://github.com/pytorch/pytorch/pull/17527 changed dispatch macros to be ScalarType based instead of at::Type based. This broke cpp extensions that relied on dispatch macros. Since IMO these should be ScalarType based (and some extensions have already updated), we allow either at::Type or at::ScalarType to be passed, but passing at::Type will result in a deprecation warning.

2) Reintroduce macros that were deleted (AT_DISPATCH_ALL_TYPES_AND_HALF, AT_DISPATCH_COMPLEX_TYPES, AT_DISPATCH_ALL_TYPES_AND_HALF_AND_COMPLEX, AT_DISPATCH_ALL_TYPES_AND_COMPLEX); the AND_HALF ones now give a deprecation warning because more extensible macros were introduced in their place.

3) Makes AT_DISPATCH_ALL_TYPES_AND_COMPLEX_AND into a ScalarType based macro (and updates usages). This was the result of a logical merge conflict.

4) Adds a new macro, C10_DEPRECATED_MESSAGE for passing a deprecated message to the compiler. I didn't spend much time seeing if this can be enabled for versions before C++14.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17996

Reviewed By: ezyang

Differential Revision:

D14446203

Pulled By: gchanan

fbshipit-source-id:

1da56e2e9c15aa8f913ebbf6bf1110c5b6dc375e

Elias Ellison [Fri, 15 Mar 2019 20:53:23 +0000 (13:53 -0700)]

Breakup Test Misc (batch 1/2) (#18071)

Summary:

Break up test_misc so that the tests for a file `filename` live in `test_filename`. I think we might want to wait on moving test files into the source directory, since that would involve moving some tests over to the C10 folder, and this goes 99% of the way for test discoverability IMO anyway.

I added a file test_utils for common functions invoked in the tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18071

Differential Revision:

D14485787

Pulled By: eellison

fbshipit-source-id:

dcb20d1978d490999d435ea20c1d0503413a5c80

yuanhaoxie [Fri, 15 Mar 2019 20:09:18 +0000 (13:09 -0700)]

Remove the identical if branch (#18019)

Summary:

The elif branch and the else branch have the same content.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18019

Differential Revision:

D14475107

Pulled By: ezyang

fbshipit-source-id:

5075cc938f57649af7537de1a7c9d76ea976cafc

Roy Li [Fri, 15 Mar 2019 19:52:57 +0000 (12:52 -0700)]

Remove Type::elementSizeInBytes

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17785

Reviewed By: ezyang

Differential Revision:

D14379074

fbshipit-source-id:

60727f187d61eb571b144bd6eed4dd4908da0b51

Michael Kösel [Fri, 15 Mar 2019 19:39:04 +0000 (12:39 -0700)]

add index and count to list (#17446)

Summary:

see https://github.com/pytorch/pytorch/issues/16662

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17446

Differential Revision:

D14461293

Pulled By: Krovatkin

fbshipit-source-id:

03572467cdf85efc909c1864c0558a93085c8ff3

Lara Haidar-Ahmad [Fri, 15 Mar 2019 19:10:32 +0000 (12:10 -0700)]

ONNX Export IsNan op

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17698

Reviewed By: zrphercule

Differential Revision:

D14470646

Pulled By: houseroad

fbshipit-source-id:

d3e6adc83c4f9fa288c5fe0ae4c6af71fdd47905

Michael Suo [Fri, 15 Mar 2019 19:00:50 +0000 (12:00 -0700)]

support serialization of classes (#17856)

Summary:

Stack:

:black_circle: **#17856 [jit] support serialization of classes** [:yellow_heart:](https://our.intern.facebook.com/intern/diff/D14402599/)

Add support for saving/loading TorchScript modules that depend on user-defined classes.

We track class dependencies the same way we track tensor constants, then write them all out such that we can just compile them in order before compiling the module hierarchy.
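A minimal sketch of ordering class definitions so that each one is compiled after the classes it depends on, assuming dependencies are known as a name-to-list-of-names map. The commit says dependencies are tracked the way tensor constants are. Whether that amounts to an explicit depth-first topological sort like this one is our assumption, and `dependency_order` is a hypothetical name.

```python
from typing import Dict, List


def dependency_order(deps: Dict[str, List[str]]) -> List[str]:
    """Return class names with every dependency listed before its dependents."""
    order: List[str] = []
    state: Dict[str, str] = {}  # name -> "visiting" | "done"

    def visit(name: str) -> None:
        if state.get(name) == "done":
            return
        if state.get(name) == "visiting":
            raise ValueError(f"cyclic class dependency at {name}")
        state[name] = "visiting"
        for dep in deps.get(name, []):
            visit(dep)
        state[name] = "done"
        order.append(name)

    for name in deps:
        visit(name)
    return order
```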

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17856

Reviewed By: shannonzhu

Differential Revision:

D14461599

Pulled By: suo

fbshipit-source-id:

7115f87e069fd00dc8381d7de9997864fef7ea9f

Michael Kösel [Fri, 15 Mar 2019 18:43:33 +0000 (11:43 -0700)]

add reverse to list (#17001)

Summary:

Add reverse functionality to list. See https://github.com/pytorch/pytorch/issues/16662

```python
import torch

@torch.jit.script
def foo():
    a = [1, 2, 3, 4]
    a.reverse()
    return a
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17001

Reviewed By: eellison

Differential Revision:

D14092019

Pulled By: driazati

fbshipit-source-id:

b353c763677c22312b64dde0db268e2988610ba1

Lu Fang [Fri, 15 Mar 2019 18:41:31 +0000 (11:41 -0700)]

1/2 Add Tracing support for C2 Ops (#17899)

Summary:

The C10 ops are not registered as custom ops in PyTorch, so we have to add explicit support for them too.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17899

Reviewed By: dzhulgakov

Differential Revision:

D14436999

Pulled By: houseroad

fbshipit-source-id:

a31fdf13a5c84f9b156a7288e0ffa57deb23b83f

Richard Zou [Fri, 15 Mar 2019 14:41:08 +0000 (07:41 -0700)]

Delete dead code in THTensorMoreMath.cpp (#17993)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17993

ghimport-source-id:

5427773f6306bdeddffd9a3ae032acc3f253f458

Stack:

* #17926 Implement at::has_internal_overlap helper function

* #17927 Error out on in-place (unary) ops on tensors that have internal overlap

* **#17993 [easy] Delete dead code in THTensorMoreMath.cpp**

We seem to have new implementations already for these in ATen.

Reviewed By: ezyang

Differential Revision:

D14457838

fbshipit-source-id:

8481aad74b2127bd28c0f3e09740889fc0488a31

Richard Zou [Fri, 15 Mar 2019 14:41:08 +0000 (07:41 -0700)]

Error out on in-place (unary) ops on tensors that have internal overlap (#17927)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17927

ghimport-source-id:

626d321e430b6b5c0ea3aa1eb9df8c1e2d058bf8

Stack:

* #17926 Implement at::has_internal_overlap helper function

* **#17927 Error out on in-place (unary) ops on tensors that have internal overlap**

On the way to #17935.

Works for CPU and CUDA on the following ops:

- abs_, acos_, asin_, atan_, ceil_, cos_, erf_, erfc_, exp_, expm1_

- floor_, log_, log10_, log1p_, log2_, round_, rsqrt_,

- sin_, sqrt_, tan_, tanh_, trunc_

This PR adds a check to see if the out/result tensor has internal overlap. If it does, then we error out because the result **may** be incorrect.

This is overly conservative; there are some cases where, if the result is the same as the input, the in-place operation is OK (such as floor_, round_, and trunc_). However, the current code isn't organized in a way that makes this easy to check, so enabling those will come in the future.
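To see why the result *may* be incorrect, here is a pure-Python simulation of a naive in-place unary op over a strided view. The stride-0 view mimics an expanded tensor whose elements all share one storage slot. The helpers are hypothetical, and real kernels may traverse elements in a different order or vectorize.

```python
import itertools
import math
from typing import Callable, Iterator, List, Sequence


def element_offsets(sizes: Sequence[int], strides: Sequence[int], storage_offset: int = 0) -> Iterator[int]:
    """Yield the storage offset of every element of a strided view, in row-major order."""
    for index in itertools.product(*(range(n) for n in sizes)):
        yield storage_offset + sum(i * s for i, s in zip(index, strides))


def naive_inplace_unary(storage: List[float], sizes: Sequence[int], strides: Sequence[int],
                        fn: Callable[[float], float]) -> List[float]:
    """Apply fn element by element, writing each result back into the shared storage."""
    for off in element_offsets(sizes, strides):
        storage[off] = fn(storage[off])
    return storage


# Three "elements" alias one slot, so the op is applied to it three times.
print(naive_inplace_unary([1.0], [3], [0], lambda v: 2.0 * v))  # [8.0], not [2.0]
# An idempotent op such as floor gives the same result as a single application.
print(naive_inplace_unary([2.7], [3], [0], math.floor))          # [2]
```

The idempotent case is why ops like floor_, round_, and trunc_ could in principle be allowed.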

Reviewed By: ezyang

Differential Revision:

D14438871

fbshipit-source-id:

15e12bf1fdb2ab7f74bb806e22bc74840bd6abd1

Richard Zou [Fri, 15 Mar 2019 14:41:08 +0000 (07:41 -0700)]

Implement at::has_internal_overlap helper function (#17926)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17926

ghimport-source-id:

9f7572b5d43e474492363fa17dcb86a6c27ca13c

Stack:

* **#17926 Implement at::has_internal_overlap helper function**

* #17927 Error out on in-place (unary) ops on tensors that have internal overlap

On the way to #17935.

Checks if a tensor's sizes/strides indicate that multiple elements share the same memory location. This problem in general is hard, so at::has_internal_overlap implements two heuristics and avoids solving the general problem:

- if a tensor is contiguous, it cannot have internal overlap

- if a tensor has any zero strides, it does have internal overlap

- otherwise, return MemOverlap::kTooHard to indicate that there might be overlap, but we don't know.
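A pure-Python sketch of the heuristics listed above. Only `kTooHard` is named in the commit. The other enum members are assumed, as is the contiguity rule (strides of size-1 dims are ignored, and empty tensors count as non-overlapping). The zero-stride rule is narrowed to dimensions of size > 1, since a zero stride on a size-1 dimension does not alias anything by itself.

```python
from enum import Enum
from typing import Sequence


class MemOverlap(Enum):
    NO = 0        # assumed name: definitely no overlap
    YES = 1       # assumed name: definitely overlaps
    TOO_HARD = 2  # mirrors MemOverlap::kTooHard from the commit message


def is_contiguous(sizes: Sequence[int], strides: Sequence[int]) -> bool:
    """Row-major contiguity check; strides of size-1 dims are ignored."""
    if 0 in sizes:
        return True  # an empty tensor has no elements that could overlap
    expected = 1
    for size, stride in zip(reversed(sizes), reversed(strides)):
        if size != 1 and stride != expected:
            return False
        expected *= size
    return True


def has_internal_overlap(sizes: Sequence[int], strides: Sequence[int]) -> MemOverlap:
    if is_contiguous(sizes, strides):
        return MemOverlap.NO
    if any(stride == 0 and size > 1 for size, stride in zip(sizes, strides)):
        return MemOverlap.YES
    return MemOverlap.TOO_HARD
```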

Reviewed By: ezyang

Differential Revision:

D14438858

fbshipit-source-id:

607ab31771315921ab6165b2a1f072ac3e75925a

Gregory Chanan [Fri, 15 Mar 2019 14:36:13 +0000 (07:36 -0700)]

Fix truncation of default float values in JIT signatures. (#18044)

Summary:

In Python 2, float values get truncated. We are storing default float values as floats (not 100% sure why?), which results in the defaults being truncated in the JIT and not matching the (specified) native function signatures.
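One well-known way a float loses digits in Python 2 is `str()`, which formats with 12 significant digits, while `repr()` round-trips. Whether the codegen actually goes through `str()` is not stated above, so treat this as an illustration of the effect only. The snippet runs on Python 3 and emulates the Python 2 format with `"%.12g"`.

```python
x = 0.1234567890123456

# Python 2's str(float) formatted with 12 significant digits, equivalent to "%.12g".
py2_style = "%.12g" % x
# repr() produces the shortest string that round-trips to the same double.
round_trip = repr(x)

print(py2_style)                                      # 0.123456789012
print(float(py2_style) == x, float(round_trip) == x)  # False True
```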

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18044

Reviewed By: ezyang

Differential Revision:

D14469868

Pulled By: gchanan

fbshipit-source-id:

a456de599e8dab106966bcac7a6033f02ce3cdd2

Choongwoo Han [Fri, 15 Mar 2019 14:33:31 +0000 (07:33 -0700)]

Allow None for checkpoint (#17969)

Summary:

Currently, we cannot run a checkpointed function with a None argument.

```python

out = torch.utils.checkpoint.checkpoint(run_fn, input_var, None)

```

```
  File "/home/tunz/anaconda3/envs/torchdev/lib/python3.7/site-packages/torch/utils/checkpoint.py", line 14, in detach_variable
    x = inp.detach()
AttributeError: 'NoneType' object has no attribute 'detach'
```

This PR makes the checkpoint function safely handle None arguments.
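A duck-typed sketch of a `detach_variable` that passes None and other non-tensor inputs through untouched. `FakeTensor` is a stand-in so the sketch runs without PyTorch. The real code presumably checks for `torch.Tensor` directly, so the `hasattr` test here is an assumption.

```python
from typing import Any, Tuple


class FakeTensor:
    """Minimal stand-in for torch.Tensor: just detach() and requires_grad."""

    def __init__(self, requires_grad: bool = False):
        self.requires_grad = requires_grad
        self.detached = False

    def detach(self) -> "FakeTensor":
        t = FakeTensor(requires_grad=False)
        t.detached = True
        return t


def detach_variable(inputs: Tuple[Any, ...]) -> Tuple[Any, ...]:
    """Detach tensor-like inputs; pass None and other non-tensors through unchanged."""
    out = []
    for inp in inputs:
        if inp is None or not hasattr(inp, "detach"):
            out.append(inp)
            continue
        x = inp.detach()
        x.requires_grad = inp.requires_grad  # keep the caller's grad flag on the detached copy
        out.append(x)
    return tuple(out)


res = detach_variable((FakeTensor(requires_grad=True), None, 3))
```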

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17969

Differential Revision:

D14475148

Pulled By: ezyang

fbshipit-source-id:

9afe9e9aac511a6df1e1620e9ac341536890d451

ttup7777 [Fri, 15 Mar 2019 14:26:38 +0000 (07:26 -0700)]

Fix unclosed files in download.py, test_onnxifi.py, test_trt.py (#18017)

Summary:

According to https://docs.python.org/3/tutorial/inputoutput.html, it is good practice to use the `with` keyword when dealing with file objects; otherwise, you should call `f.close()` to close the file and immediately free up any system resources used by it. Thus, I changed the file-opening code to use `with open() as f`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18017

Differential Revision:

D14475112

Pulled By: ezyang

fbshipit-source-id:

d1c0821e39cb8a09f86d6d08b437b4a99746416c

Junjie Bai [Fri, 15 Mar 2019 09:49:02 +0000 (02:49 -0700)]

Run multi-gpu (single host) resnet50 and resnext101 training in bench (#18043)

Summary:

This now works in ROCm 2.2.

cc xw285cornell

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18043

Differential Revision:

D14477493

Pulled By: bddppq

fbshipit-source-id:

4d2dab1d5dbdbd4d6189162c074b19c4e9882c7d

BowenBao [Fri, 15 Mar 2019 05:13:11 +0000 (22:13 -0700)]

Update nonzero onnx export (#18047)

Summary:

The output format of NonZero in ONNX (which follows NumPy: https://docs.scipy.org/doc/numpy/reference/generated/numpy.nonzero.html) differs from that in PyTorch:

In ONNX: `[rank_of_input, num_of_nonzeros]`, whereas in PyTorch: `[num_of_nonzeros, rank_of_input]`.

To resolve the difference, the exporter adds a Transpose op after the NonZero output.
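The two layouts can be compared with a pure-Python sketch for 2-D inputs. The function names are hypothetical, and nothing here calls into ONNX. The ONNX-style result is just the transpose of the PyTorch-style one, which is what the inserted Transpose op accounts for.

```python
from typing import List, Sequence


def nonzero_pytorch_layout(matrix: Sequence[Sequence[float]]) -> List[List[int]]:
    """Indices of nonzero entries of a 2-D nested list, shape [num_nonzeros, rank]."""
    return [[i, j] for i, row in enumerate(matrix) for j, v in enumerate(row) if v != 0]


def nonzero_onnx_layout(matrix: Sequence[Sequence[float]]) -> List[List[int]]:
    """The same indices in ONNX/NumPy layout [rank, num_nonzeros], i.e. the transpose."""
    rank = 2
    coords = nonzero_pytorch_layout(matrix)
    # Build one row per dimension explicitly so an all-zero input still yields `rank` rows.
    return [[c[d] for c in coords] for d in range(rank)]
```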

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18047

Differential Revision:

D14475081

Pulled By: ezyang

fbshipit-source-id:

7a3e4899f3419766b6145d3e9261e92859e81dc4

Jongsoo Park [Fri, 15 Mar 2019 05:07:58 +0000 (22:07 -0700)]

more careful use of auto in sparse operations (#17958)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17958

In some places, we need 64-bit for corner cases even though it's going to be rare.

In some places, we were using 64-bit unnecessarily.

Reviewed By: hyuen

Differential Revision:

D14435523

fbshipit-source-id:

e01ab73029ff780133af7ff4bbbe2e17926ed5a2

Junjie Bai [Fri, 15 Mar 2019 03:49:54 +0000 (20:49 -0700)]

Update caffe2 docker images tag to 253 (#18031)

Summary:

To use ROCm 2.2

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18031

Reviewed By: ezyang

Differential Revision:

D14469242

Pulled By: bddppq

fbshipit-source-id:

c969bcf95dabe067d7b1a2cf6e07209e11148ec1

Johannes M Dieterich [Fri, 15 Mar 2019 03:06:06 +0000 (20:06 -0700)]

Fix typo (#17949)

Summary:

Fix a very common typo in my name.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17949

Differential Revision:

D14475162

Pulled By: ezyang

fbshipit-source-id:

91c2c364c56ecbbda0bd530e806a821107881480

J M Dieterich [Fri, 15 Mar 2019 01:44:24 +0000 (18:44 -0700)]

Update to ROCm2.2 (#18007)

Summary:

ROCm 2.2 was released today; if we respin the CI docker images with the attached, PyTorch/Caffe2 will support ROCm 2.2.

Changes necessary:

* For the Ubuntu target, HIP PR 934 needs to be applied to fix the forceinline definition. ROCm 2.3 will contain this.

* Two unit tests proved flaky on different platforms; disable them defensively.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18007

Differential Revision:

D14473903

Pulled By: bddppq

fbshipit-source-id:

b1939f11d1c765a3bf71bb244b15f6ceb0e816d3

Michael Suo [Fri, 15 Mar 2019 00:27:22 +0000 (17:27 -0700)]

fix clang-tidy (#18030)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18030

ghimport-source-id:

d68781226eee923c90be862ef54693feef5f1c1a

Stack:

* **#18030 [jit] fix clang-tidy**

fix the following complaint

```
pytorch/torch/csrc/jit/ir.cpp:84:7: error: pass by value and use std::move [modernize-pass-by-value,-warnings-as-errors]
const std::string& delim = ", ")
^~~~~~~~~~~~~~~~~~
std::string
```

Reviewed By: shannonzhu

Differential Revision:

D14466714

fbshipit-source-id:

195cba335ae656db28fc6230b9e56ad208c88c29

David Riazati [Thu, 14 Mar 2019 23:45:31 +0000 (16:45 -0700)]

Allow fewer arguments than the max in ArgumentSpec (#17826)

Summary:

Fixes #17558

The flattened tuple `Optional[Tuple[int, int]]` could either result in 1 (`None`) or 2 (`int` and `int`) values, so allow this case in `ArgumentSpec`
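A hypothetical pure-Python model of why the flattened argument count varies (this is not `ArgumentSpec`'s C++ code). Flattening `None` yields one value, while flattening a 2-tuple yields two, so the check must accept anything up to the maximum rather than exactly the maximum.

```python
from typing import Any, List

MAX_FLAT_ARGS = 2  # Optional[Tuple[int, int]] flattens to at most two values


def flatten(value: Any) -> List[Any]:
    """Recursively flatten nested tuples into a flat list of leaf values."""
    if isinstance(value, tuple):
        leaves: List[Any] = []
        for item in value:
            leaves.extend(flatten(item))
        return leaves
    return [value]


def check_arg_count(value: Any) -> int:
    """Accept any flattened length up to the maximum, not only exactly the maximum."""
    n = len(flatten(value))
    if n > MAX_FLAT_ARGS:
        raise ValueError(f"expected at most {MAX_FLAT_ARGS} flattened values, got {n}")
    return n
```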

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17826

Differential Revision:

D14415290

Pulled By: driazati

fbshipit-source-id:

971bfa39502cfb8f08a991f16ffed6d138e48dc9

Lu Fang [Thu, 14 Mar 2019 22:39:25 +0000 (15:39 -0700)]

update of fbcode/foxi to d1f45b1a2b1585d0e9bc65e15e463db344fc3ff6 (#18028)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18028

Previous import was 520e8e135f1ad75959bf9b5bd15c361b8caeb8d6

Included changes:

- **[d1f45b1](https://github.com/houseroad/foxi/commit/d1f45b1)**: update the gitignore (#6) <Lu Fang>

- **[398135c](https://github.com/houseroad/foxi/commit/398135c)**: Remove static variable in header (#3) <Lu Fang>

- **[f817be1](https://github.com/houseroad/foxi/commit/f817be1)**: sync to ONNX cb544d07cc022e3fe83622fda9b2b1fa00b75b89 (#2) <Lu Fang>

Reviewed By: zrphercule

Differential Revision:

D14464213

fbshipit-source-id:

b5d166f05f7fd503dec11d676e219cc6c6a373f9

Edward Yang [Thu, 14 Mar 2019 22:25:35 +0000 (15:25 -0700)]

Use std::isnan instead of self-comparison. (#18021)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18021

ghimport-source-id:

03423ba47ba5900c2b400c4457b148147ce8b35e

Stack:

* **#18021 Use std::isnan instead of self-comparison.**

Signed-off-by: Edward Z. Yang <ezyang@fb.com>
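The commit message gives no rationale beyond its title. As a minimal illustration with hypothetical function names: NaN is the only floating-point value that compares unequal to itself, so `x != x` works as a NaN test, while `std::isnan` from `<cmath>` expresses the same check explicitly and is harder to misread.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Before: relies on NaN being the only value for which x != x holds.
bool is_nan_self_compare(double x) { return x != x; }

// After: states the intent directly.
bool is_nan(double x) { return std::isnan(x); }
```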

Reviewed By: soumith

Differential Revision:

D14460699

fbshipit-source-id:

d8feb7f3f0e93996bd1b4f4aea163548b1d12437

Yinghai Lu [Thu, 14 Mar 2019 21:45:28 +0000 (14:45 -0700)]

Unroll If ops when doing ONNXIFI transform (#18039)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18039

We basically flatten the whole net in order to ease the ONNXIFI transform. An alternative is to ONNXIFI the internal net of the If op, which could be done by adding interfacing inputs/outputs that connect the inputs/outputs referenced by the internal then_net or else_net to those of the If op. This is left as a TODO option.

Reviewed By: zrphercule

Differential Revision:

D14452132

fbshipit-source-id:

00ad48d40da6fb8eabf9cca36701bcf61cbe4edc

Yinghai Lu [Thu, 14 Mar 2019 21:45:27 +0000 (14:45 -0700)]

Minor improvements to ONNXIFI transform (#17964)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17964

1. Make the outputs of TensorFill ops CONSTANT during shape inference

2. Add an option to avoid adding BatchAdjust ops

3. Add an option to avoid lowering subgraphs smaller than a size limit

Reviewed By: hyuen

Differential Revision:

D14360903

fbshipit-source-id:

b3c5966b44e7cd0d56428acd6cc97f529b36b171

Junjie Bai [Thu, 14 Mar 2019 21:35:31 +0000 (14:35 -0700)]

Run fp16 resnext101 training in bench script (#17963)

Summary:

cc xw285cornell

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17963

Differential Revision:

D14453445

Pulled By: bddppq

fbshipit-source-id:

7ca0e0c33ae89d4d4cf6ddba321daf4d6b2d5ed6

Jie [Thu, 14 Mar 2019 21:01:45 +0000 (14:01 -0700)]

Tensor Iterator loop unrolling (#17667)

Summary:

Modified the Tensor Iterator GPU reduction kernel.

Create multiple accumulators during the thread reduction. This removes the data dependency between unrolled loop iterations and exposes instruction-level parallelism, which benefits latency-bound kernels (e.g. Welford, used by `torch.std`).

This approach increases register usage, so the unrolling factors need to be tuned to prevent register spilling. The current implementation tunes the unrolling factor down to 2 for Welford (a register-heavy kernel), while keeping it unchanged (4) for the rest of the reduction kernels.
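The multiple-accumulator idea can be sketched on the CPU with a plain sum (hypothetical functions; the actual change is in the CUDA reduction kernel and also covers Welford). Each accumulator forms its own dependency chain, so the unrolled additions no longer wait on one another. Reordering floating-point additions can change rounding, so the two versions may differ slightly for general inputs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single accumulator: every addition depends on the previous one,
// forming one serial dependency chain.
float sum_single(const std::vector<float>& x) {
  float acc = 0.f;
  for (std::size_t i = 0; i < x.size(); ++i) acc += x[i];
  return acc;
}

// Unrolled by 4 with 4 independent accumulators: the four chains can be in
// flight at once, at the cost of keeping more values in registers.
float sum_unrolled4(const std::vector<float>& x) {
  float acc[4] = {0.f, 0.f, 0.f, 0.f};
  const std::size_t n = x.size();
  std::size_t i = 0;
  for (; i + 4 <= n; i += 4) {
    acc[0] += x[i];
    acc[1] += x[i + 1];
    acc[2] += x[i + 2];
    acc[3] += x[i + 3];
  }
  for (; i < n; ++i) acc[0] += x[i];  // leftover tail elements
  // Combine the partial results once at the end.
  return (acc[0] + acc[1]) + (acc[2] + acc[3]);
}
```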

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17667

Differential Revision:

D14368325

Pulled By: umanwizard

fbshipit-source-id:

9d64c0dccabdb1b7c3922a6557224af704a1974e

Xiaomeng Yang [Thu, 14 Mar 2019 20:48:13 +0000 (13:48 -0700)]

Temp fix for TileOp backward compatibility (#18026)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18026

Temp fix for TileOp backward compatibility

Reviewed By: kittipatv

Differential Revision:

D14463672

fbshipit-source-id:

1f3ec550245cb63f1bc4f26196b9334cfe5d0705

Michael Suo [Thu, 14 Mar 2019 19:16:30 +0000 (12:16 -0700)]

add a dump method to TreeViews (#17965)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17965

ghimport-source-id:

0d3d6340141d8413ce524a8d8ed0d308854ee7ef

Stack:

* (to be filled)

Also added it to the Python bindings, not for any particular reason other than that otherwise the function gets elided (even in debug mode!) and thus can't be called from the debugger.

Reviewed By: eellison

Differential Revision:

D14442654

fbshipit-source-id:

2868bb32ccb80b04f9483883faa702f63a7948bf

Duc Ngo [Thu, 14 Mar 2019 19:16:12 +0000 (12:16 -0700)]

JIT IR - Make valueMapPtr optional in convertNetDefToIR (#17942)

Summary:

Make valueMapPtr optional in convertNetDefToIR, and add tests

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17942

Differential Revision:

D14429687

Pulled By: duc0

fbshipit-source-id:

3a5a72bbb5acc1bfd7144a987688c599016fbf7a

Yanghan Wang [Thu, 14 Mar 2019 18:49:31 +0000 (11:49 -0700)]

register RoIAlign with C10

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17889

Reviewed By: smessmer

Differential Revision:

D14411630

fbshipit-source-id:

c3b7941d725ae2c78e8d79f52a7983db92b75807

Wanchao Liang [Thu, 14 Mar 2019 18:17:18 +0000 (11:17 -0700)]

add tanh to AD and fix layernorm schema

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17816

Differential Revision:

D14453048

Pulled By: wanchaol

fbshipit-source-id:

45815db964a4d9ee85d8933e335b47f215e3c467

peter [Thu, 14 Mar 2019 17:05:53 +0000 (10:05 -0700)]

Add magma debug version for Windows

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18008

Differential Revision:

D14455117

Pulled By: soumith

fbshipit-source-id:

29d9a2e0b36d72bece0bb1870bbdc740c4d1f9d6

peter [Thu, 14 Mar 2019 17:05:04 +0000 (10:05 -0700)]

Simplify env creation when running Windows tests (#17916)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/13465.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17916

Differential Revision:

D14460589

Pulled By: soumith

fbshipit-source-id:

e952d08648b833cfd4a8551355ecd68045fea25c

Edward Yang [Thu, 14 Mar 2019 16:53:05 +0000 (09:53 -0700)]

Fix lint in test_multiprocessing.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18016

Reviewed By: eellison

Differential Revision:

D14458177

fbshipit-source-id:

f17b3e06223ab399e9ce24be6988effe04dad636

Gregory Chanan [Thu, 14 Mar 2019 16:21:02 +0000 (09:21 -0700)]

Remove ArgcountSortPlugin, which doesn't seem to be used.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17977

Reviewed By: ezyang

Differential Revision:

D14438842

Pulled By: gchanan

fbshipit-source-id:

9b1746880fd7e3bd2b76a2559face34940ce7570

Edward Yang [Thu, 14 Mar 2019 15:52:55 +0000 (08:52 -0700)]

Fix lint in test_nn.py (#18006)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18006

ghimport-source-id:

e267ece1ac03e0d17e01dddf4a77f52421859435

Stack:

* **#18006 Fix lint in test_nn.py**

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Reviewed By: eellison

Differential Revision:

D14458108

fbshipit-source-id:

18ee6199447efed55a922cff5b3ad940a21c0536

Sebastian Messmer [Thu, 14 Mar 2019 15:50:46 +0000 (08:50 -0700)]

Simplify macros for exposing c10 ops to c2 (#17781)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17781

The wrapper for calling a c10 operator from caffe2 is now based on a runtime FunctionSchema instead of compile time information. This way, it can be created for any c10 operator schema with just one invocation to a simple macro instead of having to define arguments and more as compile time structures.

Furthermore, previously, the wrapper assumed there's an argument present for preallocated outputs, but that was only true for caffe2 operators exported to c10. So the wrapper only worked correctly for calling caffe2->c10->caffe2. Now with the new implementation, it works for any c10 operator.

Also, binary size for this should be much smaller.

Reviewed By: ezyang

Differential Revision:

D14375054

fbshipit-source-id:

bac7ab8e63929e6e2a148eacac41ed092009aa86

Sebastian Messmer [Thu, 14 Mar 2019 15:50:45 +0000 (08:50 -0700)]

Improve caffe2 operator wrapping (#17743)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17743

- caffe2::Operator::SetOutputTensor() can now be used in operators that are called from c10/PyTorch.

- If the operator uses SetOutputTensor() instead of XOutput(), the wrapper doesn't preallocate an empty tensor for the operator anymore. Only outputs accessed in XOutput() will get an output tensor preallocated.

- Remove the copying of the vector of output tensors into a vector of pointers to output tensors.

- Preallocated outputs are now passed in as one TensorList argument on the stack. This TensorList argument has a well-defined name so other wrappers (i.e. the wrapper calling from c2 into c10) can recognize and use it.

- Macros for exporting caffe2 operators to c10 are simplified. Instead of having `c10_op_handle_for_c2_op`, we now pass in the operator handle as a template argument.

- `SetOutputTensor` and `OutputTensorOrUndefined` now work with operators exported to c10

Reviewed By: ezyang

Differential Revision:

D14362434

fbshipit-source-id:

44a5e717204f21ea8e9728437429d9b84906f9f5

Gregory Chanan [Thu, 14 Mar 2019 15:00:51 +0000 (08:00 -0700)]

Remove unused KwargsPlugin.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17980

Reviewed By: ezyang

Differential Revision:

D14438877

Pulled By: gchanan

fbshipit-source-id:

f93764b00999effb5c8f852f8eda3a6da32dc767

vaeksare [Thu, 14 Mar 2019 14:19:49 +0000 (07:19 -0700)]

Disable btri tests on Windows if MAGMA is not found (#17989)

Summary:

Fixes #17988

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17989

Reviewed By: ezyang

Differential Revision:

D14454571

Pulled By: soumith

fbshipit-source-id:

fc39a807a597d3574f4ca4e22cea12194e4693c0

bhushan [Thu, 14 Mar 2019 13:29:03 +0000 (06:29 -0700)]

Report convolution size mismatch (#17436)

Summary:

1. Kernel size is larger than input

2. Expected output size is less than zero
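A sketch of the first check, using a hypothetical `conv_output_size` helper and the standard output-size formula; ATen's actual check and error message may differ. The explicit check matters because C++ integer division truncates toward zero: with `in = 3`, `kernel = 4`, `stride = 2` and no padding, the formula alone would give `(3 - 4) / 2 + 1 = 1` instead of an error.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>
#include <string>

// Hypothetical helper (not the ATen code): output length of a convolution
// along one spatial dimension. Assumes stride >= 1 and dilation >= 1.
//   out = (in + 2 * pad - dilation * (kernel - 1) - 1) / stride + 1
int64_t conv_output_size(int64_t in, int64_t kernel, int64_t pad,
                         int64_t stride, int64_t dilation) {
  const int64_t padded_in = in + 2 * pad;
  const int64_t effective_kernel = dilation * (kernel - 1) + 1;
  // Reject a (dilated) kernel that is larger than the padded input.
  if (effective_kernel > padded_in) {
    throw std::invalid_argument(
        "kernel size (" + std::to_string(effective_kernel) +
        ") can't be greater than padded input size (" +
        std::to_string(padded_in) + ")");
  }
  return (padded_in - effective_kernel) / stride + 1;
}
```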

Test case added:

- invalid_conv1d

- Relevant test cases for conv2d and conv3d exist

Fixes #17247

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17436

Reviewed By: mrshenli

Differential Revision:

D14354272

Pulled By: fmassa

fbshipit-source-id:

94b98621aa03b1f60d151ef9399ed3da55d41b42

Jongsoo Park [Thu, 14 Mar 2019 10:12:08 +0000 (03:12 -0700)]

make momentum non negative in adagrad test (#18009)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18009

momentum should be initialized with non-negative values
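Assuming "momentum" here is Adagrad's running sum of squared gradients (the commit does not say), a minimal sketch shows why negative initial values are a problem: the update divides by its square root, which is NaN for negative input. `adagrad_step` is hypothetical, and the caffe2 op's learning-rate sign convention may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical dense Adagrad step. `moment` accumulates squared gradients, so
// in real training it never goes negative unless initialized that way.
void adagrad_step(std::vector<float>& param, std::vector<float>& moment,
                  const std::vector<float>& grad, float lr, float epsilon) {
  for (std::size_t i = 0; i < param.size(); ++i) {
    moment[i] += grad[i] * grad[i];
    // sqrt of a negative moment yields NaN, which then poisons the parameter.
    param[i] -= lr * grad[i] / (std::sqrt(moment[i]) + epsilon);
  }
}
```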

Reviewed By: hyuen

Differential Revision:

D14450841

fbshipit-source-id:

5bbbd11645db9e6f2dc42b26a00ff3caf378c59f

Lu Fang [Thu, 14 Mar 2019 00:26:01 +0000 (17:26 -0700)]

Fix the CI

Summary: The CI run for https://github.com/pytorch/pytorch/pull/17995 has verified that this should fix the CI.

Reviewed By: bddppq

Differential Revision:

D14447674

fbshipit-source-id:

50085db9ae7421b5be216ed0a2216234babfdf6c

Junjie Bai [Wed, 13 Mar 2019 23:01:45 +0000 (16:01 -0700)]

Fix missing return in HIPStreamMasqueradingAsCUDA::operator<< (#17961)

Summary:

```

In file included from /var/lib/jenkins/workspace/aten/src/ATen/native/hip/BatchLinearAlgebra.hip:3:

In file included from /var/lib/jenkins/workspace/aten/src/ATen/hip/HIPContext.h:5:

/var/lib/jenkins/workspace/aten/src/ATen/hip/impl/HIPStreamMasqueradingAsCUDA.h:107:1: warning: control reaches end of non-void function [-Wreturn-type]

}

^

1 warning generated.

```
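The warning means `operator<<` can fall off the end without returning the stream, which is undefined behavior and breaks chaining such as `os << a << b`. A minimal sketch with a hypothetical `FakeStream` type (not the real HIP wrapper):

```cpp
#include <cassert>
#include <ostream>
#include <sstream>

// Hypothetical stand-in for a stream wrapper type.
struct FakeStream {
  int id;
};

// operator<< must return the stream so calls can be chained. Without the
// return statement, control reaches the end of a non-void function.
std::ostream& operator<<(std::ostream& os, const FakeStream& s) {
  os << "FakeStream(" << s.id << ")";
  return os;
}
```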

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17961

Reviewed By: houseroad

Differential Revision:

D14436421

Pulled By: bddppq

fbshipit-source-id:

962665602178699d7c7b55f4ca7ff1eb72ee0349

Gregory Chanan [Wed, 13 Mar 2019 22:07:49 +0000 (15:07 -0700)]

Remove AssertNDim, which doesn't seem to be used.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17978

Reviewed By: colesbury

Differential Revision:

D14438845

Pulled By: gchanan

fbshipit-source-id:

106650c37fb1885201eaef27cb6d86b49ef27976

Gregory Chanan [Wed, 13 Mar 2019 20:28:08 +0000 (13:28 -0700)]

Remove unused BoolOption.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17979

Reviewed By: zou3519

Differential Revision:

D14438876

Pulled By: gchanan

fbshipit-source-id:

a6aeab0261ce6926ed82a81edee4564a8dd341ed

Elliot Waite [Wed, 13 Mar 2019 16:18:34 +0000 (09:18 -0700)]

Fix some typos in distributed.py.

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17959

Differential Revision:

D14437347

Pulled By: soumith

fbshipit-source-id:

4c33571f56e9da687666516a310f91924cddd4d9

peter [Wed, 13 Mar 2019 16:07:57 +0000 (09:07 -0700)]

Fix Windows test CI

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17954

Differential Revision:

D14437473

Pulled By: soumith

fbshipit-source-id:

f0d79ff0c5d735f822be3f42bbca91c1928dacaf

Edward Yang [Wed, 13 Mar 2019 15:35:26 +0000 (08:35 -0700)]

Fix lint in test_utils.py (#17944)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17944

ghimport-source-id:

5b45086428b5a36e737882c78f285141121fd1bc

Stack:

* **#17944 Fix lint in test_utils.py**

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision:

D14430132

fbshipit-source-id:

b00de7b4c685645ad5a4dc8c5fe6ce7e1893a3eb

Guanheng Zhang [Wed, 13 Mar 2019 15:22:56 +0000 (08:22 -0700)]

Speed up gemm by reordering the for loops (#17730)

Summary:

Optimize the order of the "for" loops.

Note: For the "transa = true" cases, the order of the "for" loops was already optimized in the original code. Therefore, no significant improvement is observed in those cases (i.e. "transa && transb" and "transa && !transb").

mode/opt (i.e. static library)

//////////////////////////////////////////////////////////////////////////////

transa && transb

after:

loops: 2229 x: 128 y: 128 z: 128 time: 2243ns => acceleration multiplier: 0.90

loops: 124 x: 128 y: 1024 z: 128 time: 40381ns => acceleration multiplier: 0.97

loops: 121 x: 1024 y: 128 z: 128 time: 41651ns => acceleration multiplier: 0.96

loops: 15 x: 1024 y: 1024 z: 128 time: 333771ns => acceleration multiplier: 0.98

loops: 4610 x: 128 y: 128 z: 64 time: 1084ns => acceleration multiplier: 0.95

loops: 252 x: 128 y: 1024 z: 64 time: 19860ns => acceleration multiplier: 0.98

loops: 248 x: 1024 y: 128 z: 64 time: 20232ns => acceleration multiplier: 0.98

loops: 30 x: 1024 y: 1024 z: 64 time: 167338ns => acceleration multiplier: 0.99

before:

loops: 2468 x: 128 y: 128 z: 128 time: 2026ns

loops: 128 x: 128 y: 1024 z: 128 time: 39338ns

loops: 126 x: 1024 y: 128 z: 128 time: 39930ns

loops: 16 x: 1024 y: 1024 z: 128 time: 327549ns

loops: 4840 x: 128 y: 128 z: 64 time: 1033ns

loops: 258 x: 128 y: 1024 z: 64 time: 19441ns

loops: 252 x: 1024 y: 128 z: 64 time: 19854ns

loops: 31 x: 1024 y: 1024 z: 64 time: 166254ns

//////////////////////////////////////////////////////////////////////////////

transa && !transb

after:

loops: 4880 x: 128 y: 128 z: 128 time: 1024ns => acceleration multiplier: 0.98

loops: 638 x: 128 y: 1024 z: 128 time: 7839ns => acceleration multiplier: 1.04

loops: 605 x: 1024 y: 128 z: 128 time: 8276ns => acceleration multiplier: 1.01

loops: 77 x: 1024 y: 1024 z: 128 time: 65713ns => acceleration multiplier: 1.00

loops: 9935 x: 128 y: 128 z: 64 time: 503ns => acceleration multiplier: 1.00

loops: 1252 x: 128 y: 1024 z: 64 time: 3994ns => acceleration multiplier: 1.00

loops: 1183 x: 1024 y: 128 z: 64 time: 4226ns => acceleration multiplier: 0.98

loops: 153 x: 1024 y: 1024 z: 64 time: 32766ns => acceleration multiplier: 0.99

before:

loops: 4985 x: 128 y: 128 z: 128 time: 1003ns

loops: 615 x: 128 y: 1024 z: 128 time: 8140ns

loops: 599 x: 1024 y: 128 z: 128 time: 8357ns

loops: 76 x: 1024 y: 1024 z: 128 time: 65934ns

loops: 9897 x: 128 y: 128 z: 64 time: 505ns

loops: 1248 x: 128 y: 1024 z: 64 time: 4008ns

loops: 1203 x: 1024 y: 128 z: 64 time: 4159ns

loops: 154 x: 1024 y: 1024 z: 64 time: 32499ns

//////////////////////////////////////////////////////////////////////////////

!transa && transb

after:

loops: 3919 x: 128 y: 128 z: 128 time: 1276ns => acceleration multiplier: 2.97

loops: 497 x: 128 y: 1024 z: 128 time: 10069ns => acceleration multiplier: 7.85

loops: 449 x: 1024 y: 128 z: 128 time: 11145ns => acceleration multiplier: 4.77

loops: 57 x: 1024 y: 1024 z: 128 time: 88595ns => acceleration multiplier: 7.12

loops: 7575 x: 128 y: 128 z: 64 time: 660ns => acceleration multiplier: 3.00

loops: 967 x: 128 y: 1024 z: 64 time: 5173ns => acceleration multiplier: 7.66

loops: 877 x: 1024 y: 128 z: 64 time: 5702ns => acceleration multiplier: 4.76

loops: 111 x: 1024 y: 1024 z: 64 time: 45232ns => acceleration multiplier: 7.03

before:

loops: 1320 x: 128 y: 128 z: 128 time: 3789ns

loops: 64 x: 128 y: 1024 z: 128 time: 79061ns

loops: 95 x: 1024 y: 128 z: 128 time: 53107ns

loops: 8 x: 1024 y: 1024 z: 128 time: 631161ns

loops: 2521 x: 128 y: 128 z: 64 time: 1983ns

loops: 127 x: 128 y: 1024 z: 64 time: 39604ns

loops: 185 x: 1024 y: 128 z: 64 time: 27128ns

loops: 16 x: 1024 y: 1024 z: 64 time: 318155ns

//////////////////////////////////////////////////////////////////////////////

!transa && !transb

after:

loops: 3895 x: 128 y: 128 z: 128 time: 1283ns => acceleration multiplier: 1.73

loops: 393 x: 128 y: 1024 z: 128 time: 12746ns => acceleration multiplier: 3.36

loops: 411 x: 1024 y: 128 z: 128 time: 12170ns => acceleration multiplier: 1.93

loops: 46 x: 1024 y: 1024 z: 128 time: 110116ns => acceleration multiplier: 3.17

loops: 7404 x: 128 y: 128 z: 64 time: 675ns => acceleration multiplier: 1.58

loops: 636 x: 128 y: 1024 z: 64 time: 7872ns => acceleration multiplier: 2.70

loops: 724 x: 1024 y: 128 z: 64 time: 6911ns => acceleration multiplier: 1.32

loops: 73 x: 1024 y: 1024 z: 64 time: 68502ns => acceleration multiplier: 2.49

before:

loops: 2253 x: 128 y: 128 z: 128 time: 2219ns

loops: 117 x: 128 y: 1024 z: 128 time: 42788ns

loops: 214 x: 1024 y: 128 z: 128 time: 23465ns

loops: 15 x: 1024 y: 1024 z: 128 time: 349076ns

loops: 4694 x: 128 y: 128 z: 64 time: 1065ns

loops: 236 x: 128 y: 1024 z: 64 time: 21251ns

loops: 549 x: 1024 y: 128 z: 64 time: 9108ns

loops: 30 x: 1024 y: 1024 z: 64 time: 170799ns
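The commit does not show the loop code itself. As a sketch only, assuming column-major (BLAS-style) storage and the non-transposed case, the two functions below compute the same `C = A * B` with different loop orders; `gemm_ijl` and `gemm_jli` are hypothetical names. In the reordered version the innermost loop walks contiguous columns of `A` and `C`, which is generally friendlier to the cache than striding across a row of `A`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Column-major storage: element (i, j) of an m-by-n matrix is at i + j * m.
// Computes C = A * B with A m-by-k, B k-by-n, C m-by-n.

// Dot-product order: the innermost loop jumps m elements per step in A.
void gemm_ijl(std::size_t m, std::size_t n, std::size_t k,
              const std::vector<float>& a, const std::vector<float>& b,
              std::vector<float>& c) {
  for (std::size_t j = 0; j < n; ++j)
    for (std::size_t i = 0; i < m; ++i) {
      float sum = 0.f;
      for (std::size_t l = 0; l < k; ++l) sum += a[i + l * m] * b[l + j * k];
      c[i + j * m] = sum;
    }
}

// Reordered: the innermost loop walks down a column of A and a column of C,
// both contiguous in memory, reusing one element of B.
void gemm_jli(std::size_t m, std::size_t n, std::size_t k,
              const std::vector<float>& a, const std::vector<float>& b,
              std::vector<float>& c) {
  for (std::size_t j = 0; j < n; ++j) {
    for (std::size_t i = 0; i < m; ++i) c[i + j * m] = 0.f;
    for (std::size_t l = 0; l < k; ++l) {
      const float b_lj = b[l + j * k];
      for (std::size_t i = 0; i < m; ++i) c[i + j * m] += a[i + l * m] * b_lj;
    }
  }
}
```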

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17730

Differential Revision:

D14325149

Pulled By: zhangguanheng66

fbshipit-source-id:

a7a5a83890fdf99fee6eb87a3a5060b7b6bd862f

livc [Wed, 13 Mar 2019 15:06:56 +0000 (08:06 -0700)]

fix punctuation

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17973

Differential Revision:

D14438725

Pulled By: zou3519

fbshipit-source-id:

30a5485b508b4ae028057e0b66a8abb2b163d66b

Thomas Viehmann [Wed, 13 Mar 2019 10:44:16 +0000 (03:44 -0700)]

fixes for AVX detection (#17915)

Summary:

Our AVX2 routines use functions such as _mm256_extract_epi64 that do not exist on 32-bit systems, even when those systems have AVX2. This disables AVX2 when _mm256_extract_epi64 does not exist.

This fixes the "local" part of #17901 (except disabling FBGEMM), but sleef still needs to be updated and NNPACK fixed; see the bug report for further discussion.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17915

Differential Revision:

D14437338

Pulled By: soumith

fbshipit-source-id:

d4ef7e0801b5d1222a855a38ec207dd88b4680da

Thomas Viehmann [Wed, 13 Mar 2019 10:43:58 +0000 (03:43 -0700)]

Disable FBGEMM when building under x86 32bit (#17922)

Summary:

FBGEMM doesn't work on 32-bit x86, and prior to this patch it would generate x86_64 objects in a build that is supposed to be 32-bit x86. FBGEMM actually relies on registers not available on x86_32, so we disable it.

This takes care of one element of #17901. There are more dependencies, and a separate PR (#17915) addresses AVX detection for the code in the main repository.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17922

Differential Revision:

D14437340

Pulled By: soumith

fbshipit-source-id:

bd9fc98cf607d9b0bc28127fbbc8b04fa10eecbe

serhii-havrylov [Wed, 13 Mar 2019 10:16:40 +0000 (03:16 -0700)]

Update docs for `mark_non_differentiable` method (#17891)

Summary:

The current documentation doesn't reflect the real values of tensors during the backward pass.

This issue is mentioned in https://github.com/pytorch/pytorch/issues/12631

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17891

Differential Revision:

D14419949

Pulled By: soumith

fbshipit-source-id:

8b495628c3f017bc880f8096682cd176a53974e5

Sebastian Messmer [Wed, 13 Mar 2019 08:20:57 +0000 (01:20 -0700)]

Simplify OpKernel (#17925)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17925

There's no need for OpKernel to keep the cache creator around if we initialize the cache on construction.

This means kernel caches are now constructed when the kernel is looked up from the dispatcher, instead of being delayed until the first call.

This makes calls cheaper, because calling a kernel no longer has to check whether the cache is already initialized.

This also improves thread-safety: OpKernel is now thread-safe if the kernel is thread-safe.
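The lazy-versus-eager difference can be sketched with hypothetical `LazyKernel` and `EagerKernel` classes (not the c10 `OpKernel` API). The eager version builds its cache in the constructor, so it does not need to store the creator, and `call()` has no initialization check that two threads could race on:

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <utility>

// Hypothetical per-kernel cache; `calls` stands in for whatever state a real
// kernel would keep there.
struct KernelCache {
  int calls = 0;
};

using CacheCreator = std::function<std::unique_ptr<KernelCache>()>;

// Before (lazy): the creator is stored and every call checks the cache.
// Two threads making the first call concurrently would race on cache_.
class LazyKernel {
 public:
  explicit LazyKernel(CacheCreator creator) : creator_(std::move(creator)) {}
  int call() {
    if (!cache_) cache_ = creator_();
    return ++cache_->calls;
  }

 private:
  CacheCreator creator_;
  std::unique_ptr<KernelCache> cache_;
};

// After (eager): the cache is built once, when the kernel object is created.
class EagerKernel {
 public:
  explicit EagerKernel(const CacheCreator& creator) : cache_(creator()) {}
  int call() { return ++cache_->calls; }  // no initialization check

 private:
  std::unique_ptr<KernelCache> cache_;
};
```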

Reviewed By: ezyang

Differential Revision:

D14424907

fbshipit-source-id:

a0d09a3a560dfe78aab53d558c9ebb91b57722df

Junjie Bai [Wed, 13 Mar 2019 08:01:13 +0000 (01:01 -0700)]

Mark DispatchTable move ctor and move assignment operator as deleted (#17948)

Summary:

```

21:39:50 /var/lib/jenkins/workspace/aten/src/ATen/core/dispatch/DispatchTable.h:125:3: warning: explicitly defaulted move constructor is implicitly deleted [-Wdefaulted-function-deleted]

21:39:50 DispatchTable(DispatchTable&&) = default;

21:39:50 ^

21:39:50 /var/lib/jenkins/workspace/aten/src/ATen/core/dispatch/DispatchTable.h:212:36: note: move constructor of 'DispatchTable' is implicitly deleted because field 'kernels_' has a deleted move constructor

21:39:50 detail::ThreadsafeOperatorTable_ kernels_;

21:39:50 ^

21:39:50 /var/lib/jenkins/workspace/aten/src/ATen/core/dispatch/DispatchTable.h:105:68: note: copy constructor of 'ThreadsafeOperatorTable_' is implicitly deleted because field 'map_' has a deleted copy constructor

21:39:50 LeftRight<ska::flat_hash_map<TensorTypeId, DispatchTableEntry>> map_;

21:39:50 ^

21:39:50 /var/lib/jenkins/workspace/c10/util/LeftRight.h:152:16: note: copy constructor of 'LeftRight<ska::flat_hash_map<c10::TensorTypeId, c10::DispatchTableEntry, std::hash<c10::TensorTypeId>, std::equal_to<c10::TensorTypeId>, std::allocator<std::pair<c10::TensorTypeId, c10::DispatchTableEntry> > > >' is implicitly deleted because field '_writeMutex' has a deleted copy constructor

21:39:50 std::mutex _writeMutex;

21:39:50 ^

21:39:50 /usr/lib/gcc/x86_64-linux-gnu/5.4.0/../../../../include/c++/5.4.0/mutex:129:5: note: 'mutex' has been explicitly marked deleted here

21:39:50 mutex(const mutex&) = delete;

```
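In short, a class holding a `std::mutex` (directly or through member types such as `LeftRight`) cannot be copied or moved, so a move constructor declared `= default` is silently deleted; declaring it `= delete` says so explicitly and silences the warning. A minimal sketch with a hypothetical `Table` class:

```cpp
#include <cassert>
#include <mutex>
#include <type_traits>

// Hypothetical table guarded by a mutex; std::mutex is neither copyable
// nor movable.
class Table {
 public:
  Table() = default;
  // "= default" would compile here but produce a deleted move constructor
  // (clang: -Wdefaulted-function-deleted). "= delete" states the truth.
  Table(Table&&) = delete;
  Table& operator=(Table&&) = delete;

  void set(int v) {
    std::lock_guard<std::mutex> guard(mutex_);
    value_ = v;
  }
  int get() {
    std::lock_guard<std::mutex> guard(mutex_);
    return value_;
  }

 private:
  std::mutex mutex_;
  int value_ = 0;
};

static_assert(!std::is_move_constructible<Table>::value, "not movable");
static_assert(!std::is_copy_constructible<Table>::value, "not copyable");
```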

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17948

Reviewed By: ezyang

Differential Revision:

D14431344

Pulled By: bddppq

fbshipit-source-id:

b1c6593b73cb467a58b09a3470b8899b82564d5e

Lu Fang [Wed, 13 Mar 2019 07:49:07 +0000 (00:49 -0700)]

Add more hint in the JIT tracer (#17957)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17957

This lets developers know what action to take when the model contains a nondeterministic node.

Reviewed By: dzhulgakov

Differential Revision:

D14435923

fbshipit-source-id:

12d930185852f78c54efc8e90c51aa7c7c7faab5

Andrey Malevich [Wed, 13 Mar 2019 05:57:44 +0000 (22:57 -0700)]

Fix half-float conversion ops to handle tensors larger than 2B of params (#17952)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17952

As described in the title.
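The message gives no details. Assuming the issue was a 32-bit element index (a signed 32-bit integer tops out at `INT32_MAX`, about 2.1 billion), a sketch of the usual fix is to index with `int64_t`. `convert` is hypothetical, and `double` to `float` stands in for the half-float conversion:

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical conversion loop. With an `int` index, tensors with more than
// INT32_MAX elements cannot be addressed: the index would overflow, which is
// undefined behavior for signed integers. A 64-bit index avoids that.
template <typename From, typename To>
void convert(const From* in, To* out, int64_t n) {
  for (int64_t i = 0; i < n; ++i) {
    out[i] = static_cast<To>(in[i]);
  }
}
```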

Reviewed By: hyuen

Differential Revision:

D14435092

fbshipit-source-id:

dc614ba16ad531101d04d01aec8f1fbd534ebec5

Lu Fang [Wed, 13 Mar 2019 05:06:25 +0000 (22:06 -0700)]

Override the resolve_library_path in FBCode (#17497)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17497

The following problems have been addressed: 1) import torch.ops correctly, 2) make realpath call optional

Reviewed By: dzhulgakov

Differential Revision:

D14094358

fbshipit-source-id:

2f9a6fca656867287a7c82c465a4554384ff7323

Karl Ostmo [Wed, 13 Mar 2019 04:40:13 +0000 (21:40 -0700)]

update ccache guide (#17938)

Summary:

closes #17937

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17938

Differential Revision:

D14435791

Pulled By: kostmo

fbshipit-source-id:

b1d0db8902f78bde51150606e2a67fb9ddfe7812

Michael Suo [Wed, 13 Mar 2019 04:31:59 +0000 (21:31 -0700)]

unify cpp tests (#17947)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17947

Instead of having a gtest and a no-gtest file that you have to remember to register tests in, add a single registration point and use some macro magic to make it work for both gtest and non-gtest builds
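One common way to get a single registration point is an X-macro: the list of tests is written once and expanded differently per build. The sketch below is hypothetical (`FORALL_TESTS`, `USE_GTEST`, and the `testXxx` naming are assumptions, not necessarily what the commit uses); only the non-gtest branch is self-contained.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Single registration point: each test is listed exactly once.
#define FORALL_TESTS(_) \
  _(Addition)           \
  _(Concat)

// Test bodies, named test<Name>.
void testAddition() { assert(1 + 1 == 2); }
void testConcat() { assert(std::string("a") + "b" == "ab"); }

#if defined(USE_GTEST)
#include <gtest/gtest.h>
// gtest build: expand each entry into a TEST case.
#define GTEST_CASE(name) \
  TEST(JitTest, name) { test##name(); }
FORALL_TESTS(GTEST_CASE)
#undef GTEST_CASE
#else
// Non-gtest build: expand each entry into a call inside one runner.
inline std::vector<std::string> runAllTests() {
  std::vector<std::string> ran;
#define RUN_CASE(name) \
  test##name();        \
  ran.push_back(#name);
  FORALL_TESTS(RUN_CASE)
#undef RUN_CASE
  return ran;
}
#endif
```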

Reviewed By: eellison

Differential Revision:

D14431302

fbshipit-source-id:

e1abac135992577a943eaa7abcc81a6ed31fa6e5

svcscm [Wed, 13 Mar 2019 03:28:59 +0000 (20:28 -0700)]

Updating submodules

Reviewed By: zpao

fbshipit-source-id:

7d454d0f58898741f293b356dfc10d7fc31fd55c

Duc Ngo [Wed, 13 Mar 2019 03:05:36 +0000 (20:05 -0700)]

Remove sinkMaxPool transformation (#17694)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17694

Remove sinkMaxPool transformation as it's unused

Differential Revision:

D14328624

fbshipit-source-id:

bd245403b756157120faa61a0f9253c15120e7a8

Alexey Kozhevnikov [Wed, 13 Mar 2019 02:48:11 +0000 (19:48 -0700)]

Fix Windows build (#17917)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17917

D14375995 introduced instantiation of the following templates with `bool` type (more specifically `To` is `int64_t`, `From` is `bool`):

```
template <typename To, typename From>
typename std::enable_if<std::is_integral<From>::value, bool>::type overflows(
    From f) {
  using limit = std::numeric_limits<typename scalar_value_type<To>::type>;
  if (!limit::is_signed && std::numeric_limits<From>::is_signed) {
    // allow for negative numbers to wrap using two's complement arithmetic.
    // For example, with uint8, this allows for `a - b` to be treated as
    // `a + 255 * b`.
    return f > limit::max() ||
        (f < 0 && -static_cast<uint64_t>(f) > limit::max());
  } else {
    return f < limit::lowest() || f > limit::max();
  }
}

template <typename To, typename From>
typename std::enable_if<std::is_floating_point<From>::value, bool>::type
overflows(From f) {
  using limit = std::numeric_limits<typename scalar_value_type<To>::type>;
  if (limit::has_infinity && std::isinf(static_cast<double>(f))) {
    return false;
  }
  if (!limit::has_quiet_NaN && (f != f)) {
    return true;
  }
  return f < limit::lowest() || f > limit::max();
}
```

MSVC emits warning C4804, and because "treat warnings as errors" is enabled, the build fails on Windows. This disables that warning for those two templates.
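Assuming the fix wraps the template definitions in MSVC's `#pragma warning(push)`, `disable`, and `pop` (the commit message does not show it), a self-contained sketch follows. `overflows_simple` is a hypothetical, simplified stand-in for the integral template above, and the pragmas are guarded by `_MSC_VER` so other compilers skip them.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <type_traits>

#ifdef _MSC_VER
#pragma warning(push)
// C4804: unsafe use of type 'bool' in operation.
#pragma warning(disable : 4804)
#endif

// Hypothetical simplified overflow check. Instantiating it with From = bool
// compares a bool against integer limits, the kind of expression MSVC may flag.
template <typename To, typename From>
typename std::enable_if<std::is_integral<From>::value, bool>::type
overflows_simple(From f) {
  using limit = std::numeric_limits<To>;
  return f < limit::lowest() || f > limit::max();
}

#ifdef _MSC_VER
#pragma warning(pop)
#endif
```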

Reviewed By: mingzhe09088

Differential Revision:

D14421157

fbshipit-source-id:

e72ba34406628c84da48518b32a46f851819bad1

Jongsoo Park [Wed, 13 Mar 2019 01:14:50 +0000 (18:14 -0700)]

fix overly restrictive assertion (#17939)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17939

Instead of just asserting min <= 0 and max >= 0, we adjust the histogram to include 0 in its range.

We need to include 0 in the range during norm error minimization to correctly represent our quantization method that includes 0.
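A minimal sketch of the range adjustment, with a hypothetical `Range` type. It only widens the bounds and ignores how the histogram's bin counts would be rebinned:

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical histogram range.
struct Range {
  float min;
  float max;
};

// Instead of asserting min <= 0 && max >= 0, widen the range so that 0 is
// always inside it, matching a quantization scheme that represents 0 exactly.
Range include_zero(Range r) {
  return Range{std::min(r.min, 0.0f), std::max(r.max, 0.0f)};
}
```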

Reviewed By: csummersea

Differential Revision:

D14428732

fbshipit-source-id:

6669a9d2c7d409ec3b31aee0afe48071986b9b71

Owen Anderson [Wed, 13 Mar 2019 01:00:23 +0000 (18:00 -0700)]

Enable threadpool threads to greedily acquire new tasks if available. (#17808)

Summary:

This improves locality and affinity by preferentially keeping work on the same threads instead of starting it on new ones, and more generally reduces contention on the threadpool lock.
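As an illustration of the policy only (not the PyTorch threadpool code), a hypothetical `GreedyPool` worker keeps draining queued tasks on its own thread and only goes back to sleep on the condition variable when the queue is empty:

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical minimal pool, not the PyTorch ThreadPool.
class GreedyPool {
 public:
  explicit GreedyPool(std::size_t num_threads) {
    for (std::size_t i = 0; i < num_threads; ++i) {
      workers_.emplace_back([this] { workerLoop(); });
    }
  }

  ~GreedyPool() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      stop_ = true;
    }
    cv_.notify_all();
    for (std::thread& t : workers_) t.join();
  }

  void submit(std::function<void()> task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      tasks_.push(std::move(task));
    }
    cv_.notify_one();
  }

 private:
  void workerLoop() {
    std::unique_lock<std::mutex> lock(mutex_);
    while (true) {
      // Sleep only when nothing is queued.
      cv_.wait(lock, [this] { return stop_ || !tasks_.empty(); });
      if (tasks_.empty()) return;  // stop requested and queue drained
      // Greedy part: after each task, take the next queued task on this
      // same thread before going back to sleep.
      while (!tasks_.empty()) {
        std::function<void()> task = std::move(tasks_.front());
        tasks_.pop();
        lock.unlock();  // run the task without holding the pool lock
        task();
        lock.lock();
      }
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::queue<std::function<void()>> tasks_;
  bool stop_ = false;
  std::vector<std::thread> workers_;
};
```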

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17808

Differential Revision:

D14391282

Pulled By: resistor

fbshipit-source-id:

3aec81656a50460a725aa4187c61864295d4f46e