Sebastian Messmer [Thu, 18 Apr 2019 07:57:44 +0000 (00:57 -0700)]

String-based schemas in op registration API (#19283)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19283

Now that the function schema parser is available in ATen/core, we can use it from the operator registration API to register ops based on string schemas.

This does not allow registering operators based on only the name yet - the full schema string needs to be defined.

A diff stacked on top will add name based registration.

Reviewed By: dzhulgakov

Differential Revision:

D14931919

fbshipit-source-id:

71e490dc65be67d513adc63170dc3f1ce78396cc

Sebastian Messmer [Thu, 18 Apr 2019 07:57:44 +0000 (00:57 -0700)]

Move function schema parser to ATen/core build target (#19282)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19282

This is largely a hack because we need to use the function schema parser from ATen/core

but aren't clear yet on how the final software architecture should look like.

- Add function schema parser files from jit to ATen/core build target.

- Also move ATen/core build target one directory up to allow this.

We only change the build targets and don't move the files yet because this is likely

not the final build set up and we want to avoid repeated interruptions

for other developers. cc zdevito

Reviewed By: dzhulgakov

Differential Revision:

D14931922

fbshipit-source-id:

26462e2e7aec9e0964706138edd3d87a83b964e3

Lu Fang [Thu, 18 Apr 2019 07:33:00 +0000 (00:33 -0700)]

update of fbcode/onnx to

ad7313470a9119d7e1afda7edf1d654497ee80ab (#19339)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19339

Previous import was

971311db58f2fa8306d15e1458b5fd47dbc8d11c

Included changes:

- **[

ad731347](https://github.com/onnx/onnx/commit/

ad731347)**: Fix shape inference for matmul (#1941) <Bowen Bao>

- **[

3717dc61](https://github.com/onnx/onnx/commit/

3717dc61)**: Shape Inference Tests for QOps (#1929) <Ashwini Khade>

- **[

a80c3371](https://github.com/onnx/onnx/commit/

a80c3371)**: Prevent unused variables from generating warnings across all platforms. (#1930) <Pranav Sharma>

- **[

be9255c1](https://github.com/onnx/onnx/commit/

be9255c1)**: add title (#1919) <Prasanth Pulavarthi>

- **[

7a112a6f](https://github.com/onnx/onnx/commit/

7a112a6f)**: add quantization ops in onnx (#1908) <Ashwini Khade>

- **[

6de42d7d](https://github.com/onnx/onnx/commit/

6de42d7d)**: Create working-groups.md (#1916) <Prasanth Pulavarthi>

Reviewed By: yinghai

Differential Revision:

D14969962

fbshipit-source-id:

5ec64ef7aee5161666ed0c03e201be0ae20826f9

Roy Li [Thu, 18 Apr 2019 07:18:35 +0000 (00:18 -0700)]

Remove copy and copy_ special case on Type (#18972)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18972

ghimport-source-id:

b5d3012b00530145fa24ab0cab693a7e80cb5989

Differential Revision:

D14816530

Pulled By: li-roy

fbshipit-source-id:

9c7a166abb22d2cd1f81f352e44d9df1541b1774

Spandan Tiwari [Thu, 18 Apr 2019 07:06:59 +0000 (00:06 -0700)]

Add constant folding to ONNX graph during export (Resubmission) (#18698)

Summary:

Rewritten version of https://github.com/pytorch/pytorch/pull/17771 using graph C++ APIs.

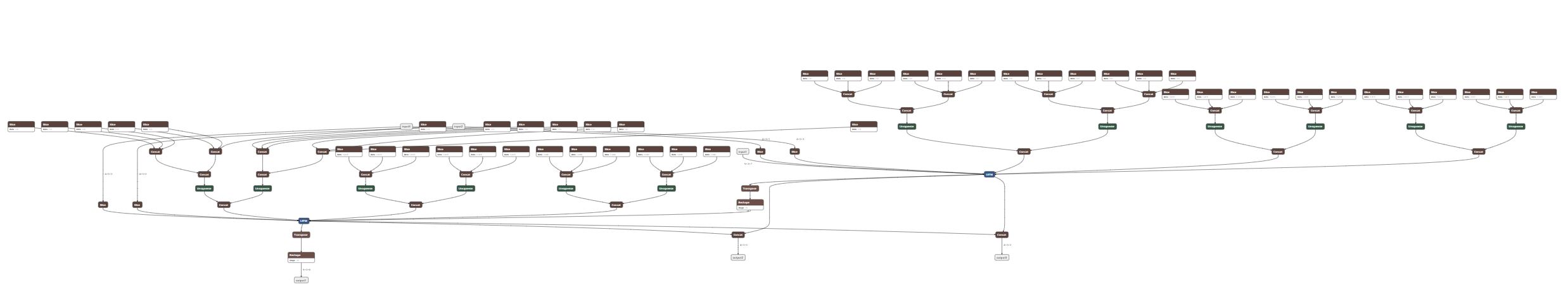

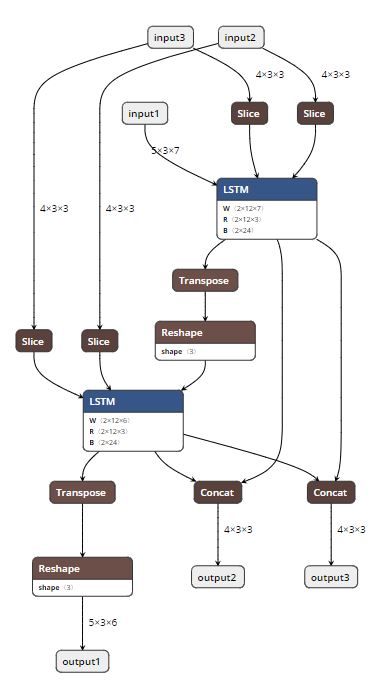

This PR adds the ability to do constant folding on ONNX graphs during PT->ONNX export. This is done mainly to optimize the graph and make it leaner. The two attached snapshots show a multiple-node LSTM model before and after constant folding.

A couple of notes:

1. Constant folding is by default turned off for now. The goal is to turn it on by default once we have validated it through all the tests.

2. Support for folding in nested blocks is not in place, but will be added in the future, if needed.

**Original Model:**

**Constant-folded model:**

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18698

Differential Revision:

D14889768

Pulled By: houseroad

fbshipit-source-id:

b6616b1011de9668f7c4317c880cb8ad4c7b631a

Roy Li [Thu, 18 Apr 2019 06:52:44 +0000 (23:52 -0700)]

Remove usages of TypeID (#19183)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19183

ghimport-source-id:

9af190b072523459fa61e5e79419b88ac8586a4d

Differential Revision:

D14909203

Pulled By: li-roy

fbshipit-source-id:

d716179c484aebfe3ec30087c5ecd4a11848ffc3

Sebastian Messmer [Thu, 18 Apr 2019 06:41:22 +0000 (23:41 -0700)]

Fixing function schema parser for Android (#19281)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19281

String<->Number conversions aren't available in the STL used in our Android environment.

This diff adds workarounds for that so that the function schema parser can be compiled for android

Reviewed By: dzhulgakov

Differential Revision:

D14931649

fbshipit-source-id:

d5d386f2c474d3742ed89e52dff751513142efad

Sebastian Messmer [Thu, 18 Apr 2019 06:41:21 +0000 (23:41 -0700)]

Split function schema parser from operator (#19280)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19280

We want to use the function schema parser from ATen/core, but with as little dependencies as possible.

This diff moves the function schema parser into its own file and removes some of its dependencies.

Reviewed By: dzhulgakov

Differential Revision:

D14931651

fbshipit-source-id:

c2d787202795ff034da8cba255b9f007e69b4aea

Ailing Zhang [Thu, 18 Apr 2019 06:38:26 +0000 (23:38 -0700)]

fix hub doc format

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19396

Differential Revision:

D14993859

Pulled By: ailzhang

fbshipit-source-id:

bdf94e54ec35477cfc34019752233452d84b6288

Mikhail Zolotukhin [Thu, 18 Apr 2019 05:05:49 +0000 (22:05 -0700)]

Clang-format torch/csrc/jit/passes/quantization.cpp. (#19385)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19385

ghimport-source-id:

67f808db7dcbcb6980eac79a58416697278999b0

Differential Revision:

D14991917

Pulled By: ZolotukhinM

fbshipit-source-id:

6c2e57265cc9f0711752582a04d5a070482ed1e6

Shen Li [Thu, 18 Apr 2019 04:18:49 +0000 (21:18 -0700)]

Allow DDP to wrap multi-GPU modules (#19271)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19271

allow DDP to take multi-gpu models

Reviewed By: pietern

Differential Revision:

D14822375

fbshipit-source-id:

1eebfaa33371766d3129f0ac6f63a573332b2f1c

Jiyan Yang [Thu, 18 Apr 2019 04:07:42 +0000 (21:07 -0700)]

Add validator for optimizers when parameters are shared

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18497

Reviewed By: kennyhorror

Differential Revision:

D14614738

fbshipit-source-id:

beddd8349827dcc8ccae36f21e5d29627056afcd

Ailing Zhang [Thu, 18 Apr 2019 04:01:36 +0000 (21:01 -0700)]

hub minor fixes (#19247)

Summary:

A few improvements while doing bert model

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19247

Differential Revision:

D14989345

Pulled By: ailzhang

fbshipit-source-id:

f4846813f62b6d497fbe74e8552c9714bd8dc3c7

Elias Ellison [Thu, 18 Apr 2019 02:52:16 +0000 (19:52 -0700)]

fix wrong schema (#19370)

Summary:

Op was improperly schematized previously. Evidently checkScript does not test if the outputs are the same type.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19370

Differential Revision:

D14985159

Pulled By: eellison

fbshipit-source-id:

feb60552afa2a6956d71f64801f15e5fe19c3a91

Mikhail Zolotukhin [Thu, 18 Apr 2019 01:34:50 +0000 (18:34 -0700)]

Fix printing format in examples in jit/README.md. (#19323)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19323

ghimport-source-id:

74a01917de70c9d59099cf601b24f3cb484ab7be

Differential Revision:

D14990100

Pulled By: ZolotukhinM

fbshipit-source-id:

87ede08c8ca8f3027b03501fbce8598379e8b96c

Eric Faust [Wed, 17 Apr 2019 23:59:34 +0000 (16:59 -0700)]

Allow for single-line deletions in clang_tidy.py (#19082)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19082

When you have just one line of deletions, just as with additions, there is no count printed.

Without this fix, we ignore all globs with single-line deletions when selecting which lines were changed.

When all the changes in the file were single-line, this meant no line-filtering at all!

Differential Revision:

D14860426

fbshipit-source-id:

c60e9d84f9520871fc0c08fa8c772c227d06fa27

Michael Suo [Wed, 17 Apr 2019 23:48:28 +0000 (16:48 -0700)]

Revert

D14901379: [jit] Add options to Operator to enable registration of alias analysis passes

Differential Revision:

D14901379

Original commit changeset:

d92a497e280f

fbshipit-source-id:

51d31491ab90907a6c95af5d8a59dff5e5ed36a4

Michael Suo [Wed, 17 Apr 2019 23:48:28 +0000 (16:48 -0700)]

Revert

D14901485: [jit] Only require python print on certain namespaces

Differential Revision:

D14901485

Original commit changeset:

4b02a66d325b

fbshipit-source-id:

93348056c00f43c403cbf0d34f8c565680ceda11

Yinghai Lu [Wed, 17 Apr 2019 23:40:58 +0000 (16:40 -0700)]

Remove unused template parameter in OnnxifiOp (#19362)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19362

`float` type is never used in OnnxifiOp....

Reviewed By: bddppq

Differential Revision:

D14977970

fbshipit-source-id:

8fee02659dbe408e5a3e0ff95d74c04836c5c281

Jerry Zhang [Wed, 17 Apr 2019 23:10:05 +0000 (16:10 -0700)]

Add empty_quantized (#18960)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18960

empty_affine_quantized creates an empty affine quantized Tensor from scratch.

We might need this when we implement quantized operators.

Differential Revision:

D14810261

fbshipit-source-id:

f07d8bf89822d02a202ee81c78a17aa4b3e571cc

Elias Ellison [Wed, 17 Apr 2019 23:01:41 +0000 (16:01 -0700)]

Cast not expressions to bool (#19361)

Summary:

As part of implicitly casting condition statements, we should be casting not expressions as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19361

Differential Revision:

D14984275

Pulled By: eellison

fbshipit-source-id:

f8dae64f74777154c25f7a6bcdac03cf44cbb60b

Owen Anderson [Wed, 17 Apr 2019 22:33:07 +0000 (15:33 -0700)]

Eliminate type dispatch from copy_kernel, and use memcpy directly rather than implementing our own copy. (#19198)

Summary:

It turns out that copying bytes is the same no matter what type

they're interpreted as, and memcpy is already vectorized on every

platform of note. Paring this down to the simplest implementation

saves just over 4KB off libtorch.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19198

Differential Revision:

D14922656

Pulled By: resistor

fbshipit-source-id:

bb03899dd8f6b857847b822061e7aeb18c19e7b4

Bram Wasti [Wed, 17 Apr 2019 21:17:01 +0000 (14:17 -0700)]

Only require python print on certain namespaces (#18846)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18846

ghimport-source-id:

b211e15d24c88fdc32d79222d9fce2fa9c291541

Differential Revision:

D14901485

Pulled By: bwasti

fbshipit-source-id:

4b02a66d325ba5391d1f838055aea13b5e4f6485

Bram Wasti [Wed, 17 Apr 2019 20:07:12 +0000 (13:07 -0700)]

Add options to Operator to enable registration of alias analysis passes (#18589)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18589

ghimport-source-id:

dab203f6be13bf41963848f5315235b6bbe45c08

Differential Revision:

D14901379

Pulled By: bwasti

fbshipit-source-id:

d92a497e280f1b0a63b11a9fd8ae9b48bf52e6bf

Richard Zou [Wed, 17 Apr 2019 19:58:04 +0000 (12:58 -0700)]

Error out on in-place binops on tensors with internal overlap (#19317)

Summary:

This adds checks for `mul_`, `add_`, `sub_`, `div_`, the most common

binops. See #17935 for more details.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19317

Differential Revision:

D14972399

Pulled By: zou3519

fbshipit-source-id:

b9de331dbdb2544ee859ded725a5b5659bfd11d2

Vitaly Fedyunin [Wed, 17 Apr 2019 19:49:37 +0000 (12:49 -0700)]

Update for #19326

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19367

Differential Revision:

D14981835

Pulled By: VitalyFedyunin

fbshipit-source-id:

e8a97986d9669ed7f465a7ba771801bdd043b606

Zafar Takhirov [Wed, 17 Apr 2019 18:19:19 +0000 (11:19 -0700)]

Decorator to make sure we can import `core` from caffe2 (#19273)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19273

Some of the CIs are failing if the protobuf is not installed. Protobuf is imported as part of the `caffe2.python.core`, and this adds a skip decorator to avoid running tests that depend on `caffe2.python.core`

Reviewed By: jianyuh

Differential Revision:

D14936387

fbshipit-source-id:

e508a1858727bbd52c951d3018e2328e14f126be

Yinghai Lu [Wed, 17 Apr 2019 16:37:37 +0000 (09:37 -0700)]

Eliminate AdjustBatch ops (#19083)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19083

As we have discussed, there are too many of AdjustBatch ops and they incur reallocation overhead and affects the performance. We will eliminate these ops by

- inling the input adjust batch op into Glow

- inling the output adjust batch op into OnnxifiOp and do that only conditionally.

This is the C2 part of the change and requires change from Glow side to work e2e.

Reviewed By: rdzhabarov

Differential Revision:

D14860582

fbshipit-source-id:

ac2588b894bac25735babb62b1924acc559face6

Bharat123rox [Wed, 17 Apr 2019 11:31:46 +0000 (04:31 -0700)]

Add rst entry for nn.MultiheadAttention (#19346)

Summary:

Fix #19259 by adding the missing `autoclass` entry for `nn.MultiheadAttention` from [here](https://github.com/pytorch/pytorch/blob/master/torch/nn/modules/activation.py#L676)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19346

Differential Revision:

D14971426

Pulled By: soumith

fbshipit-source-id:

ceaaa8ea4618c38fa2bff139e7fa0d6c9ea193ea

Sebastian Messmer [Wed, 17 Apr 2019 06:59:02 +0000 (23:59 -0700)]

Delete C10Tensor (#19328)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19328

Plans changed and we don't want this class anymore.

Reviewed By: dzhulgakov

Differential Revision:

D14966746

fbshipit-source-id:

09ea4c95b352bc1a250834d32f35a94e401f2347

Junjie Bai [Wed, 17 Apr 2019 04:44:22 +0000 (21:44 -0700)]

Fix python lint (#19331)

Summary:

VitalyFedyunin jerryzh168

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19331

Differential Revision:

D14969435

Pulled By: bddppq

fbshipit-source-id:

c1555c52064758ecbe668f92b837f2d7524f6118

Nikolay Korovaiko [Wed, 17 Apr 2019 04:08:38 +0000 (21:08 -0700)]

Profiling pipeline part1

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18772

Differential Revision:

D14952781

Pulled By: Krovatkin

fbshipit-source-id:

1e99fc9053c377291167f0b04b0f0829b452dbc4

Tongzhou Wang [Wed, 17 Apr 2019 03:25:11 +0000 (20:25 -0700)]

Fix lint

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19310

Differential Revision:

D14952046

Pulled By: soumith

fbshipit-source-id:

1bbaaad6f932a832ea8e5e804d0d9cd9140a5071

Jerry Zhang [Wed, 17 Apr 2019 03:02:04 +0000 (20:02 -0700)]

Add slicing and int_repr() to QTensor (#19296)

Summary:

Stack:

:black_circle: **#19296 [pt1][quant] Add slicing and int_repr() to QTensor** [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14756833/)

:white_circle: #18960 [pt1][quant] Add empty_quantized [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14810261/)

:white_circle: #19312 Use the QTensor with QReLU [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14819460/)

:white_circle: #19319 [RFC] Quantized SumRelu [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14866442/)

Methods added to pytorch python frontend:

- int_repr() returns a CPUByte Tensor which copies the data of QTensor.

- Added as_strided for QTensorImpl which provides support for slicing a QTensor(see test_torch.py)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19296

Differential Revision:

D14756833

Pulled By: jerryzh168

fbshipit-source-id:

6f4c92393330e725c4351d6ff5f5fe9ac7c768bf

Jerry Zhang [Wed, 17 Apr 2019 02:55:48 +0000 (19:55 -0700)]

move const defs of DeviceType to DeviceType.h (#19185)

Summary:

Stack:

:black_circle: **#19185 [c10][core][ez] move const defs of DeviceType to DeviceType.h** [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14909415/)

att

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19185

Differential Revision:

D14909415

Pulled By: jerryzh168

fbshipit-source-id:

876cf999424d8394f5ff20e6750133a4e43466d4

Xiaoqiang Zheng [Wed, 17 Apr 2019 02:22:13 +0000 (19:22 -0700)]

Add a fast path for batch-norm CPU inference. (#19152)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19152

Adding a fast path for batch-norm CPU inference when all tensors are contiguous.

* Leverage vectorization through smiple loops.

* Folding linear terms before computation.

* For resnext-101, this version gets 18.95 times faster.

* Add a microbenchmark:

* (buck build mode/opt -c python.package_style=inplace --show-output //caffe2/benchmarks/operator_benchmark:batchnorm_benchmark) && \

(OMP_NUM_THREADS=1 MKL_NUM_THREADS=1 buck-out/gen/caffe2/benchmarks/operator_benchmark/batchnorm_benchmark#binary.par)

* batch_norm: data shape: [1, 256, 3136], bandwidth: 22.26 GB/s

* batch_norm: data shape: [1, 65536, 1], bandwidth: 5.57 GB/s

* batch_norm: data shape: [128, 2048, 1], bandwidth: 18.21 GB/s

Reviewed By: soumith, BIT-silence

Differential Revision:

D14889728

fbshipit-source-id:

20c9e567e38ff7dbb9097873b85160eca2b0a795

Jerry Zhang [Wed, 17 Apr 2019 01:57:13 +0000 (18:57 -0700)]

Testing for folded conv_bn_relu (#19298)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19298

Proper testing for conv_bn_relu folding

Differential Revision:

D13998891

fbshipit-source-id:

ceb58ccec19885cbbf38964ee0d0db070e098b4a

Ilia Cherniavskii [Wed, 17 Apr 2019 01:21:36 +0000 (18:21 -0700)]

Initialize intra-op threads in JIT thread pool (#19058)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19058

ghimport-source-id:

53e87df8d93459259854a17d4de3348e463622dc

Differential Revision:

D14849624

Pulled By: ilia-cher

fbshipit-source-id:

5043a1d4330e38857c8e04c547526a3ba5b30fa9

Mikhail Zolotukhin [Tue, 16 Apr 2019 22:38:08 +0000 (15:38 -0700)]

Fix ASSERT_ANY_THROW. (#19321)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19321

ghimport-source-id:

9efffc36950152105bd0dc13f450161367101410

Differential Revision:

D14962184

Pulled By: ZolotukhinM

fbshipit-source-id:

22d602f50eb5e17a3e3f59cc7feb59a8d88df00d

David Riazati [Tue, 16 Apr 2019 22:03:47 +0000 (15:03 -0700)]

Add len() for strings (#19320)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19320

ghimport-source-id:

62131cb24e9bf65f0ef3e60001cb36509a1f4163

Reviewed By: bethebunny

Differential Revision:

D14961078

Pulled By: driazati

fbshipit-source-id:

08b9a4b10e4a47ea09ebf55a4743defa40c74698

Xiang Gao [Tue, 16 Apr 2019 20:55:37 +0000 (13:55 -0700)]

Step 3: Add support for return_counts to torch.unique for dim not None (#18650)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18650

ghimport-source-id:

75759c95e6c48e27c172b919097dbc40c6bfb5e6

Differential Revision:

D14892319

Pulled By: VitalyFedyunin

fbshipit-source-id:

ec5d1b80fc879d273ac5a534434fd648468dda1e

Karl Ostmo [Tue, 16 Apr 2019 19:59:27 +0000 (12:59 -0700)]

invoke NN smoketests from a python loop instead of a batch file (#18756)

Summary:

I tried first to convert the `.bat` script to a Bash `.sh` script, but I got this error:

```

[...]/build/win_tmp/ci_scripts/test_python_nn.sh: line 3: fg: no job control

```

Line 3 was where `%TMP_DIR%/ci_scripts/setup_pytorch_env.bat` was invoked.

I found a potential workaround on stack overflow of adding the `monitor` (`-m`) flag to the script, but hat didn't work either:

```

00:58:00 /bin/bash: cannot set terminal process group (3568): Inappropriate ioctl for device

00:58:00 /bin/bash: no job control in this shell

00:58:00 + %TMP_DIR%/ci_scripts/setup_pytorch_env.bat

00:58:00 /c/Jenkins/workspace/pytorch-builds/pytorch-win-ws2016-cuda9-cudnn7-py3-test1/build/win_tmp/ci_scripts/test_python_nn.sh: line 3: fg: no job control

```

So instead I decided to use Python to replace the `.bat` script. I believe this is an improvement in that it's both "table-driven" now and cross-platform.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18756

Differential Revision:

D14957570

Pulled By: kostmo

fbshipit-source-id:

87794e64b56ffacbde4fd44938045f9f68f7bc2a

Vitaly Fedyunin [Tue, 16 Apr 2019 17:50:48 +0000 (10:50 -0700)]

Adding pin_memory kwarg to zeros, ones, empty, ... tensor constructors (#18952)

Summary:

Make it possible to construct a pinned memory tensor without creating a storage first and without calling pin_memory() function. It is also faster, as copy operation is unnecessary.

Supported functions:

```python

torch.rand_like(t, pin_memory=True)

torch.randn_like(t, pin_memory=True)

torch.empty_like(t, pin_memory=True)

torch.full_like(t, 4, pin_memory=True)

torch.zeros_like(t, pin_memory=True)

torch.ones_like(t, pin_memory=True)

torch.tensor([10,11], pin_memory=True)

torch.randn(3, 5, pin_memory=True)

torch.rand(3, pin_memory=True)

torch.zeros(3, pin_memory=True)

torch.randperm(3, pin_memory=True)

torch.empty(6, pin_memory=True)

torch.ones(6, pin_memory=True)

torch.eye(6, pin_memory=True)

torch.arange(3, 5, pin_memory=True)

```

Part of the bigger: `Remove Storage` plan.

Now compatible with both torch scripts:

` _1 = torch.zeros([10], dtype=6, layout=0, device=torch.device("cpu"), pin_memory=False)`

and

` _1 = torch.zeros([10], dtype=6, layout=0, device=torch.device("cpu"))`

Same checked for all similar functions `rand_like`, `empty_like` and others

It is fixed version of #18455

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18952

Differential Revision:

D14801792

Pulled By: VitalyFedyunin

fbshipit-source-id:

8dbc61078ff7a637d0ecdb95d4e98f704d5450ba

J M Dieterich [Tue, 16 Apr 2019 17:50:28 +0000 (10:50 -0700)]

Enable unit tests for ROCm 2.3 (#19307)

Summary:

Unit tests that hang on clock64() calls are now fixed.

test_gamma_gpu_sample is now fixed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19307

Differential Revision:

D14953420

Pulled By: bddppq

fbshipit-source-id:

efe807b54e047578415eb1b1e03f8ad44ea27c13

Jerry Zhang [Tue, 16 Apr 2019 17:41:03 +0000 (10:41 -0700)]

Fix type conversion in dequant and add a test (#19226)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19226

Type conversoin was wrong previously. Thanks zafartahirov for finding it!

Differential Revision:

D14926610

fbshipit-source-id:

6824f9813137a3d171694d743fbb437a663b1f88

Alexandr Morev [Tue, 16 Apr 2019 17:19:04 +0000 (10:19 -0700)]

math module support (#19115)

Summary:

This PR refer to issue [#19026](https://github.com/pytorch/pytorch/issues/19026)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19115

Differential Revision:

D14936053

Pulled By: driazati

fbshipit-source-id:

68d5f33ced085fcb8c10ff953bc7e99df055eccc

Shen Li [Tue, 16 Apr 2019 16:35:36 +0000 (09:35 -0700)]

Revert replicate.py to disallow replicating multi-device modules (#19278)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19278

Based on discussion in https://github.com/pytorch/pytorch/pull/19278 and https://github.com/pytorch/pytorch/pull/18687, changes to replicate.py will be reverted to disallow replicating multi-device modules.

Reviewed By: pietern

Differential Revision:

D14940018

fbshipit-source-id:

7504c0f4325c2639264c52dcbb499e61c9ad2c26

Zachary DeVito [Tue, 16 Apr 2019 16:01:03 +0000 (09:01 -0700)]

graph_for based on last_optimized_executed_graph (#19142)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19142

ghimport-source-id:

822013fb7e93032c74867fc77c6774c680aef6d1

Differential Revision:

D14888703

Pulled By: zdevito

fbshipit-source-id:

a2ad65a042d08b1adef965c2cceef37bb5d26ba9

Richard Zou [Tue, 16 Apr 2019 15:51:01 +0000 (08:51 -0700)]

Enable half for CUDA dense EmbeddingBag backward. (#19293)

Summary:

I audited the relevant kernel and saw it accumulates a good deal into float

so it should be fine.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19293

Differential Revision:

D14942274

Pulled By: zou3519

fbshipit-source-id:

36996ba0fbb29fbfb12b27bfe9c0ad1eb012ba3c

Mingzhe Li [Tue, 16 Apr 2019 15:47:25 +0000 (08:47 -0700)]

calculate execution time based on final iterations (#19299)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19299

I saw larger than 5% performance variation with small operators, this diff aims to reduce the variation by avoiding python overhead. Previously, in the benchmark, we run the main loop for 100 iterations then look at the time. If it's not significant, we will double the number of iterations to rerun and look at the result. We continue this process until it becomes significant. We calculate the time by total_time / number of iterations. The issue is that we are including multiple python trigger overhead.

Now, I change the logic to calculate execution time based on the last run instead of all runs, the equation is time_in_last_run/number of iterations.

Reviewed By: hl475

Differential Revision:

D14925287

fbshipit-source-id:

cb646298c08a651e27b99a5547350da367ffff47

Ilia Cherniavskii [Tue, 16 Apr 2019 07:13:50 +0000 (00:13 -0700)]

Move OMP/MKL thread initialization into ATen/Parallel (#19011)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19011

ghimport-source-id:

432e31eccfd0e59fa21a790f861e6b2ff4fdbac6

Differential Revision:

D14846034

Pulled By: ilia-cher

fbshipit-source-id:

d9d03c761d34bac80e09ce776e41c20fd3b04389

Mark Santaniello [Tue, 16 Apr 2019 06:40:21 +0000 (23:40 -0700)]

Avoid undefined symbol error when building AdIndexer LTO (#19009)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19009

Move the definition of `MulFunctor<>::Backward()` into a header file.

Reviewed By: BIT-silence

Differential Revision:

D14823230

fbshipit-source-id:

1efaec01863fcc02dcbe7e788d376e72f8564501

Nikolay Korovaiko [Tue, 16 Apr 2019 05:05:20 +0000 (22:05 -0700)]

Ellipsis in subscript

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17763

Differential Revision:

D14893533

Pulled By: Krovatkin

fbshipit-source-id:

c46b4e386d3aa30e6dc03e3052d2e5ff097fa74b

Ilia Cherniavskii [Tue, 16 Apr 2019 03:24:10 +0000 (20:24 -0700)]

Add input information in RecordFunction calls (#18717)

Summary:

Add input information into generated RecordFunction calls in

VariableType wrappers, JIT operators and a few more locations

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18717

Differential Revision:

D14729156

Pulled By: ilia-cher

fbshipit-source-id:

811ac4cbfd85af5c389ef030a7e82ef454afadec

Summer Deng [Mon, 15 Apr 2019 23:43:58 +0000 (16:43 -0700)]

Add NHWC order support in the cost inference function of 3d conv (#19170)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19170

As title

The quantized resnext3d model in production got the following failures without the fix:

```

Caffe2 operator Int8ConvRelu logging error: [enforce fail at conv_pool_op_base.h:463] order == StorageOrder::NCHW. 1 vs 2. Conv3D only supports NCHW on the production quantized model

```

Reviewed By: jspark1105

Differential Revision:

D14894276

fbshipit-source-id:

ef97772277f322ed45215e382c3b4a3702e47e59

Jongsoo Park [Mon, 15 Apr 2019 21:35:25 +0000 (14:35 -0700)]

unit test with multiple op invocations (#19118)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19118

A bug introduced by

D14700576 reported by Yufei (fixed by

D14778810 and

D14785256) was not detected by our units tests.

This diff improves unit tests to catch such errors (with this diff and without

D14778810, we can reproduce the bug Yufei reported).

This improvement also revealed a bug that affects the accuracy when we pre-pack weight and bias together and the pre-packed weight/bias are used by multiple nets. We were modifying the pre-packed bias in-place which was supposed to be constants.

Reviewed By: csummersea

Differential Revision:

D14806077

fbshipit-source-id:

aa9049c74b6ea98d21fbd097de306447a662a46d

Karl Ostmo [Mon, 15 Apr 2019 19:26:50 +0000 (12:26 -0700)]

Run shellcheck on Jenkins scripts (#18874)

Summary:

closes #18873

Doesn't fail the build on warnings yet.

Also fix most severe shellcheck warnings

Limited to `.jenkins/pytorch/` at this time

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18874

Differential Revision:

D14936165

Pulled By: kostmo

fbshipit-source-id:

1ee335695e54fe6c387ef0f6606ea7011dad0fd4

Pieter Noordhuis [Mon, 15 Apr 2019 19:24:43 +0000 (12:24 -0700)]

Make DistributedDataParallel use new reducer (#18953)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18953

This removes Python side bucketing code from DistributedDataParallel

and replaces it with calls to the new C++ based bucketing and reducing

code. To confirm this is working well, we ran a test with both the

previous implementation and the new implementation, and confirmed they

are numerically equivalent.

Performance is improved by a couple percent or more, including the

single machine multiple GPU runs.

Closes #13273.

Reviewed By: mrshenli

Differential Revision:

D14580911

fbshipit-source-id:

44e76f8b0b7e58dd6c91644e3df4660ca2ee4ae2

Gemfield [Mon, 15 Apr 2019 19:23:36 +0000 (12:23 -0700)]

Fix the return value of ParseFromString (#19262)

Summary:

Fix the return value of ParseFromString.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19262

Differential Revision:

D14937605

Pulled By: ezyang

fbshipit-source-id:

3f441086517186a075efb3d74f09160463b696b3

vishwakftw [Mon, 15 Apr 2019 18:53:44 +0000 (11:53 -0700)]

Modify Cholesky derivative (#19116)

Summary:

The derivative of the Cholesky decomposition was previously a triangular matrix.

Changelog:

- Modify the derivative of Cholesky from a triangular matrix to symmetric matrix

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19116

Differential Revision:

D14935470

Pulled By: ezyang

fbshipit-source-id:

1c1c76b478c6b99e4e16624682842cb632e8e8b9

Karl Ostmo [Mon, 15 Apr 2019 18:38:44 +0000 (11:38 -0700)]

produce diagram for caffe2 build matrix (#18517)

Summary:

This PR splits the configuration tree data from the logic used to construct the tree, for both `pytorch` and `caffe2` build configs.

Caffe2 configs are also now illustrated in a diagram.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18517

Differential Revision:

D14936170

Pulled By: kostmo

fbshipit-source-id:

7b40a88512627377c5ea0f24765dabfef76ca279

Sam Gross [Mon, 15 Apr 2019 18:13:33 +0000 (11:13 -0700)]

Free all blocks with outstanding events on OOM-retry (#19222)

Summary:

The caching allocator tries to free all blocks on an out-of-memory

error. Previously, it did not free blocks that still had outstanding

stream uses. This change synchronizes on the outstanding events and

frees those blocks.

See #19219

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19222

Differential Revision:

D14925071

Pulled By: colesbury

fbshipit-source-id:

a2e9fe957ec11b00ea8e6c0468436c519667c558

Vitaly Fedyunin [Mon, 15 Apr 2019 16:13:49 +0000 (09:13 -0700)]

Make sure that any of the future versions can load and execute older models. (#19174)

Summary:

Helps to test #18952

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19174

Differential Revision:

D14899474

Pulled By: VitalyFedyunin

fbshipit-source-id:

a4854ad44da28bd0f5115ca316e6078cbfe29d0d

Sebastian Messmer [Sun, 14 Apr 2019 04:42:28 +0000 (21:42 -0700)]

Sync fbcode/caffe2 and xplat/caffe2 (1) (#19218)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19218

Sync some contents between fbcode/caffe2 and xplat/caffe2 to move closer towards a world where they are identical.

Reviewed By: dzhulgakov

Differential Revision:

D14919916

fbshipit-source-id:

29c6b6d89ac556d58ae3cd02619aca88c79591c1

Ailing Zhang [Sun, 14 Apr 2019 03:13:52 +0000 (20:13 -0700)]

upgrade bazel version in CI [xla ci] (#19246)

Summary:

The latest TF requires upgrading bazel version.

This PR should fix xla tests in CI.

[xla ci]

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19246

Differential Revision:

D14929533

Pulled By: ailzhang

fbshipit-source-id:

f6deb31428ed39f267d96bb9814d06f76641e73b

Junjie Bai [Sat, 13 Apr 2019 20:05:12 +0000 (13:05 -0700)]

Update docker images to use ROCm 2.3 (#19231)

Summary:

xw285cornell petrex iotamudelta

https://ci.pytorch.org/jenkins/job/caffe2-builds/job/py2-clang7-rocmdeb-ubuntu16.04-trigger-test/24676/

https://ci.pytorch.org/jenkins/job/caffe2-builds/job/py2-devtoolset7-rocmrpm-centos7.5-trigger-test/17679/

https://ci.pytorch.org/jenkins/job/pytorch-builds/job/py2-clang7-rocmdeb-ubuntu16.04-trigger/24652/

https://ci.pytorch.org/jenkins/job/pytorch-builds/job/py2-devtoolset7-rocmrpm-centos7.5-trigger/9943/

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19231

Differential Revision:

D14928580

Pulled By: bddppq

fbshipit-source-id:

025b0affa6bcda6ee9f823dfc6c2cf8b92e71027

Zachary DeVito [Sat, 13 Apr 2019 17:01:34 +0000 (10:01 -0700)]

fix flake8 (#19243)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19243

ghimport-source-id:

ae80aed3a5742df21afb6e55979686220a27cce7

Differential Revision:

D14928670

Pulled By: zdevito

fbshipit-source-id:

20ec0d5c8d6f1c515beb55e2e63eddf3b2fc12dd

Zachary DeVito [Sat, 13 Apr 2019 15:28:13 +0000 (08:28 -0700)]

Remove GraphExecutor's python bindings (#19141)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19141

ghimport-source-id:

796a41f5514d29959af052fcf5391a2834850a80

Reviewed By: jamesr66a

Differential Revision:

D14888702

Pulled By: zdevito

fbshipit-source-id:

c280145f08e7bc210434d1c99396a3257b626cf9

Zachary DeVito [Sat, 13 Apr 2019 15:28:11 +0000 (08:28 -0700)]

Cleanup ScriptModule bindings (#19138)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19138

ghimport-source-id:

10f810f5e7551c1cb65fc4799744083bd7ffd1ee

Reviewed By: jamesr66a

Differential Revision:

D14886945

Pulled By: zdevito

fbshipit-source-id:

a5e5bb08694d03166a7516ec038656c2a02e7896

Zachary DeVito [Sat, 13 Apr 2019 15:28:11 +0000 (08:28 -0700)]

get propagate_shape logic out of module.h (#19137)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19137

ghimport-source-id:

2394765f2d401e68ffdfa4c985bfab4cca2517f8

Reviewed By: jamesr66a

Differential Revision:

D14885946

Pulled By: zdevito

fbshipit-source-id:

daa2894ed9761107e9d273bb172840dc23ace072

Zachary DeVito [Sat, 13 Apr 2019 15:28:11 +0000 (08:28 -0700)]

Make debug subgraph inlining thread local (#19136)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19136

ghimport-source-id:

3a24ab36aa753ce5cce7bba3467bdbe88e5c7f60

Reviewed By: jamesr66a

Differential Revision:

D14885051

Pulled By: zdevito

fbshipit-source-id:

b39c6ceef73ad9caefcbf8f40dd1b9132bba03c2

Zachary DeVito [Sat, 13 Apr 2019 15:28:10 +0000 (08:28 -0700)]

Support Kwargs in C++ Function/Method calls (#19086)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19086

ghimport-source-id:

7790a5cc6e32f6f72e92add0b9f76dfa49ad9859

Reviewed By: jamesr66a

Differential Revision:

D14875729

Pulled By: zdevito

fbshipit-source-id:

ad1e4542381d9c33722155459e794f1ba4660dbb

Johannes M Dieterich [Sat, 13 Apr 2019 04:42:10 +0000 (21:42 -0700)]

Enable working ROCm tests (#19169)

Summary:

Enable multi-GPU tests that work with ROCm 2.2. Have been run three times on CI to ensure stability.

While there, remove skipIfRocm annotations for tests that depend on MAGMA. They still skip but now for the correct reason (no MAGMA) to improve our diagnostics.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19169

Differential Revision:

D14924812

Pulled By: bddppq

fbshipit-source-id:

8b88f58bba58a08ddcd439e899a0abc6198fef64

Ailing Zhang [Sat, 13 Apr 2019 04:26:27 +0000 (21:26 -0700)]

import warnings in torch.hub & fix master CI travis (#19181)

Summary:

fix missing import in #18758

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19181

Differential Revision:

D14908198

Pulled By: ailzhang

fbshipit-source-id:

31e0dc4a27521103a1b93f72511ae1b64a36117f

Jerry Zhang [Sat, 13 Apr 2019 01:10:37 +0000 (18:10 -0700)]

fix lint errors in gen.py (#19221)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19221

att

Reviewed By: colesbury

Differential Revision:

D14923858

fbshipit-source-id:

4793d7794172d401455c5ce72dfc27dddad515d4

Bram Wasti [Fri, 12 Apr 2019 21:53:17 +0000 (14:53 -0700)]

Add pass registration mechanism (#18587)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18587

ghimport-source-id:

80d753f7046a2a719e0c076684f44fa2059a0921

Differential Revision:

D14901227

Pulled By: bwasti

fbshipit-source-id:

56511d0313419b63945a36b80e9ea51abdef2bd4

Wanchao Liang [Fri, 12 Apr 2019 21:24:37 +0000 (14:24 -0700)]

JIT Layernorm fusion (#18266)

Summary:

Partially fuse layer_norm by decomposing layer_norm into the batchnorm kernel that computes the stats, and then fusing the affine operations after the reduce operations, this is similar to the batchnorm fusion that apaszke did, it also only works in inference mode now.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18266

Differential Revision:

D14879877

Pulled By: wanchaol

fbshipit-source-id:

0197d8f2a17ec438d3e53f4c411d759c1ae81efe

Yinghai Lu [Fri, 12 Apr 2019 21:23:06 +0000 (14:23 -0700)]

Add more debugging helper to net transformer (#19176)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19176

Add some amenities for debugging.

Reviewed By: llyfacebook

Differential Revision:

D14901740

fbshipit-source-id:

2c4018fdbf7e3aba2a754b6b4103a72893c229c2

Jerry Zhang [Fri, 12 Apr 2019 19:47:39 +0000 (12:47 -0700)]

Add Quantized Backend (#18546)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18546

We'll expose all combinations of various ways of quantization in the top level dispatch key, that is we have AffineCPUTensor, PerChannelAffineCUDATensor, etc.

QTensor method added:

- is_quantized()

- item()

Differential Revision:

D14637671

fbshipit-source-id:

346bc6ef404a570f0efd34e8793056ad3c7855f5

Xiang Gao [Fri, 12 Apr 2019 19:34:29 +0000 (12:34 -0700)]

Step 2: Rename _unique_dim2_temporary_will_remove_soon to unique_dim (#18649)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18649

ghimport-source-id:

3411d240a6af5fe299a889667964730184e30645

Differential Revision:

D14888292

Pulled By: VitalyFedyunin

fbshipit-source-id:

80da83c264598f74ab8decb165da4a1ce2b352bb

Lu Fang [Fri, 12 Apr 2019 18:58:06 +0000 (11:58 -0700)]

Fix onnx ints (#19102)

Summary:

If JIT constant propagation doesn't work, we have to handle the ListConstructor in symbolic.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19102

Reviewed By: zrphercule

Differential Revision:

D14875588

Pulled By: houseroad

fbshipit-source-id:

d25c847d224d2d32db50aae1751100080e115022

Huamin Li [Fri, 12 Apr 2019 18:38:02 +0000 (11:38 -0700)]

use C10_REGISTER for GELU op

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19090

Reviewed By: BIT-silence

Differential Revision:

D14864737

fbshipit-source-id:

8debd53171f7068726f0ab777a13ca46becbfbdf

Edward Yang [Fri, 12 Apr 2019 18:13:39 +0000 (11:13 -0700)]

Fix tabs lint. (#19196)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19196

ghimport-source-id:

c10b1b19b087d7650e1614f008a9c2db21dfec2f

Differential Revision:

D14913428

Pulled By: ezyang

fbshipit-source-id:

815b919d8e4516d0e5d89ebbdc4dff6d1d08da47

Will Feng [Fri, 12 Apr 2019 16:57:51 +0000 (09:57 -0700)]

Pin nvidia-container-runtime version (#19195)

Summary:

This PR is to fix the CI error:

```

nvidia-docker2 : Depends: nvidia-container-runtime (= 2.0.0+docker18.09.4-1) but 2.0.0+docker18.09.5-1 is to be installed

E: Unable to correct problems, you have held broken packages.

Exited with code 100

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19195

Differential Revision:

D14913104

Pulled By: yf225

fbshipit-source-id:

d151205f5ffe9cac7320ded3c25baa7e051c3623

peter [Fri, 12 Apr 2019 16:25:55 +0000 (09:25 -0700)]

One more fix for #18790

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19187

Differential Revision:

D14913100

Pulled By: ezyang

fbshipit-source-id:

bf147747f933a2c9a35f3ff00bf6b83a4f29286c

Jerry Zhang [Fri, 12 Apr 2019 02:38:21 +0000 (19:38 -0700)]

Fix promoteTypes for QInt types (#19182)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19182

This is a bug discovered by zafartahirov, right now if one of the tensor is QInt

type we'll return undefined, but actually we want to allow ops that accepts

Tensors of the same QInt type to work.

Reviewed By: zafartahirov

Differential Revision:

D14909172

fbshipit-source-id:

492fd6403da8c56e180efe9d632a3b7fc879aecf

Roy Li [Thu, 11 Apr 2019 23:55:39 +0000 (16:55 -0700)]

Replace more usages of Type with DeprecatedTypeProperties (#19093)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19093

ghimport-source-id:

a82e3dce912a173b42a6a7e35eb1302d9f334e03

Differential Revision:

D14865520

Pulled By: li-roy

fbshipit-source-id:

b1a8bf32f87920ce8d82f990d670477bc79d0ca7

David Riazati [Thu, 11 Apr 2019 22:33:51 +0000 (15:33 -0700)]

Support attributes when copying modules (#19040)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19040

ghimport-source-id:

37933efd717795751283cae8141e2e2caaae2e95

Reviewed By: eellison

Differential Revision:

D14895573

Pulled By: driazati

fbshipit-source-id:

bc2723212384ffa673d2a8df2bb57f38c62cc104

Will Feng [Thu, 11 Apr 2019 22:09:35 +0000 (15:09 -0700)]

Move version_counter_ to TensorImpl (#18223)

Summary:

According to https://github.com/pytorch/pytorch/issues/13638#issuecomment-

468055428, after the Variable/Tensor merge, we may capture variables without autograd metadata inside an autograd function, and we need a working version counter in these cases. This PR makes it possible by moving `version_counter_` out of autograd metadata and into TensorImpl, so that variables without autograd metadata still have version counters.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18223

Differential Revision:

D14735123

Pulled By: yf225

fbshipit-source-id:

15f690311393ffd5a53522a226da82f5abb6c65b

Iurii Zdebskyi [Thu, 11 Apr 2019 21:25:21 +0000 (14:25 -0700)]

Enable comp ops for bool tensor (#19109)

Summary:

Enabled comparison ops for bool tensors

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19109

Differential Revision:

D14871187

Pulled By: izdeby

fbshipit-source-id:

cf9951847d69124a93e5e21dd0a39c9568b1037d

Will Feng [Thu, 11 Apr 2019 20:32:45 +0000 (13:32 -0700)]

Change is_variable() to check existence of AutogradMeta, and remove is_variable_ (#19139)

Summary:

Currently, a TensorImpl's `is_variable_` is true if and only if the TensorImpl has AutogradMeta. This PR unifies these two concepts by removing `is_variable_` and change `is_variable()` to check existence of AutogradMeta instead.

Removing `is_variable_` is part of the work in Variable/Tensor merge.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19139

Differential Revision:

D14893339

Pulled By: yf225

fbshipit-source-id:

ceb5e22c3c01f79b5d21d5bdbf4a7d1bc397796a

Zachary DeVito [Thu, 11 Apr 2019 20:30:42 +0000 (13:30 -0700)]

First class modules in the compiler, round 2 (#19167)

Summary:

This PR propagates where we use first-class modules objects into the compiler. This creates a transitionary state where:

* compiler.cpp creates Graphs where `self` is a Module class and attributes/parameters/buffers/submodules are looked up with `prim::GetAttr`

* GraphExecutor still runs "lowered graphs" where the self object has been removed by a compiler pass `lower_first_class_method`.

* Tracing still creates "lowered graphs", and a pass "lift_lowered_method" creates a first-class method graph for things.

* This PR separates out Method and Function. A script::Function is a pure Graph with no `self` bound. Similar to Python, a script::Method is just a bound `self` and its underlying `script::Function`.

* This PR also separates CompilationUnit from Module. A CompilationUnit is just a list of named script::Functions. Class's have a CompilationUnit holding the class methods, and Modules also have a CompilationUnit holding their Methods. This avoids the weird circular case Module --has a-> Class -> has a -> Module ...

Details:

* In this transitionary state, we maintain two copies of a Graph, first-class module and lowered. Th first-class one has a self argument that is the module's class type. The lowered one is the lowered graph that uses the initial_ivalues inputs.

* When defining lowered methods using `_defined_lowered` we immediately create the first-class equivalent. The reverse is done lazily, creating lowered_methods on demand from the class.

* The two way conversions will be deleted in a future PR when the executor itself runs first-class objects. However this requires more changes to (1) the traces, (2) the python bindings, and (3) the onnx export pass and would make this PR way to large.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19167

Differential Revision:

D14891966

Pulled By: zdevito

fbshipit-source-id:

0b5f03118aa65448a15c7a7818e64089ec93d7ea

Gregory Chanan [Thu, 11 Apr 2019 20:22:49 +0000 (13:22 -0700)]

Materialize a non-default device for C2 legacy storage. (#18605)

Summary:

It's not intended that Storages have 'default' CUDA devices, but this is allowable via the Storage::create_legacy codepath.

This also messages with device_caching, because the initial cache is obtained from the Storage, which may have a 'default' device.

Instead, we materialize a device by allocating 0 bytes via the allocator.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18605

Differential Revision:

D14680620

Pulled By: gchanan

fbshipit-source-id:

6d43383d836e90beaf12bfe37c3f0506843f5432

Yinghai Lu [Thu, 11 Apr 2019 19:28:32 +0000 (12:28 -0700)]

Allow empty net type (#19154)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19154

I recently saw some weird workflow error due to empty but set net_type. Maybe we should just fallback to simple net in this case.

Reviewed By: dzhulgakov

Differential Revision:

D14890072

fbshipit-source-id:

4e9edf8232298000713bebb0bfdec61e9c5df17d

Lu Fang [Thu, 11 Apr 2019 19:23:30 +0000 (12:23 -0700)]

Skip Slice if it's no op (#19155)

Summary:

If it's identity op, just skip the slice and return the input.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19155

Reviewed By: zrphercule

Differential Revision:

D14890238

Pulled By: houseroad

fbshipit-source-id:

f87b93df2cca0cb0e8ae2a1d95ba148044eafd4a

Lu Fang [Thu, 11 Apr 2019 18:15:47 +0000 (11:15 -0700)]

Rename ONNX util test names (#19153)

Summary:

Rename test cases.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19153

Reviewed By: zrphercule

Differential Revision:

D14890095

Pulled By: houseroad

fbshipit-source-id:

37a787398c88d9cc92b411c2355b43200cf1c4b0

Pieter Noordhuis [Thu, 11 Apr 2019 16:14:31 +0000 (09:14 -0700)]

Remove ProcessGroup::getGroupRank (#19147)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19147

After #14809 was merged there is no longer a need for getGroupRank.

Every ProcessGroup object has its own rank and size fields which are

accurate for the global group as well as subgroups.

Strictly speaking removing a function in a minor version bump is a big

no-no, but I highly doubt this was ever used outside of

`torch.distributed` itself. This will result in a compile error for

folks who have subclassed the ProcessGroup class though.

If this is a concern we can delay merging until a later point in time,

but eventually this will need to be cleaned up.

Differential Revision:

D14889736

fbshipit-source-id:

3846fe118b3265b50a10ab8b1c75425dad06932d

Zafar Takhirov [Thu, 11 Apr 2019 15:29:52 +0000 (08:29 -0700)]

Basic implementation of QRelu in C10 (#19091)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19091

Implements a basic quantized ReLU (uint8). This is a temporary solution before using the `QTensor` type instead of the tuple.

Reviewed By: dzhulgakov

Differential Revision:

D14565413

fbshipit-source-id:

7d53cf5628cf9ec135603d6a1fb7c79cd9383019

Guanheng Zhang [Thu, 11 Apr 2019 15:04:32 +0000 (08:04 -0700)]

Import MultiheadAttention to PyTorch (#18334)

Summary:

Import MultiheadAttention into the core pytorch framework.

Users now can import MultiheadAttention directly from torch.nn.

See "Attention Is All You Need" for more details related to MultiheadAttention function.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18334

Differential Revision:

D14577966

Pulled By: zhangguanheng66

fbshipit-source-id:

756c0deff623f3780651d9f9a70ce84516c806d3

Xing Wang [Thu, 11 Apr 2019 14:27:46 +0000 (07:27 -0700)]

try to enable uncertainty for lr loss (#17236)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17236

Following the paper in https://papers.nips.cc/paper/7141-what-uncertainties-do-we-need-in-bayesian-deep-learning-for-computer-vision.pdf, approximate the classification case with the regression formulation. For the LRLoss, add penalty based on the variance and regularization on the variance with a tunable parameter lambda.

Reviewed By: chocjy

Differential Revision:

D14077106

fbshipit-source-id:

4405d8995cebdc7275a0dd07857d32a8915d78ef