BBuf [Fri, 27 Aug 2021 17:42:24 +0000 (10:42 -0700)]

fix resize bug (#61166)

Summary:

I think the original intention was for this to take effect only in the align_corners case (because output_size = 1 makes the divisor 0), but it affects the non-align_corners case too. For example:

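```python
import numpy as np
import torch

# Bilinear interpolation expects a floating-point tensor, so float32 is used here.
input = torch.tensor(np.arange(1, 5, dtype=np.float32).reshape((1, 1, 2, 2)))
m = torch.nn.Upsample(scale_factor=0.5, mode="bilinear")
of_out = m(input)
```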

The expected result is [[[[2.5]]]], but PyTorch returns [[[[1.0]]]], which differs from OpenCV and PIL. This PR fixes that.
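To see where 2.5 comes from, the half-pixel coordinate mapping commonly used for non-align_corners resizing can be worked through by hand. The sketch below is plain Python, not PyTorch's kernel. The helper names `source_coord` and `bilinear_sample` are made up for illustration, and the idea that the scale collapses to 0 when output_size = 1 is an assumption based on the description above.

```python
import math

def source_coord(dst_index: int, scale: float) -> float:
    """Map an output pixel index to a fractional input coordinate.

    Half-pixel convention (align_corners=False): pixel centers sit at i + 0.5.
    `scale` is input_size / output_size. Negative coordinates are clamped to 0.
    """
    return max((dst_index + 0.5) * scale - 0.5, 0.0)

def bilinear_sample(image, y: float, x: float) -> float:
    """Bilinearly interpolate a 2D list of floats at fractional position (y, x)."""
    height, width = len(image), len(image[0])
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, height - 1), min(x0 + 1, width - 1)
    wy, wx = y - y0, x - x0
    top = image[y0][x0] * (1 - wx) + image[y0][x1] * wx
    bottom = image[y1][x0] * (1 - wx) + image[y1][x1] * wx
    return top * (1 - wy) + bottom * wy

image = [[1.0, 2.0], [3.0, 4.0]]

# Correct scale for 2 -> 1 downsampling: input_size / output_size = 2.
center = source_coord(0, 2.0)
expected = bilinear_sample(image, center, center)

# Assumed buggy behavior: the scale collapses to 0, so the sample lands on pixel (0, 0).
collapsed = source_coord(0, 0.0)
buggy = bilinear_sample(image, collapsed, collapsed)
```

With the correct scale the single output pixel samples the center of the 2x2 input and averages all four values; with a scale of 0 it samples the top-left pixel only, which is consistent with the [[[[1.0]]]] reported above.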

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61166

Reviewed By: malfet

Differential Revision:

D30543178

Pulled By: heitorschueroff

fbshipit-source-id:

21a4035483981986b0ae4a401ef0efbc565ccaf1

Pierluigi Taddei [Fri, 27 Aug 2021 17:36:08 +0000 (10:36 -0700)]

[caffe2] fixes to allow stricter compilation flag (#64016)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64016

In order to increase the strictness of compilation for some targets depending on caffe2, we need to fix some errors uncovered when raising such flags.

This change introduces the required override tokens for virtual destructors.

Test Plan: CI. Moreover targets depending on caffe2 using clang strict warnings now compile

Reviewed By: kalman5

Differential Revision:

D30541714

fbshipit-source-id:

564af31b4a9df3536d7d6f43ad29e1d0c7040551

Heitor Schueroff [Fri, 27 Aug 2021 17:16:02 +0000 (10:16 -0700)]

Added reference tests to ReductionOpInfo (#62900)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/62900

Test Plan: Imported from OSS

Reviewed By: mruberry

Differential Revision:

D30408815

Pulled By: heitorschueroff

fbshipit-source-id:

6a1f82ac281920ff7405a42f46ccd796e60af9d6

Mike Iovine [Fri, 27 Aug 2021 17:10:48 +0000 (10:10 -0700)]

[JIT] Add aten::slice optimization (#63049)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63049

Given a graph produced from a function like this:

```
def foo():
    li = [1, 2, 3, 4, 5, 6]
    return li[0:2]
```

This pass produces a graph like this:

```
def foo():
    li = [1, 2]
    return li
```

These changes are mostly adapted from https://github.com/pytorch/pytorch/pull/62297/
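The idea of folding a slice of a constant list at compile time can be illustrated on Python's own AST. This is only an analogy: the real pass works on TorchScript graph nodes, and the `FoldListSlice` class and `fold` helper below are hypothetical names, not part of PyTorch.

```python
import ast

class FoldListSlice(ast.NodeTransformer):
    """Fold `<list literal>[start:stop:step]` into the resulting list literal."""

    def visit_Subscript(self, node: ast.Subscript) -> ast.AST:
        self.generic_visit(node)  # fold nested slices first
        if not (isinstance(node.value, ast.List) and isinstance(node.slice, ast.Slice)):
            return node
        try:
            items = ast.literal_eval(node.value)
            bounds = [
                None if part is None else ast.literal_eval(part)
                for part in (node.slice.lower, node.slice.upper, node.slice.step)
            ]
            sliced = items[slice(*bounds)]
        except (ValueError, TypeError):
            return node  # not compile-time constants, leave the node untouched
        folded = ast.parse(repr(sliced), mode="eval").body
        return ast.copy_location(folded, node)

def fold(source: str) -> ast.Expression:
    """Parse an expression and apply the folding transform to it."""
    tree = FoldListSlice().visit(ast.parse(source, mode="eval"))
    return ast.fix_missing_locations(tree)

folded_tree = fold("[1, 2, 3, 4, 5, 6][0:2]")
folded_value = ast.literal_eval(folded_tree)
```

After the transform the expression body is a plain list literal, so no slicing remains to be done at run time.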

Test Plan: `buck test //caffe2/test:jit -- TestPeephole`

Reviewed By: eellison

Differential Revision:

D30231044

fbshipit-source-id:

d12ee39f68289a574f533041a5adb38b2f000dd5

Jonathan Chang [Fri, 27 Aug 2021 16:49:39 +0000 (09:49 -0700)]

Add doc for nn.MultiMarginLoss (shape, example) (#63760)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63747

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63760

Reviewed By: malfet

Differential Revision:

D30541581

Pulled By: jbschlosser

fbshipit-source-id:

99560641e614296645eb0e51999513f57dfcfa98

Peter Bell [Fri, 27 Aug 2021 16:37:10 +0000 (09:37 -0700)]

Refactor structured set_output in Register{DispatchKey}.cpp (#62188)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62188

These parts of the `set_output` code are identical for all operators in the

kernel registration files. So, this moves them from being copied into every

class to two helper functions at the top of the file.

Test Plan: Imported from OSS

Reviewed By: soulitzer

Differential Revision:

D29962045

Pulled By: albanD

fbshipit-source-id:

753b8aac755f3c91b77ffa2c30a89ac91a84b7c4

Sergei Vorobev [Fri, 27 Aug 2021 16:31:36 +0000 (09:31 -0700)]

[bazel] GPU-support: add @local_config_cuda and @cuda (#63604)

Summary:

## Context

We take the first step toward GPU support in the bazel build by adding the bazel external workspaces `local_config_cuda` and `cuda`. The first has some hardcoded values and lists of files; the second provides a nicer, high-level wrapper that maps onto the bazel targets pytorch already expects, which are guarded by the `if_cuda` macro.

The `local_config_` prefix signals that we are breaking the bazel hermeticity philosophy by explicitly relying on the CUDA installation present on the machine.

## Testing

Notice an important scenario that is unlocked by this change: compilation of cpp code that depends on cuda libraries (e.g. cuda.h).

Before:

```

sergei.vorobev@cs-sv7xn77uoy-gpu-1628706590:~/src/pytorch4$ bazelisk build --define=cuda=true //:c10

ERROR: /home/sergei.vorobev/src/pytorch4/tools/config/BUILD:12:1: no such package 'tools/toolchain': BUILD file not found in any of the following directories. Add a BUILD file to a directory to mark it as a package.

- /home/sergei.vorobev/src/pytorch4/tools/toolchain and referenced by '//tools/config:cuda_enabled_and_capable'

ERROR: While resolving configuration keys for //:c10: Analysis failed

ERROR: Analysis of target '//:c10' failed; build aborted: Analysis failed

INFO: Elapsed time: 0.259s

INFO: 0 processes.

FAILED: Build did NOT complete successfully (2 packages loaded, 2 targets configured)

```

After:

```

sergei.vorobev@cs-sv7xn77uoy-gpu-1628706590:~/src/pytorch4$ bazelisk build --define=cuda=true //:c10

INFO: Analyzed target //:c10 (6 packages loaded, 246 targets configured).

INFO: Found 1 target...

Target //:c10 up-to-date:

bazel-bin/libc10.lo

bazel-bin/libc10.so

INFO: Elapsed time: 0.617s, Critical Path: 0.04s

INFO: 0 processes.

INFO: Build completed successfully, 1 total action

```

The `//:c10` target is a good test case for this because its [glob differs](https://github.com/pytorch/pytorch/blob/075024b9a34904ec3ecdab3704c3bcaa329bdfea/BUILD.bazel#L76-L81) depending on whether we compile for CUDA.

## What is out of scope of this PR

This PR is the first in a series providing comprehensive GPU bazel build support. Namely, we don't tackle the [cu_library](https://github.com/pytorch/pytorch/blob/11a40ad915d4d3d8551588e303204810887fcf8d/tools/rules/cu.bzl#L2) implementation here; that will be a separate, large chunk of work.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63604

Reviewed By: soulitzer

Differential Revision:

D30442083

Pulled By: malfet

fbshipit-source-id:

b2a8e4f7e5a25a69b960a82d9e36ba568eb64595

Hanton Yang [Fri, 27 Aug 2021 16:23:45 +0000 (09:23 -0700)]

[OSS] Enable Metal in PyTorch MacOS nightly builds (#63718)

Summary:

Builds on https://github.com/pytorch/pytorch/pull/63825

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63718

Test Plan:

1. Add the `ci/binaries` label to the PR so that CI builds the nightly binaries

2. Make sure the following CI jobs build with the `USE_PYTORCH_METAL_EXPORT` option set to `ON`:

```

ci/circleci: binary_macos_arm64_conda_3_8_cpu_nightly_build

ci/circleci: binary_macos_arm64_conda_3_9_cpu_nightly_build

ci/circleci: binary_macos_arm64_wheel_3_8_cpu_nightly_build

ci/circleci: binary_macos_arm64_wheel_3_9_cpu_nightly_build

ci/circleci: binary_macos_conda_3_6_cpu_nightly_build

ci/circleci: binary_macos_conda_3_7_cpu_nightly_build

ci/circleci: binary_macos_conda_3_8_cpu_nightly_build

ci/circleci: binary_macos_conda_3_9_cpu_nightly_build

ci/circleci: binary_macos_libtorch_3_7_cpu_nightly_build

ci/circleci: binary_macos_wheel_3_6_cpu_nightly_build

ci/circleci: binary_macos_wheel_3_7_cpu_nightly_build

ci/circleci: binary_macos_wheel_3_8_cpu_nightly_build

ci/circleci: binary_macos_wheel_3_9_cpu_nightly_build

```

3. Test `conda` and `wheel` builds locally on the [HelloWorld-Metal](https://github.com/pytorch/ios-demo-app/tree/master/HelloWorld-Metal) demo, following [(Prototype) Use iOS GPU in PyTorch](https://pytorch.org/tutorials/prototype/ios_gpu_workflow.html)

(1) conda

```

conda install https://15667941-65600975-gh.circle-artifacts.com/0/Users/distiller/project/final_pkgs/pytorch-1.10.0.dev20210826-py3.8_0.tar.bz2

```

(2) wheel

```

pip3 install https://15598647-65600975-gh.circle-artifacts.com/0/Users/distiller/project/final_pkgs/torch-1.10.0.dev20210824-cp38-none-macosx_10_9_x86_64.whl

```

Reviewed By: xta0

Differential Revision:

D30593167

Pulled By: hanton

fbshipit-source-id:

471da204e94b29c11301c857c50501307a5f0785

Aswin Murali [Fri, 27 Aug 2021 16:02:22 +0000 (09:02 -0700)]

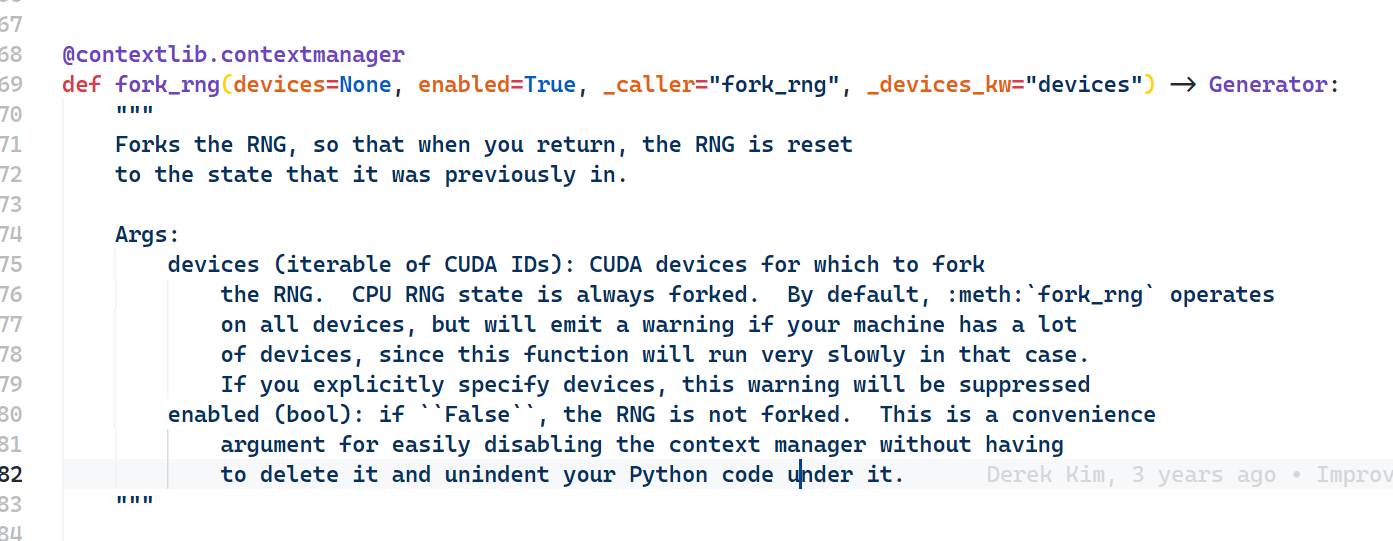

Adds return type annotation for fork_rng function (#63724)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63723

Since it's a generator function, the return type annotation should be `Generator`.
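As an illustration of the annotation, here is a minimal sketch of a generator-based context manager that saves and restores some state, loosely in the spirit of `fork_rng`. The function `preserved_state` and its list-based state are hypothetical stand-ins and not PyTorch code.

```python
import contextlib
from typing import Generator, List

@contextlib.contextmanager
def preserved_state(state: List[int]) -> Generator[None, None, None]:
    """Restore `state` to its original contents when the block exits.

    The function body is a generator that yields once and returns nothing,
    hence Generator[None, None, None] (yield type, send type, return type).
    """
    saved = list(state)
    try:
        yield
    finally:
        state[:] = saved

state = [1, 2]
with preserved_state(state):
    state.append(3)  # visible only inside the block
```

The annotation describes the undecorated function; `contextlib.contextmanager` then wraps it into something usable in a `with` statement.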

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63724

Reviewed By: iramazanli

Differential Revision:

D30543098

Pulled By: heitorschueroff

fbshipit-source-id:

ebdd34749defe1e26c899146786a0357ab4b4b9b

gmagogsfm [Fri, 27 Aug 2021 15:49:54 +0000 (08:49 -0700)]

More robust check of whether a class is defined in torch (#64083)

Summary:

This would prevent bugs for classes that:

1) Are defined in a module whose name happens to start with `torch`, say `torchvision`

2) Are defined in torch but referenced through an import alias, like `import torch as th`
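The first failure mode can be reproduced with a plain string-prefix check. The sketch below contrasts it with a comparison on the top-level package name; both function names are hypothetical, and this is not necessarily how the PR implements the check.

```python
def naive_is_torch_class(cls: type) -> bool:
    """Prefix check: also matches `torchvision`, `torchaudio`, and so on."""
    return cls.__module__.startswith("torch")

def is_torch_class(cls: type) -> bool:
    """Compare the top-level package name exactly."""
    top_level_package = cls.__module__.split(".")[0]
    return top_level_package == "torch"

# Stand-in classes with hand-set module names, so the sketch runs without PyTorch.
VisionModel = type("VisionModel", (), {"__module__": "torchvision.models"})
TorchLinear = type("TorchLinear", (), {"__module__": "torch.nn.modules.linear"})
```

Because the check reads the class's `__module__` attribute rather than the name used at the call site, an alias such as `import torch as th` does not change the result.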

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64083

Reviewed By: soulitzer

Differential Revision:

D30598369

Pulled By: gmagogsfm

fbshipit-source-id:

9d3a7135737b2339c9bd32195e4e69a9c07549d4

Harut Movsisyan [Fri, 27 Aug 2021 10:03:32 +0000 (03:03 -0700)]

[Static Runtime] Out version for fmod (#64046)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/64046

Test Plan:

Confirm out variant is used:

```

> //caffe2/benchmarks/static_runtime:static_runtime_cpptest -- --v=1

V0826 23:31:30.321382 193428 impl.cpp:1395] Switch to out variant for node: %4 : Tensor = aten::fmod(%a.1, %b.1)

```

Reviewed By: mikeiovine

Differential Revision:

D30581228

fbshipit-source-id:

dfab9a16ff8afd40b29338037769f938f154bf74

Don Jang [Fri, 27 Aug 2021 09:43:22 +0000 (02:43 -0700)]

[Static Runtime] Manage temporary Tensors for aten::layer_norm (#64078)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64078

This change converts `aten::layer_norm -> output Tensor` to `static_runtime::layer_norm -> (output Tensor, tmp1 Tensor, tmp2 Tensor)` so that the `tmp1` and `tmp2` Tensors are managed by the static runtime.

Currently the out-variant of `aten::layer_norm` creates two temporary Tensors inside it:

```

at::Tensor mean = create_empty_from({M}, *X);

at::Tensor rstd = create_empty_from({M}, *X);

```

that the static runtime misses an opportunity to manage.

This change puts them into (unused) output Tensors of a new placeholder op `static_runtime::layer_norm` so that the static runtime can manage them, since the static runtime currently chooses to manage only output tensors.

Test Plan:

- Enhanced `StaticRuntime.LayerNorm` to ensure that `static_runtime::layer_norm` gets activated.

- Confirmed that the new op gets activated during testing:

```

V0825 12:51:50.017890 2265227 impl.cpp:1396] Switch to out variant for node: %8 : Tensor, %9 : Tensor, %10 : Tensor = static_runtime::layer_norm(%input.1, %normalized_shape.1, %4, %4, %5, %3)

```

Reviewed By: hlu1

Differential Revision:

D30486475

fbshipit-source-id:

5121c44ab58c2d8a954aa0bbd9dfeb7468347a2d

Hao Lu [Fri, 27 Aug 2021 08:39:14 +0000 (01:39 -0700)]

[Static Runtime] Use F14FastMap/F14FastSet (#63999)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63999

Use folly::F14FastMap/F14FastSet instead of std::unordered_map/unordered_set in the Static Runtime code base. folly::F14FastMap/F14FastSet implement the same APIs as std::unordered_map/unordered_set but are faster. For details see https://github.com/facebook/folly/blob/master/folly/container/F14.md

Reviewed By: d1jang

Differential Revision:

D30566149

fbshipit-source-id:

20a7fa2519e4dde96fb3fc61ef6c92bf6d759383

Ansha Yu [Fri, 27 Aug 2021 06:17:42 +0000 (23:17 -0700)]

[static runtime] port c2 argmin kernel (#63632)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63632

Local benchmarking with 1 input repeated for 10k iterations on the 290331537_4 local net. Reduces argmin runtime by about 80% and local net execution by about 0.71-0.77 ms.

Before:

```

I0826 17:25:53.972786 1104614 PyTorchPredictorBenchLib.cpp:313] PyTorch run finished. Milliseconds per iter: 7.37599. Iters per second: 135.57

```

```

Static runtime ms per iter: 8.22086. Iters per second: 121.642

Time per node type:

4.13527 ms. 50.9157%. fb::sigrid_transforms_torch_bind (1 nodes, out variant)

0.868506 ms. 10.6935%. aten::argmin (1 nodes, out variant)

...

```

After:

```

I0826 17:17:54.165174 1064079 PyTorchPredictorBenchLib.cpp:313] PyTorch run finished. Milliseconds per iter: 6.66724. Iters per second: 149.987

```

```

Static runtime ms per iter: 7.68172. Iters per second: 130.179

Time per node type:

4.1452 ms. 54.0612%. fb::sigrid_transforms_torch_bind (1 nodes, out variant)

0.656778 ms. 8.56562%. fb::quantized_linear (8 nodes)

0.488229 ms. 6.36741%. static_runtime::to_copy (827 nodes, out variant)

0.372678 ms. 4.86042%. aten::argmin (1 nodes, out variant)

...

Time per node type:

3.39387 ms. 53.5467%. fb::sigrid_transforms_torch_bind (1 nodes, out variant)

0.636216 ms. 10.0379%. fb::quantized_linear (8 nodes, out variant)

0.410535 ms. 6.47721%. fb::clip_ranges_to_gather_to_offsets (304 nodes, out variant)

0.212721 ms. 3.3562%. fb::clip_ranges_gather_sigrid_hash_precompute_v3 (157 nodes, out variant)

0.173736 ms. 2.74111%. aten::matmul (1 nodes, out variant)

0.150514 ms. 2.37474%. aten::argmin (1 nodes, out variant)

```
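The headline numbers can be cross-checked against the log excerpts above. This is a back-of-the-envelope sketch: it assumes the last `aten::argmin` entry in the "After" listing is the final measurement, and it uses the PyTorchPredictorBenchLib per-iteration times for the end-to-end saving.

```python
# Per-node argmin time in milliseconds, copied from the listings above.
before_argmin_ms = 0.868506  # aten::argmin in the "Before" listing
after_argmin_ms = 0.150514   # last aten::argmin entry in the "After" listing
argmin_reduction = 1 - after_argmin_ms / before_argmin_ms

# End-to-end milliseconds per iteration reported by PyTorchPredictorBenchLib.
before_iter_ms = 7.37599
after_iter_ms = 6.66724
iter_saving_ms = before_iter_ms - after_iter_ms

print(f"argmin reduction: {argmin_reduction:.1%}")
print(f"per-iteration saving: {iter_saving_ms:.3f} ms")
```

Under those assumptions the argmin node time drops by a little over 80% and the per-iteration time by roughly 0.71 ms, in line with the summary.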

P447422384

Test Plan:

Test with local replayer sending traffic to `ansha_perf_test_0819.test`, and compare outputs to jit interpreter.

Start compute tier:

```

RUN_UUID=ansha_perf_test_0819.test.storage JOB_EXPIRE_TIME=864000 MODEL_ID=290331537_4 PREDICTOR_TAG= PREDICTOR_VERSION=405 PREDICTOR_TYPE=CPU ADDITIONAL_FLAGS="--enable_disagg_file_split=true --enable_adx=false --load_remote_file_locally=true --pytorch_predictor_static_runtime_whitelist_by_id=290331537" GFLAGS_CONFIG_PATH=sigrid/predictor/gflags/predictor_gflags_ads_perf_cpu_pyper SMC_TIER_NAME=sigrid.predictor.perf.ansha_per_test_0819.test.storage CLUSTER=tsp_rva ENTITLEMENT_NAME=ads_ranking_infra_test_t6 PREDICTOR_LOCAL_DIRECTORY= ICET_CONFIG_PATH= NNPI_COMPILATION_CONFIG_FILE= NUM_TASKS=1 NNPI_NUM_WORKERS=0 tw job start /data/users/ansha/fbsource/fbcode/tupperware/config/admarket/sigrid/predictor/predictor_perf_canary.tw

```

Start nnpi tier:

```

RUN_UUID=ansha_perf_test_0819.test JOB_EXPIRE_TIME=247200 MODEL_ID=290331537_4 PREDICTOR_TAG= PREDICTOR_VERSION=343 PREDICTOR_TYPE=NNPI_TWSHARED ADDITIONAL_FLAGS="--torch_glow_min_fusion_group_size=30 --pytorch_storage_tier_replayer_sr_connection_options=overall_timeout:1000000,processing_timeout:1000000 --predictor_storage_smc_tier=sigrid.predictor.perf.ansha_perf_test_0819.test.storage --pytorch_predictor_static_runtime_whitelist_by_id=290331537" GFLAGS_CONFIG_PATH=sigrid/predictor/gflags/predictor_gflags_ads_perf_glow_nnpi_pyper_v1 SMC_TIER_NAME=sigrid.predictor.perf.ansha_perf_test_0819.test CLUSTER=tsp_rva ENTITLEMENT_NAME=ads_ranking_infra_test_t17 PREDICTOR_LOCAL_DIRECTORY= ICET_CONFIG_PATH= NNPI_COMPILATION_CONFIG_FILE= NUM_TASKS=1 NNPI_NUM_WORKERS=0 tw job start /data/users/ansha/fbsource/fbcode/tupperware/config/admarket/sigrid/predictor/predictor_perf_canary.tw

```

```buck test caffe2/benchmarks/static_runtime:static_runtime_cpptest -- StaticRuntime.IndividualOps_Argmin --print-passing-details```

Compared outputs to the jit interpreter to check that no differences are greater than 1e-3 (with nnc on): https://www.internalfb.com/intern/diff/view-version/136824794/

Reviewed By: hlu1

Differential Revision:

D30445635

fbshipit-source-id:

048de8867ac72f764132295d1ebfa843cde2fa27

Supriya Rao [Fri, 27 Aug 2021 04:05:56 +0000 (21:05 -0700)]

[quant] Add support for linear_relu fusion for FP16 dynamic quant (#63826)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63826

Support the conversion of the intrinsic linearRelu module to the quantized dynamic LinearReLU module

Verify the support works for both linear module and functional linear fusion

Test Plan:

python test/test_quantization.py test_dynamic_with_fusion

Imported from OSS

Reviewed By: iramazanli

Differential Revision:

D30503513

fbshipit-source-id:

70446797e9670dfef7341cba2047183d6f88b70f

Supriya Rao [Fri, 27 Aug 2021 04:05:56 +0000 (21:05 -0700)]

[quant] Add op support for linear_relu_dynamic_fp16 (#63824)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63824

Add a fused operator implementation that will work with the quantization fusion APIs.

Once FBGEMM FP16 kernel supports relu fusion natively we can remove the addition from the PT operator.

Test Plan:

python test/test_quantization.py

Imported from OSS

Reviewed By: heitorschueroff

Differential Revision:

D30503514

fbshipit-source-id:

6bf3bd53f47ffaa3f1d178eaad8cc980a7f5258a

Supriya Rao [Fri, 27 Aug 2021 04:05:56 +0000 (21:05 -0700)]

[quant] support linear_relu_dynamic for qnnpack backend (#63820)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63820

Adds support in the operator directly to call relu operator if relu fusion is enabled.

Once QNNPACK natively supports relu fusion in the linear_dynamic this can be removed
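The "call relu inside the operator" approach can be pictured with a small float-only sketch. It leaves out quantization entirely, and the function name `linear_dynamic` and its `fuse_relu` flag are hypothetical, not the actual operator signature.

```python
from typing import List, Optional, Sequence

def linear_dynamic(
    x: Sequence[float],
    weight: Sequence[Sequence[float]],
    bias: Optional[Sequence[float]] = None,
    fuse_relu: bool = False,
) -> List[float]:
    """Compute weight @ x + bias, optionally applying ReLU before returning."""
    out = []
    for row_index, row in enumerate(weight):
        acc = sum(w * v for w, v in zip(row, x))
        if bias is not None:
            acc += bias[row_index]
        if fuse_relu:
            # Stand-in for calling the relu operator on the linear output.
            acc = max(acc, 0.0)
        out.append(acc)
    return out

x = [1.0, -2.0]
weight = [[1.0, 1.0], [2.0, 0.0]]
bias = [0.0, 1.0]
plain = linear_dynamic(x, weight, bias)
fused = linear_dynamic(x, weight, bias, fuse_relu=True)
```

Once the backend kernel can apply the activation itself, the extra step inside the operator becomes redundant, which is why the commit describes it as removable.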

Test Plan:

python test/test_quantization.py TestDynamicQuantizedLinear.test_qlinear

Imported from OSS

Reviewed By: vkuzo

Differential Revision:

D30502813

fbshipit-source-id:

3352ee5f73e482b6d1941f389d720a461b84ba23

Supriya Rao [Fri, 27 Aug 2021 04:05:56 +0000 (21:05 -0700)]

[quant][fx] Add support for dynamic linear + relu fusion (INT8) (#63799)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63799

Add a new module that can be used for module swap with the nni.LinearReLU module in convert function.

Supports INT8 currently (since FP16 op doesn't have relu fusion yet).

Fixes #55393

Test Plan:

python test/test_quantization.py test_dynamic_fusion

Imported from OSS

Reviewed By: heitorschueroff

Differential Revision:

D30502812

fbshipit-source-id:

3668e4f001a0626d469e17ac323acf582ee28a51

Michael Suo [Fri, 27 Aug 2021 03:54:54 +0000 (20:54 -0700)]

[torch/deploy] add torch.distributed to build (#63918)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63918

Previously we were building with `USE_DISTRIBUTED` off, because c10d was built as a separate library for historical reasons. Since then, lw has merged the c10d build into libtorch, so this is fairly easy to turn on.

Differential Revision:

D30492442

**NOTE FOR REVIEWERS**: This PR has internal Facebook specific changes or comments, please review them on [Phabricator](https://our.intern.facebook.com/intern/diff/D30492442/)!

Test Plan: added a unit test

Reviewed By: wconstab

Pulled By: suo

fbshipit-source-id:

843b8fcf349a72a7f6fcbd1fcc8961268690fb8c

Can Balioglu [Fri, 27 Aug 2021 03:16:10 +0000 (20:16 -0700)]

Introduce the torchrun entrypoint (#64049)

Summary:

This PR introduces a new `torchrun` entrypoint that simply "points" to `python -m torch.distributed.run`. It is shorter and less error-prone to type and gives a nicer syntax than a rather cryptic `python -m ...` command line. Along with the new entrypoint the documentation is also updated, and places where `torch.distributed.run` is mentioned are replaced with `torchrun`.
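One common way for a command to "point" at a module is a `console_scripts` entry point of the form `name = package.module:function`. Whether `torchrun` is wired up exactly this way is an assumption here, as is the spec string mentioned in the comment below. The sketch shows how such a spec resolves to a callable, using a standard-library target so it runs without PyTorch.

```python
import importlib
from typing import Callable

def resolve_entry_point(spec: str) -> Callable:
    """Resolve a 'package.module:attribute' spec to the object it names."""
    module_name, _, attribute = spec.partition(":")
    module = importlib.import_module(module_name)
    return getattr(module, attribute)

# For torchrun the spec would presumably look like "torch.distributed.run:main"
# (assumption). "json:dumps" is a stand-in that exists in every Python install.
dumps = resolve_entry_point("json:dumps")
encoded = dumps({"nproc_per_node": 2})
```

The generated launcher script does little more than this lookup followed by a call, which is why the short command and the `python -m ...` form behave the same.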

cc pietern mrshenli pritamdamania87 zhaojuanmao satgera rohan-varma gqchen aazzolini osalpekar jiayisuse agolynski SciPioneer H-Huang mrzzd cbalioglu gcramer23

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64049

Reviewed By: cbalioglu

Differential Revision:

D30584041

Pulled By: kiukchung

fbshipit-source-id:

d99db3b5d12e7bf9676bab70e680d4b88031ae2d

nikithamalgi [Fri, 27 Aug 2021 01:54:51 +0000 (18:54 -0700)]

Merge script and _script_pdt API (#62420)

Summary:

Merge the `torch.jit.script` and `torch.jit._script_pdt` APIs. This PR merges profile-directed typing with the script API.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62420

Reviewed By: iramazanli

Differential Revision:

D30579015

Pulled By: nikithamalgifb

fbshipit-source-id:

99ba6839d235d61b2dd0144b466b2063a53ccece

Maksim Levental [Fri, 27 Aug 2021 00:59:59 +0000 (17:59 -0700)]

port glu to use structured kernel approach (#61800)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61800

Resubmitting because the [last one](https://github.com/pytorch/pytorch/pull/61433) was unrecoverable due to changes made incorrectly in the stack.

Test Plan: Imported from OSS

Reviewed By: iramazanli

Differential Revision:

D29812492

Pulled By: makslevental

fbshipit-source-id:

c3dfeacd1e00a526e24fbaab02dad48069d690ef

Jane Xu [Fri, 27 Aug 2021 00:36:56 +0000 (17:36 -0700)]

Run through failures on trunk (#64063)

Summary:

This PR runs all the tests on trunk instead of stopping on first failure.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64063

Reviewed By: malfet, seemethere

Differential Revision:

D30592020

Pulled By: janeyx99

fbshipit-source-id:

318b225cdf918a98f73e752d1cc0227d9227f36c

Paul Johnson [Fri, 27 Aug 2021 00:28:35 +0000 (17:28 -0700)]

[pytorch] add per_sample_weights support for embedding_bag_4bit_rowwise_offsets (#63605)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63605

Reviewed By: houseroad

Differential Revision:

D30434664

fbshipit-source-id:

eb4cbae3c705f9dec5c073a56f0f23daee353bc1

Michael Dagitses [Fri, 27 Aug 2021 00:26:52 +0000 (17:26 -0700)]

document that `torch.triangular_solve` has optional out= parameter (#63253)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63253

Fixes #57955

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision:

D30312134

Pulled By: dagitses

fbshipit-source-id:

4f484620f5754f4324a99bbac1ff783c64cee6b8

Jiewen Tan [Thu, 26 Aug 2021 23:49:13 +0000 (16:49 -0700)]

Enable test_api IMethodTest in OSS (#63345)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63345

This diff did the following few things to enable the tests:

1. Exposed IMethod as TORCH_API.

2. Linked torch_deploy to test_api if USE_DEPLOY == 1.

3. Generated torch::deploy examples when building torch_deploy library.

Test Plan: ./build/bin/test_api --gtest_filter=IMethodTest.*

Reviewed By: ngimel

Differential Revision:

D30346257

Pulled By: alanwaketan

fbshipit-source-id:

932ae7d45790dfb6e00c51893933a054a0fad86d

Don Jang [Thu, 26 Aug 2021 23:28:35 +0000 (16:28 -0700)]

[Static Runtime] Remove unnecessary fb::equally_split nodes (#64022)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/64022

Test Plan: - Added unittest `StaticRuntime.RemoveEquallySplitListUnpack`.

Reviewed By: hlu1

Differential Revision:

D30472189

fbshipit-source-id:

36040b0146f4be9d0d0fda293f7205f43aad0b87

Shijun Kong [Thu, 26 Aug 2021 23:06:17 +0000 (16:06 -0700)]

[pytorch][quant][oss] Support 2-bit embedding_bag op "embedding_bag_2bit_rowwise_offsets" (#63658)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63658

Support 2-bit embedding_bag op "embedding_bag_2bit_rowwise_offsets"

Reviewed By: jingsh, supriyar

Differential Revision:

D30454994

fbshipit-source-id:

7aa7bfe405c2ffff639d5658a35181036e162dc9

soulitzer [Thu, 26 Aug 2021 23:00:21 +0000 (16:00 -0700)]

Move variabletype functions around (#63330)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63330

- This is in preparation for templated/boxed autograd-not-implemented fallback

- Make sure VariableTypeUtils does not depend on generated code

- Lift `isFwGradDefined` into `autograd/functions/utils.cpp` so it's available to mobile builds

- Removes `using namespace at` from VariableTypeUtils. Previously we needed this for the templated version; now it's not strictly necessary, but it is still a good change that avoids name conflicts if this header is included elsewhere in the future.

Test Plan: Imported from OSS

Reviewed By: heitorschueroff

Differential Revision:

D30518573

Pulled By: soulitzer

fbshipit-source-id:

a0fb904baafc9713de609fffec4b813f6cfcc000

Bo Wang [Thu, 26 Aug 2021 23:00:16 +0000 (16:00 -0700)]

More sharded_tensor creation ops: sharded_tensor.zeros, sharded_tensor.full, sharded_tensor.rand (#63732)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63732

Test Plan:

$ python test/distributed/_sharded_tensor/test_sharded_tensor.py --v

$ python test/distributed/_sharded_tensor/test_sharded_tensor.py TestCreateTensorFromParams --v

$ python test/distributed/_sharded_tensor/test_sharded_tensor.py TestShardedTensorChunked --v

Imported from OSS

Differential Revision:

D30472621

Reviewed By: pritamdamania87

Pulled By: bowangbj

fbshipit-source-id:

fd8ebf9b815fdc292ad1aad521f9f4f454163d0e

Jane Xu [Thu, 26 Aug 2021 22:42:00 +0000 (15:42 -0700)]

Add shard number to print_test_stats.py upload name (#64055)

Summary:

Now that the render test results job is gone, each shard on GHA is uploading a JSON test stats report. To ensure differentiation, this PR includes the shard number in the report name.
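The collision this avoids is easy to picture. The naming scheme below is purely hypothetical (the real format used by `print_test_stats.py` is not shown in this log); it only illustrates why a per-shard component keeps the uploads distinct.

```python
def report_name(job_name: str, shard: int, num_shards: int) -> str:
    """Build a report file name that is unique per shard (hypothetical format)."""
    return f"{job_name}-shard-{shard}-of-{num_shards}.json"

shards = [("linux-test", 1), ("linux-test", 2)]

# Without the shard number both shards would upload under the same name.
names_without_shard = {f"{job}.json" for job, _ in shards}
names_with_shard = {report_name(job, shard, 2) for job, shard in shards}
```

Two shards of the same job collapse to one name in the first set and stay separate in the second.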

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64055

Reviewed By: iramazanli

Differential Revision:

D30586869

Pulled By: janeyx99

fbshipit-source-id:

fd19f347131deec51486bb0795e4e13ac19bc71a

MengeTM [Thu, 26 Aug 2021 22:32:06 +0000 (15:32 -0700)]

Derivatives of relu (#63027) (#63089)

Summary:

Optimization of relu and leaky_relu derivatives for reduction of VRAM needed for the backward-passes
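One way such a saving can come about is to express the derivative in terms of the forward output, so that the input does not have to be kept alive for the backward pass. That this is the approach taken is an assumption, not something stated above. The plain-Python sketch below, with hypothetical helper names, only checks that both formulations give the same gradient.

```python
from typing import List

def relu(xs: List[float]) -> List[float]:
    return [max(x, 0.0) for x in xs]

def leaky_relu(xs: List[float], slope: float) -> List[float]:
    return [x if x > 0 else slope * x for x in xs]

def grad_from_mask(grad_out: List[float], ref: List[float], slope: float = 0.0) -> List[float]:
    """Pass the gradient where `ref` is positive, scale it by `slope` elsewhere."""
    return [g if r > 0 else slope * g for g, r in zip(grad_out, ref)]

xs = [-2.0, -0.5, 0.0, 0.5, 2.0]
grad_out = [1.0, 2.0, 3.0, 4.0, 5.0]

# relu: the mask from the output equals the mask from the input.
relu_grad_from_input = grad_from_mask(grad_out, xs)
relu_grad_from_output = grad_from_mask(grad_out, relu(xs))

# leaky_relu with a positive slope preserves the sign of the input as well.
slope = 0.1
leaky_grad_from_input = grad_from_mask(grad_out, xs, slope)
leaky_grad_from_output = grad_from_mask(grad_out, leaky_relu(xs, slope), slope)
```

The equivalence for `leaky_relu` relies on the slope being positive; with a negative slope the output no longer has the same sign as the input.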

Fixes https://github.com/pytorch/pytorch/issues/63027

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63089

Reviewed By: iramazanli

Differential Revision:

D30582049

Pulled By: albanD

fbshipit-source-id:

a9481fe8c10cbfe2db485e28ce80cabfef501eb8

Facebook Community Bot [Thu, 26 Aug 2021 22:18:37 +0000 (15:18 -0700)]

Automated submodule update: FBGEMM (#62879)

Summary:

This is an automated pull request to update the first-party submodule for [pytorch/FBGEMM](https://github.com/pytorch/FBGEMM).

New submodule commit: https://github.com/pytorch/FBGEMM/commit/ce5470385723b0262b47250d6af05f1b734e4509

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62879

Test Plan: Ensure that CI jobs succeed on GitHub before landing.

Reviewed By: jspark1105

Differential Revision:

D30154801

fbshipit-source-id:

b2ce185da6f6cadf5128f82b15097d9e13e9e6a0

Mike Iovine [Thu, 26 Aug 2021 21:09:10 +0000 (14:09 -0700)]

[JIT] UseVariadicOp takes list_idx parameter (#63915)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63915

Previously, this function only worked for variadic op substitutions of the form `op(list, args) -> variadic_op(list_1, ..., list_n, args)`. This change allows for transformations of the form `op(args_0, list, args_1) -> variadic_op(args_0, list_1, ..., list_n, args_1)`.
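The effect of `list_idx` on the argument layout can be sketched on plain Python lists. The real pass rewrites graph nodes; the helper `to_variadic_args` below is a hypothetical illustration of the argument reshuffling only.

```python
from typing import Any, List, Sequence

def to_variadic_args(args: Sequence[Any], list_idx: int = 0) -> List[Any]:
    """Splice the list argument at `list_idx` into the surrounding arguments.

    op(args_0, [l_1, ..., l_n], args_1) -> variadic_op(args_0, l_1, ..., l_n, args_1)
    """
    list_arg = args[list_idx]
    if not isinstance(list_arg, (list, tuple)):
        raise TypeError(f"argument {list_idx} is not a list")
    return [*args[:list_idx], *list_arg, *args[list_idx + 1:]]

# Old behavior: the list has to be the first argument (list_idx == 0).
leading = to_variadic_args([["a", "b", "c"], 0])

# New behavior: the list may sit between other arguments.
middle = to_variadic_args(["x", ["a", "b"], "y"], list_idx=1)
```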

Test Plan:

`buck test caffe2/test/cpp/jit:jit -- Stack Concat`

(tests exercising `list_idx != 0` will be added further up in this diff stack)

Reviewed By: navahgar

Differential Revision:

D30529729

fbshipit-source-id:

568080679c3b40bdaedee56bef2e8a5ce7985d2f

Can Balioglu [Thu, 26 Aug 2021 20:55:08 +0000 (13:55 -0700)]

[torch/elastic] Pretty print the failure message captured by @record (#64036)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64036

This PR slightly revises the implementation of the internal `_format_failure()` method in order to pretty print the error message captured in a subprocess by the `record` annotation.

With this PR a failure log is formatted as below:

```

Root Cause:

[0]:

time: 2021-08-26_17:12:07

rank: 0 (local_rank: 0)

exitcode: 1 (pid: 8045)

error_file: /tmp/torchelastic_6cj9eppm/6d9d844a-6ce4-4838-93ed-1639a9525b00_rec9kuv3/attempt_0/0/error.json

msg:

{

"message": "ValueError: Test",

"extraInfo": {

"py_callstack": [

" File \"/data/home/balioglu/fail.py\", line 7, in <module>\n main()\n",

" File \"/fsx/users/balioglu/repos/pytorch/torch/distributed/elastic/multiprocessing/errors/__init__.py\", line 373, in wrapper\n error_handler.record_exception(e)\n",

" File \"/fsx/users/balioglu/repos/pytorch/torch/distributed/elastic/multiprocessing/errors/error_handler.py\", line 86, in record_exception\n _write_error(e, self._get_error_file_path())\n",

" File \"/fsx/users/balioglu/repos/pytorch/torch/distributed/elastic/multiprocessing/errors/error_handler.py\", line 26, in _write_error\n \"py_callstack\": traceback.format_stack(),\n"

],

"timestamp": "1629997927"

}

}

```

in contrast to the old formatting:

```

Root Cause:

[0]:

time: 2021-08-26_17:15:50

rank: 0 (local_rank: 0)

exitcode: 1 (pid: 9417)

error_file: /tmp/torchelastic_22pwarnq/19f22638-848c-4b8f-8379-677f34fc44e7_u43o9vs7/attempt_0/0/error.json

msg: "{'message': 'ValueError: Test', 'extraInfo': {'py_callstack': 'Traceback (most recent call last):\n File "/fsx/users/balioglu/repos/pytorch/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 351, in wrapper\n return f(*args, **kwargs)\n File "/data/home/balioglu/fail.py", line 5, in main\n raise ValueError("BALIOGLU")\nValueError: BALIOGLU\n', 'timestamp': '1629998150'}}"

```
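The difference between the two layouts comes down to re-serializing the captured message with indentation instead of printing it on one line. The sketch below shows that idea with the standard library only; `format_msg` and `format_failure` are hypothetical helpers, not the actual `_format_failure()` implementation.

```python
import json
import textwrap

def format_msg(raw_msg: str, indent: int = 2) -> str:
    """Pretty print `raw_msg` if it is valid JSON, otherwise return it unchanged."""
    try:
        parsed = json.loads(raw_msg)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return raw_msg
    return json.dumps(parsed, indent=indent)

def format_failure(rank: int, exitcode: int, raw_msg: str) -> str:
    """Lay out a small failure report with the message indented under `msg:`."""
    body = textwrap.indent(format_msg(raw_msg), "    ")
    return f"[{rank}]:\n  exitcode: {exitcode}\n  msg:\n{body}"

pretty = format_msg('{"message": "ValueError: Test"}')
report = format_failure(0, 1, '{"message": "ValueError: Test"}')
```

Falling back to the raw string keeps the report usable when the error file contains something other than JSON.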

ghstack-source-id:

136761768

Test Plan: Run the existing unit tests.

Reviewed By: kiukchung

Differential Revision:

D30579025

fbshipit-source-id:

37df0b7c7ec9b620355766122986c2c77e8495ae

Ilqar Ramazanli [Thu, 26 Aug 2021 20:29:03 +0000 (13:29 -0700)]

To add Chained Scheduler to the list of PyTorch schedulers. (#63491)

Summary:

In this PR we are introducing ChainedScheduler, which was initially proposed in the discussion https://github.com/pytorch/pytorch/pull/26423#discussion_r329976246 .

The idea is to provide a user-friendly chaining method for schedulers, especially for cases where many of them are involved and we want a clean, easy-to-read interface. This method will be even more crucial once CompositeSchedulers and schedulers for different types of parameters are involved.

The immediate application of ChainedScheduler is expected to be in the TorchVision library, to combine WarmUpLR and MultiStepLR https://github.com/pytorch/vision/blob/master/references/video_classification/scheduler.py#L5 . However, the method should apply to many other use cases as well.

### Example

The usage is as simple as below:

```python
sched = ChainedScheduler([
    ExponentialLR(self.opt, gamma=0.9),
    WarmUpLR(self.opt, warmup_factor=0.2, warmup_iters=4, warmup_method="constant"),
    StepLR(self.opt, gamma=0.1, step_size=3),
])
```

Then calling

```python

sched.step()

```

would trigger the step function of all three schedulers consecutively.
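The chaining behavior itself is small enough to sketch without PyTorch. The classes below are hypothetical stand-ins: `MultiplyLR` plays the role of any scheduler that exposes `step()`, and `ChainedSketch` shows only the fan-out, not the real `ChainedScheduler` class.

```python
from typing import Dict, Iterable, List

class MultiplyLR:
    """Toy scheduler: multiplies the shared learning rate by `gamma` on each step."""

    def __init__(self, state: Dict[str, float], gamma: float) -> None:
        self.state = state
        self.gamma = gamma

    def step(self) -> None:
        self.state["lr"] *= self.gamma

class ChainedSketch:
    """Calls `step()` on every wrapped scheduler, in the order given."""

    def __init__(self, schedulers: Iterable[MultiplyLR]) -> None:
        self.schedulers: List[MultiplyLR] = list(schedulers)

    def step(self) -> None:
        for scheduler in self.schedulers:
            scheduler.step()

state = {"lr": 1.0}
chained = ChainedSketch([MultiplyLR(state, 0.5), MultiplyLR(state, 0.1)])
chained.step()  # both schedulers act on the same learning rate
```

Because every wrapped scheduler modifies the same underlying value, one call to `step()` composes their effects.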

Partially resolves https://github.com/pytorch/vision/issues/4281

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63491

Reviewed By: datumbox, mruberry

Differential Revision:

D30576180

Pulled By: iramazanli

fbshipit-source-id:

b43f0749f55faab25079641b7d91c21a891a87e4

Shiyan Deng [Thu, 26 Aug 2021 20:06:46 +0000 (13:06 -0700)]

[fx_acc] [fx2trt] add acc op mapper for argmin and converter for topk (#63823)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63823

Add mapper for `torch.argmin` which maps it to `acc_ops.flatten` (optional) + `acc_ops.topk` + `acc_ops.getitem` + `acc_ops.squeeze` (optional). This diff doesn't allow mapping if `dim=None && keepdim=True` in `torch.argmin`.
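The decomposition can be mimicked on plain Python lists to show why it is equivalent to an argmin. The helpers below are hypothetical and only mirror the sequence flatten (optional), topk with k=1 on the smallest values, getitem on the indices, and squeeze.

```python
from typing import List, Sequence, Tuple

def topk(values: Sequence[float], k: int, largest: bool = True) -> Tuple[List[float], List[int]]:
    """Return the k largest (or smallest) values and their indices."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=largest)[:k]
    return [values[i] for i in order], order

def argmin_via_topk(values: Sequence[float]) -> int:
    result = topk(values, k=1, largest=False)  # topk: smallest element
    indices = result[1]                        # getitem: keep the indices output
    return indices[0]                          # squeeze: drop the size-1 dimension

def argmin_flattened(rows: Sequence[Sequence[float]]) -> int:
    flat = [v for row in rows for v in row]    # flatten: the dim=None case
    return argmin_via_topk(flat)

index_1d = argmin_via_topk([3.0, 1.0, 2.0])
index_2d = argmin_flattened([[4.0, 5.0], [0.5, 6.0]])
```

In the flattened case the result is an index into the flattened input, as in the `dim=None` case.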

Add fx2trt converter for `acc_ops.topk`.

Test Plan:

buck test mode/opt glow/fb/fx/oss_acc_tracer:test_acc_tracer -- test_argmin

buck run mode/opt caffe2/torch/fb/fx2trt:test_topk

Reviewed By: jfix71

Differential Revision:

D30501771

fbshipit-source-id:

0babc45e69bac5e61ff0b9b4dfb98940398e3e57

Don Jang [Thu, 26 Aug 2021 19:58:05 +0000 (12:58 -0700)]

[Static Runtime] Add native op for aten::expand_as (#64024)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64024

`aten::expand_as` creates a view of the input tensor. This change adds its native op implementation for the static runtime.

Test Plan: - Added `StaticRuntime.IndividualOps_ExpandAs`

Reviewed By: hlu1

Differential Revision:

D30546851

fbshipit-source-id:

e53483048af890bc41b6192a1ab0c5ba0ee2bdc0

Meghan Lele [Thu, 26 Aug 2021 19:48:01 +0000 (12:48 -0700)]

Back out "[ONNX] Fix an issue that optimizations might adjust graph inputs unexpectedly. (#61280)" (#64004)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64004

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63904

Fixes T98808160

Test Plan: T98808160

Reviewed By: msaroufim

Differential Revision:

D30527450

fbshipit-source-id:

6262901a78ca929cecda1cf740893139aa26f1b4

Ansley Ussery [Thu, 26 Aug 2021 19:14:32 +0000 (12:14 -0700)]

Allow uncompiled strings as input to `checkScriptRaisesRegex` (#63901)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63901

cc gmagogsfm

Test Plan: Imported from OSS

Reviewed By: gmagogsfm

Differential Revision:

D30579472

Pulled By: ansley

fbshipit-source-id:

59ee09c1f25278d4f6e51f626588251bd095c6ea

Luca Wehrstedt [Thu, 26 Aug 2021 19:08:00 +0000 (12:08 -0700)]

Leverage TensorPipe's automatic SHM address selection (#63028)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63028

Until now, TensorPipe required PyTorch to come up with and provide a unique identifier to use as the address for the UNIX domain socket used in the SHM transport. However, the Linux kernel can automatically assign an available address (as it does with IP ports), and TensorPipe now supports this, so we can remove that now-unnecessary PyTorch logic.

Test Plan: CI

Reviewed By: mrshenli

Differential Revision:

D30220352

fbshipit-source-id:

78e8a6ef5916b2a72df26cdc9cd367b9d083e821

Erjia Guan [Thu, 26 Aug 2021 17:21:48 +0000 (10:21 -0700)]

Rename IterableAsDataPipe to IterableWrapper (#63981)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63981

Rename `IterableAsDataPipe` to `IterableWrapper` based on our naming convention `Op-er`

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision:

D30554197

Pulled By: ejguan

fbshipit-source-id:

c2eacb20df5645d83ca165d6a1591f7e4791990f

Cheng Chang [Thu, 26 Aug 2021 16:52:42 +0000 (09:52 -0700)]

[NNC] Add C++ codegen backend to NNC (#62869)

Summary:

Adds a C++ codegen backend to NNC to generate C++ for CPU instead of generating LLVM IR.

Tensors are represented as blobs of float. Vector operations are devectorized/unrolled.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62869

Test Plan:

https://github.com/pytorch/pytorch/tree/mvz-nnc-aot-prototype makes it able to AOT compile the whole MobileNetV3 model into binary code through LLVM codegen in NNC.

I forked that branch to https://github.com/cheng-chang/pytorch/tree/cc-aot-cpp, merged this PR into it, and modified `fancy_compile` to compile MobileNetV3 into C++ through

```

import torch

m = torch.jit.load('mobnet.pt')

m.eval()

f = torch.jit.freeze(m)

torch._C._fancy_compile(f.graph, [1, 3, 224, 224])

```

The generated C++ file `mobnet.cc` can be found at https://gist.github.com/cheng-chang/e2830cc6920b39204ebf368035b2bcec.

I manually compiled the generated C++ through `g++ -o mobnet -std=c++14 -L./build/lib -ltorch_cpu -ltorch mobnet.cc`, and it succeeded.

Reviewed By: ZolotukhinM

Differential Revision:

D30149482

Pulled By: cheng-chang

fbshipit-source-id:

e77b189f0353e37cd309423a48a513e668d07675

Raghavan Raman [Thu, 26 Aug 2021 16:49:44 +0000 (09:49 -0700)]

[nnc] Sanitized the names of constants in the input graph. (#63990)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63923

The input graph can contain constants whose names contain special characters. So, all names of constants in the input graph need to be sanitized.
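As an illustration of what such sanitization might look like, here is a small sketch. The exact rule used by NNC is not stated in this message, so the choices below (replace every character outside `[0-9A-Za-z_]` with `_`, and prefix `v` when the result would start with a digit) are assumptions, and `sanitize_name` is a hypothetical helper.

```python
import re


def sanitize_name(name):
    """Map an arbitrary graph name to a valid C-style identifier (sketch)."""
    # Assumption: anything that is not a letter, digit, or underscore becomes "_".
    cleaned = re.sub(r"[^0-9A-Za-z_]", "_", name)
    # Assumption: identifiers must not be empty or start with a digit.
    if not cleaned or cleaned[0].isdigit():
        cleaned = "v" + cleaned
    return cleaned


examples = {name: sanitize_name(name) for name in ["self.conv1.weight", "1x", "a-b:c"]}
```

Note that a scheme like this can map two distinct names to the same identifier (for example `a.b` and `a_b`), so a real implementation would also need to keep the resulting names unique.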

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63990

Reviewed By: ZolotukhinM

Differential Revision:

D30558432

Pulled By: navahgar

fbshipit-source-id:

de5b0c23d50ee8997f40f2c0fc605dda3719186f

Bert Maher [Thu, 26 Aug 2021 16:41:58 +0000 (09:41 -0700)]

[nnc] Fix dtype promotion involving scalars (#64002)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64002

Fixes https://github.com/pytorch/vision/issues/4315

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision:

D30566979

Pulled By: bertmaher

fbshipit-source-id:

eaa98b9534a926be7fcd337d46c5a0acb3243179

Jane Xu [Thu, 26 Aug 2021 16:27:47 +0000 (09:27 -0700)]

run_test.py: add option to run only core tests (#63976)

Summary:

This is in response to a feature request from some folks in the core team to have a local command that would only run relevant "core" tests. The idea is to give developers a smoke test option to run locally before making a PR, in order to verify that their changes did not break core functionality. These smoke tests are meant to be relevant rather than short.

This PR enables that by allowing developers to run `python test/run_test.py --core` or `python test/run_test.py -core` in order to run the CORE_TEST_LIST, which is currently test_nn.py, test_torch.py, and test_ops.py.
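A flag like this could be wired up roughly as follows. This is a sketch, not the code in `run_test.py`: `ALL_TESTS` and its extra entries, `build_parser`, and `select_tests` are hypothetical, and only the flag spellings and the three core test names come from the description above.

```python
import argparse

CORE_TEST_LIST = ["test_nn", "test_ops", "test_torch"]
# Hypothetical full list, only here so the sketch has something to filter.
ALL_TESTS = CORE_TEST_LIST + ["test_autograd", "test_jit"]


def build_parser():
    parser = argparse.ArgumentParser(description="Toy test runner")
    # Both spellings mentioned in the commit message map to the same boolean.
    parser.add_argument("-core", "--core", action="store_true",
                        help="only run the tests in CORE_TEST_LIST")
    return parser


def select_tests(argv):
    """Return the list of tests to run for the given command-line arguments."""
    options = build_parser().parse_args(argv)
    return list(CORE_TEST_LIST) if options.core else list(ALL_TESTS)


selected_long = select_tests(["--core"])
selected_short = select_tests(["-core"])
selected_default = select_tests([])
```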

I am not the best person to judge what should be considered "core", so please comment which tests should be included and/or excluded from the CORE_TEST_LIST!

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63976

Test Plan:

```

(pytorch) janeyx@janeyx-mbp test % python run_test.py --core -v

Selected tests: test_nn, test_ops, test_torch

Running test_nn ... [2021-08-25 14:48:28.865078]

Executing ['/Users/janeyx/miniconda3/envs/pytorch/bin/python', 'test_nn.py', '-v'] ... [2021-08-25 14:48:28.865123]

test_to (__main__.PackedSequenceTest) ... ok

test_to_memory_format (__main__.PackedSequenceTest) ... ok

```

Reviewed By: walterddr

Differential Revision:

D30575560

Pulled By: janeyx99

fbshipit-source-id:

3f151982c1e315e50e60cb0d818adaea34556a04

Don Jang [Thu, 26 Aug 2021 15:08:53 +0000 (08:08 -0700)]

[Static Runtime] Disable out variant of aten::clone (#63980)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63980

The out variant implementation of `aten::clone` causes a crash, which needs further investigation. This change disables it until the problem gets fixed.

Note that `inline_cvr` doesn't use `aten::clone` as of now, so no perf implication: https://www.internalfb.com/phabricator/paste/view/P446858755?lines=121

Test Plan: N/A

Reviewed By: hlu1

Differential Revision:

D30544149

fbshipit-source-id:

facb334d67473f622b36862fbdb2633358556fdf

Rong Rong (AI Infra) [Thu, 26 Aug 2021 15:00:48 +0000 (08:00 -0700)]

[CI] move distributed test into its own CI job (#62896)

Summary:

Moving distributed to its own job.

- [x] ensure there should be a distributed test job for every default test job matrix (on GHA)

- [x] ensure that circleci jobs work for distributed as well

- [x] waiting for test distributed to have its own run_test.py launch options, see https://github.com/pytorch/pytorch/issues/63147

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62896

Reviewed By: seemethere

Differential Revision:

D30230856

Pulled By: walterddr

fbshipit-source-id:

0cad620f6cd9e56c727c105458d76539a5ae976f

albanD [Thu, 26 Aug 2021 14:48:20 +0000 (07:48 -0700)]

remove special grad_mode tls handling (#63116)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63116

This PR removes the special flag to disable grad mode tracking on the ThreadLocalState and replaces it with an explicit setter that users can use.

This reduces the complexity of ThreadLocalState.

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision:

D30388098

Pulled By: albanD

fbshipit-source-id:

85641b3d711179fb78ff6a41ed077548dc821a2f

Heitor Schueroff [Thu, 26 Aug 2021 14:17:24 +0000 (07:17 -0700)]

Added API tests to ReductionOpInfo and ported amax/amin/nansum tests (#62899)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/62899

Test Plan: Imported from OSS

Reviewed By: mruberry

Differential Revision:

D30408816

Pulled By: heitorschueroff

fbshipit-source-id:

6cb0aa7fa7edba93549ef873baa2fb8a003bd91d

Edward Yang [Thu, 26 Aug 2021 13:58:12 +0000 (06:58 -0700)]

Deify opmath_t into its own header, align with accscalar_t (#63986)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63986

Fixes #63985

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision:

D30555996

Pulled By: ezyang

fbshipit-source-id:

b6e4d56a5658ed028ffc105cc4b479faa6882b65

Heitor Schueroff [Thu, 26 Aug 2021 13:05:28 +0000 (06:05 -0700)]

[OpInfo] Added ReductionOpInfo subclass of OpInfo and ported sum test (#62737)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62737

ReductionOpInfo is a specialization of OpInfo for reduction operators. For now, it is designed to work with reductions that return a single tensor and that reduce all elements along one or more dimensions to a single value. In particular this excludes operators such as `max` and `min` that return multiple tensors and `quantile` that can return multiple values.

fixes https://github.com/pytorch/pytorch/issues/49746

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision:

D30406568

Pulled By: heitorschueroff

fbshipit-source-id:

218b1da1902f67bcf4c3681e2a0f0029a25d51f1

Luca Wehrstedt [Thu, 26 Aug 2021 12:43:05 +0000 (05:43 -0700)]

Update TensorPipe submodule

Summary: The bot failed to do it.

Test Plan:

D30542677

Reviewed By: beauby

Differential Revision:

D30573500

fbshipit-source-id:

50abd6fc415cead0a6b6d9290fa0e5f97d0e4989

Michael Dagitses [Thu, 26 Aug 2021 11:42:36 +0000 (04:42 -0700)]

use `const auto&` as type for grad alias (#63949)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63949

This is an extension of the discussion in

https://github.com/pytorch/pytorch/pull/63040#discussion_r687793027.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision:

D30546789

Pulled By: dagitses

fbshipit-source-id:

3046aff4f129d5492d73dfb67717a824e16ffee8

Kefei Lu [Thu, 26 Aug 2021 07:51:53 +0000 (00:51 -0700)]

Add logging for _MinimizerBase

Summary: Add logging so we know which nodes are currently being visited

Test Plan: lint & SC tests

Reviewed By:

842974287

Differential Revision:

D30509865

fbshipit-source-id:

09e77e44c97c825242e0b24f90463b50f3ca19c6

Rohan Varma [Thu, 26 Aug 2021 06:48:58 +0000 (23:48 -0700)]

Fix issue re: DDP and create_graph=True (#63831)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63831

Closes https://github.com/pytorch/pytorch/issues/63812

`at::mul_out` is not supported when `grad` itself requires grad, which is useful for computing higher order derivatives.

In this case, fall back to a mul + copy instead of mul_out.

ghstack-source-id:

136614644

Test Plan: UT

Reviewed By: SciPioneer

Differential Revision:

D30505573

fbshipit-source-id:

83532b6207b3d80116fcc4dff0e5520d73b3454f

Marjan Fariborz [Thu, 26 Aug 2021 06:40:09 +0000 (23:40 -0700)]

Adding BFP16 quantization/dequantization support to OSS (#63059)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63059

Adds support for the BFP16 quantization method to OSS. Currently only CPU is supported.

ghstack-source-id:

136639528

Test Plan: Imported from OSS

Reviewed By: wanchaol

Differential Revision:

D30194538

fbshipit-source-id:

ac248567ad8028457c2a91b77ef2ce81709fce53

Kiuk Chung [Thu, 26 Aug 2021 05:56:33 +0000 (22:56 -0700)]

(torch.distributed) Add torch.distributed.is_torchelastic_launched() util method + make init_method=tcp:// compatible with torchelastic (#63910)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63910

Addresses the current issue that `init_method=tcp://` is not compatible with `torch.distributed.run` and `torch.distributed.launch`. When running with a training script that initializes the process group with `init_method=tcp://localhost:$port` as such:

```

$ python -u -m torch.distributed.run --max_restarts 0 --nproc_per_node 1 --nnodes 1 --master_addr $(hostname) --master_port 6000 ~/tmp/test.py

```

An `Address in use` error is raised since the training script tries to create a TCPStore on port 6000, which is already taken since the elastic agent is already running a TCPStore on that port.

For details see: https://github.com/pytorch/pytorch/issues/63874.

This change does a couple of things:

1. Adds `is_torchelastic_launched()` check function that users can use in the training scripts to see whether the script is launched via torchelastic.

1. Updates the `torch.distributed` docs page to include the new `is_torchelastic_launched()` function.

1. Makes `init_method=tcp://` torchelastic compatible by modifying `_tcp_rendezvous_handler` in `torch.distributed.rendezvous` (this is NOT the elastic rendezvous, it is the old rendezvous module which is slated for deprecation in future releases) to check `is_torchelastic_launched()` AND `torchelastic_use_agent_store()` and if so, only create TCPStore clients (no daemons, not even for rank 0).

1. Adds a bunch of unittests to cover the different code paths
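The decision described in the list above can be sketched without any networking. The environment variable names (`TORCHELASTIC_RUN_ID`, `TORCHELASTIC_USE_AGENT_STORE`) and the function bodies below are assumptions made for illustration, and `tcp_store_role` is a hypothetical helper; only the rule "when launched via torchelastic with the agent store, every rank is a client" comes from the text.

```python
import os


def is_torchelastic_launched(env=os.environ):
    # Assumption: the launcher exports a run id that the script can detect.
    return env.get("TORCHELASTIC_RUN_ID") is not None


def torchelastic_use_agent_store(env=os.environ):
    # Assumption: the launcher signals store reuse through this variable.
    return env.get("TORCHELASTIC_USE_AGENT_STORE") == "True"


def tcp_store_role(rank, env=os.environ):
    """Decide whether this rank hosts the TCPStore daemon or only connects to it."""
    if is_torchelastic_launched(env) and torchelastic_use_agent_store(env):
        # The elastic agent already runs the store on the master port,
        # so no rank (not even rank 0) may start a second daemon there.
        return "client"
    return "server" if rank == 0 else "client"


plain_env = {}
elastic_env = {"TORCHELASTIC_RUN_ID": "demo", "TORCHELASTIC_USE_AGENT_STORE": "True"}
roles_plain = [tcp_store_role(r, plain_env) for r in range(2)]
roles_elastic = [tcp_store_role(r, elastic_env) for r in range(2)]
```

Skipping the daemon on rank 0 is what avoids the `Address in use` error: the port is bound exactly once, by the agent.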

NOTE: the issue mentions that we should fail-fast with an assertion on `init_method!=env://` when `is_torchelastic_launched()` is `True`. There are three registered init_methods in pytorch: env://, tcp://, file://. Since this diff makes tcp:// compatible with torchelastic, and I've validated that file:// is compatible with torchelastic, there is no need to add assertions. I did update the docs to point out that env:// is the RECOMMENDED init_method. We should probably deprecate the other init_methods in the future but this is out of scope for this issue.

Test Plan: Unittests.

Reviewed By: cbalioglu

Differential Revision:

D30529984

fbshipit-source-id:

267aea6d4dad73eb14a2680ac921f210ff547cc5

Joseph Spisak [Thu, 26 Aug 2021 05:49:22 +0000 (22:49 -0700)]

Update persons_of_interest.rst (#63907)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63907

Reviewed By: jspisak

Differential Revision:

D30534972

Pulled By: dzhulgakov

fbshipit-source-id:

ba726fc53e292a362c387cc8b5f7776ca2a2544c

Philip Meier [Thu, 26 Aug 2021 05:04:44 +0000 (22:04 -0700)]

enable equal_nan for complex values in isclose (#63571)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63571

Test Plan: Imported from OSS

Reviewed By: malfet, ngimel

Differential Revision:

D30560127

Pulled By: mruberry

fbshipit-source-id:

8958121ca24e7c139d869607903aebbe87bc0740

nikithamalgi [Thu, 26 Aug 2021 04:47:50 +0000 (21:47 -0700)]

Clean up related to type refinements (#62444)

Summary:

Creates a helper function to refine the types into a TorchScript-compatible format in the monkeytype config for profile-directed typing.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62444

Reviewed By: malfet

Differential Revision:

D30548159

Pulled By: nikithamalgifb

fbshipit-source-id:

7c09ce5f5e043d069313b87112837d7e226ade1f

Zeina Migeed [Thu, 26 Aug 2021 03:42:14 +0000 (20:42 -0700)]

inference for algebraic expressions (#63822)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63822

Infer algebraic expressions and add them to our symbolic inferencer. Works for conv2D and can be extended to other operations.

Test Plan: Imported from OSS

Reviewed By: jamesr66a

Differential Revision:

D30518469

Pulled By: migeed-z

fbshipit-source-id:

b92dfa40b2d834a535177da42b851701b8f7178c

Zafar Takhirov [Thu, 26 Aug 2021 03:37:56 +0000 (20:37 -0700)]

[quant] Fixing the conversion of the quantizable RNN (#63879)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63879

The quantizable RNN had a bug: `from_observed` was an instance method instead of a class method, which caused `tq.convert` to fail. This fixes the issue by making `from_observed` a classmethod.

The tests were passing before because the unittests were not using the custom module path, but a conventional `from_float`, which is also supported.
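The failure mode is easy to reproduce in plain Python. The classes below are hypothetical stand-ins, not the quantizable RNN modules, and `convert` only mimics a converter that knows the target class but has no instance of it.

```python
class Observed:
    """Stand-in for an observed (calibrated) module."""
    hidden_size = 4


class BrokenQuantized:
    def __init__(self, hidden_size):
        self.hidden_size = hidden_size

    def from_observed(self, other):      # instance method: needs an existing instance
        return BrokenQuantized(other.hidden_size)


class FixedQuantized:
    def __init__(self, hidden_size):
        self.hidden_size = hidden_size

    @classmethod
    def from_observed(cls, other):       # class method: callable on the class itself
        return cls(other.hidden_size)


def convert(target_cls, observed):
    """Calls the factory on the class, as a converter without an instance would."""
    return target_cls.from_observed(observed)


try:
    convert(BrokenQuantized, Observed())
    broken_error = None
except TypeError as exc:
    # The observed module is bound to `self`, so `other` is reported missing.
    broken_error = exc

fixed = convert(FixedQuantized, Observed())
```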

Test Plan:

`buck test mode/dev //caffe2/test:quantization -- test_custom_module_lstm`

```

buck test mode/dev //caffe2/test:quantization -- test_custom_module_lstm

Parsing buck files: finished in 0.5 sec

Downloaded 0/2 artifacts, 0.00 bytes, 100.0% cache miss (for updated rules)

Building: finished in 9.2 sec (100%) 12622/12622 jobs, 2/12622 updated

Total time: 9.7 sec

More details at https://www.internalfb.com/intern/buck/build/0d87b987-649f-4d06-b0e2-97b5077

Tpx test run coordinator for Facebook. See https://fburl.com/tpx for details.

Running with tpx session id: cb99305f-65c9-438b-a99f-a0a2a3089778

Trace available for this run at /tmp/tpx-20210824-115652.540356/trace.log

Started reporting to test run: https://www.internalfb.com/intern/testinfra/testrun/5066549645030046

✓ ListingSuccess: caffe2/test:quantization - main (12.550)

✓ Pass: caffe2/test:quantization - test_custom_module_lstm (quantization.core.test_quantized_op.TestQuantizedOps) (174.867)

Summary

Pass: 1

ListingSuccess: 1

If you need help understanding your runs, please follow the wiki: https://fburl.com/posting_in_tpx_users

Finished test run: https://www.internalfb.com/intern/testinfra/testrun/5066549645030046

```

Reviewed By: jerryzh168, mtl67

Differential Revision:

D30520473

fbshipit-source-id:

bc5d0b5bb079fd146e2614dd42526fc7d4d4f3c6

Zhengxu Chen [Thu, 26 Aug 2021 03:09:12 +0000 (20:09 -0700)]

Make frozen symbol name customizable in torch deploy. (#63817)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63817

ghstack-source-id:

136699671

Test Plan: eyes

Reviewed By: wconstab

Differential Revision:

D29571559

fbshipit-source-id:

8e3caa4932ef8d7c8559f264f0e9bb5474ad2237

Natalia Gimelshein [Thu, 26 Aug 2021 01:17:10 +0000 (18:17 -0700)]

Compute cuda reduction buffer size in elements (#63969)

Summary:

Resubmit of https://github.com/pytorch/pytorch/issues/63885

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63969

Reviewed By: mruberry

Differential Revision:

D30549423

Pulled By: ngimel

fbshipit-source-id:

b16d25030d44ced789c125a333d72b02a8f45067

Jerry Zhang [Thu, 26 Aug 2021 00:50:48 +0000 (17:50 -0700)]

Back out "Revert D30384746: [fx2trt] Add a test for quantized resnet18" (#63973)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63973

Original commit changeset:

b93235323e22

Test Plan: buck run mode/opt -c python.package_style=inplace caffe2:fx2trt_quantized_resnet_test

Reviewed By:

842974287

Differential Revision:

D30546036

fbshipit-source-id:

2c8302456f072d04da00cf9ad97aa8304bc5e43e

Philip Meier [Wed, 25 Aug 2021 23:42:14 +0000 (16:42 -0700)]

replace `self.assertTrue(torch.allclose(..))` with `self.assertEqual(…)` (#63637)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/63565

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63637

Reviewed By: malfet

Differential Revision:

D30541266

Pulled By: mruberry

fbshipit-source-id:

ab461949782c6908a589ea098fcfcf5c3e081ee6

David Riazati [Wed, 25 Aug 2021 22:54:31 +0000 (15:54 -0700)]

Remove render_test_results job (#63877)

Summary:

This removes the `render_test_results` job. It had been causing confusion among devs when it failed, and it isn't really necessary now that we can render test results on the PR HUD.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63877

Reviewed By: walterddr, janeyx99

Differential Revision:

D30546705

Pulled By: driazati

fbshipit-source-id:

55fdafdb6f80924d941ffc15ee10787cb54f34a1

John Clow [Wed, 25 Aug 2021 22:27:37 +0000 (15:27 -0700)]

[EASY] Update the clang-tidy error message (#63370)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63370

As shown by this CI run, the actual thing that is incorrect is the prompt.

https://github.com/pytorch/pytorch/actions/runs/1137298261

The CI runs the below command instead of the original command.

The original command errors out when importing another file on line 1.

Trying to fix the code to work with the original command causes the CI to error out.

We should actually ask the user to run

`python3 -m tools.linter.install.clang_tidy`

Test Plan: Imported from OSS

Reviewed By: janeyx99, heitorschueroff

Differential Revision:

D30530216

Pulled By: Gamrix

fbshipit-source-id:

2a2b8d539dcc2839e4000c13e82c207fa89bfc9f

Peter Bell [Wed, 25 Aug 2021 22:05:14 +0000 (15:05 -0700)]

Shard python_torch_functions.cpp (#62187)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62187

This file can take 3 minutes on its own to compile, and after python_functions.cpp is the second limiting factor for compile time of `libtorch_python` on a 32-core threadripper. This splits it into 3 files that take around 1 minute each to compile.

Test Plan: Imported from OSS

Reviewed By: H-Huang

Differential Revision:

D29962048

Pulled By: albanD

fbshipit-source-id:

99016d75912bff483fe21b130cef43a6882f8c0e

Jithun Nair [Wed, 25 Aug 2021 22:00:47 +0000 (15:00 -0700)]

Add note on ifdefing based on CUDA_VERSION for ROCm path (#62850)

Summary:

CUDA_VERSION and HIP_VERSION follow unrelated versioning schemes, so it does not make sense to use CUDA_VERSION to determine the ROCm path. This note explicitly addresses it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62850

Reviewed By: mruberry

Differential Revision:

D30547562

Pulled By: malfet

fbshipit-source-id:

02990fa66a88466c2330ab85f446b25b78545150

John Clow [Wed, 25 Aug 2021 21:49:06 +0000 (14:49 -0700)]

Small fixes to the Contributing.txt (#63385)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63385

Correcting a mistake for the pytorch uninstall, and adding an extra note for Darwin.

Test Plan: Imported from OSS

Reviewed By: janeyx99, heitorschueroff

Differential Revision:

D30530234

fbshipit-source-id:

e0f88a1725eeadabfb4b28c1da11e369ee878ab4

Rong Rong (AI Infra) [Wed, 25 Aug 2021 21:34:40 +0000 (14:34 -0700)]

Back out "Temporary fix for remote gpu execution issue" (#63983)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63983

Test for the fixes in D30545351. It should resolve the issue of the remote execution flag being populated incorrectly.

Test Plan: CI

Reviewed By: malfet, seemethere

Differential Revision:

D30549443

fbshipit-source-id:

b3895909f5cd654ba163b77950872b332fbad3fe

Priya Ramani [Wed, 25 Aug 2021 20:08:12 +0000 (13:08 -0700)]

Shape Propagation Pass: Fix AdaptiveAveragePooling2d (#63629)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63629

Test Plan: Imported from OSS

Reviewed By: ZolotukhinM

Differential Revision:

D30461727

Pulled By: priyaramani

fbshipit-source-id:

3873d1d636f79185680b82de06174d8de288c941

driazati [Wed, 25 Aug 2021 19:58:24 +0000 (12:58 -0700)]

Move existing target determinator to tools (#63809)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63809

This moves out the modulefinder determinator to `tools/testing` since it is supposed to be CI-only. This also simplifies run_test.py a little bit.

Test Plan: Imported from OSS

Reviewed By: malfet, seemethere, janeyx99

Differential Revision:

D30497438

Pulled By: driazati

fbshipit-source-id:

1d203037af5af6a20c1e7812da935e7cbb5cd82f

Yi Wang [Wed, 25 Aug 2021 19:46:09 +0000 (12:46 -0700)]

Add a comment on the potential implicit type up-casting (#63905)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63905

as title

ghstack-source-id:

136590703

Test Plan: N/A

Reviewed By: mrshenli

Differential Revision:

D30527929

fbshipit-source-id:

69402bbfa87cfd8fc166ce313cde9736ee072589

mingfeima [Wed, 25 Aug 2021 18:53:52 +0000 (11:53 -0700)]

add BFloat16 support for bernoulli and Dropout on CPU (#56372)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/56372

Test Plan: Imported from OSS

Reviewed By: heitorschueroff

Differential Revision:

D28836792

Pulled By: VitalyFedyunin

fbshipit-source-id:

ede951d172a59276e11383fd767778ab959b5a6b

Howard Huang [Wed, 25 Aug 2021 18:53:24 +0000 (11:53 -0700)]

Update torch.distributed.run OMP_NUM_THREADS message to log.warning (#63953)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63953

Closes #61138

Test:

`python -m torch.distributed.run --nproc_per_node 2 test.py`

Still outputs message

`LOGLEVEL=ERROR python -m torch.distributed.run --nproc_per_node 2 test.py`

Does not output message anymore
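The behaviour in this test can be modelled with the standard `logging` module. How `torch.distributed.run` actually reads `LOGLEVEL` is not shown in this message, so the lookup below (and the `WARNING` default) is an assumption, and `make_logger` and `would_emit_warning` are hypothetical helpers.

```python
import logging
import os


def make_logger(name, env=os.environ):
    """Create a logger whose threshold comes from a LOGLEVEL-style variable."""
    level = env.get("LOGLEVEL", "WARNING")   # assumption: WARNING when unset
    logger = logging.getLogger(name)
    logger.setLevel(level)                   # setLevel accepts level names as strings
    return logger


def would_emit_warning(env):
    """True if a message sent with log.warning would pass the logger's threshold."""
    return make_logger("omp_demo", env).isEnabledFor(logging.WARNING)


default_shows = would_emit_warning({})
error_level_shows = would_emit_warning({"LOGLEVEL": "ERROR"})
```

A message emitted with `log.warning` is visible at the default threshold but filtered once the threshold is raised to `ERROR`, which matches the two runs above.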

cc pietern mrshenli pritamdamania87 zhaojuanmao satgera rohan-varma gqchen aazzolini osalpekar jiayisuse agolynski SciPioneer H-Huang mrzzd cbalioglu gcramer23

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision:

D30542997

Pulled By: H-Huang

fbshipit-source-id:

e7da30dcda51516abf4e56f1f510132e44397027

zhouzhuojie [Wed, 25 Aug 2021 18:30:28 +0000 (11:30 -0700)]

Fix ciflow/all label generation (#63954)

Summary:

The `ciflow/all` label is added automatically, but it needs to be added before we call `gen_root_job_condition`.

- fix the order of adding `ciflow/all`

- refactor all the strings into global constants

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63954

Reviewed By: malfet

Differential Revision:

D30545596

Pulled By: zhouzhuojie

fbshipit-source-id:

83ab668f0234488afb855a72e3ebd4503f7f1a78

driazati [Wed, 25 Aug 2021 18:19:49 +0000 (11:19 -0700)]

Reformat run_test.py (#63808)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63808

`black run_test.py`

Test Plan: Imported from OSS

Reviewed By: seemethere

Differential Revision:

D30497437

Pulled By: driazati

fbshipit-source-id:

41b29b73f41fa4bb15fce5eaa69f8efe614e02f7

Raghavan Raman [Wed, 25 Aug 2021 18:12:57 +0000 (11:12 -0700)]

[Static Runtime] Added caching for the NNC code generated for Logit. (#63840)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63840

Added NNC generated code for Logit to the cache.

```

Logit NNC Benchmark      Time (ns)
                         w/o cache   w/ cache
logit_nnc_sleef/64             543        536
logit_nnc_sleef/512           3517       3465
logit_nnc_sleef/8192         88483      85881
logit_nnc_sleef/32768       337016     323090
logit_nnc_fast/64              167        163
logit_nnc_fast/512             866        817
logit_nnc_fast/8192          13069      12801
logit_nnc_fast/32768         53429      52530
logit_nnc_vml/64               164        151
logit_nnc_vml/512              783        769
logit_nnc_vml/8192           11563      11674
logit_nnc_vml/32768          46720      46452

```

Test Plan: Unit tests and inline_cvr model.

Reviewed By: hlu1

Differential Revision:

D30405424

fbshipit-source-id:

938b1b74758e2612ae151bac890c5f8ebbc42d50

Raghavan Raman [Wed, 25 Aug 2021 18:12:57 +0000 (11:12 -0700)]

[Static Runtime] Added a variable for clamp in the NNC code for Logit. (#63839)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63839

Replaced the use of a constant for clamp in the NNC code for Logit with a variable. This makes it easier to enable caching for Logit.

There is no performance difference with this change, as shown in the micro-benchmarks below.

```

Logit NNC Benchmark      Time (ns)
                         const-clamp  var-clamp
logit_nnc_sleef/64               550        543
logit_nnc_sleef/512             3514       3517
logit_nnc_sleef/8192           85537      82900
logit_nnc_sleef/32768         347635     337016
logit_nnc_fast/64                173        167
logit_nnc_fast/512               829        866
logit_nnc_fast/8192            13286      13069
logit_nnc_fast/32768           51116      53429
logit_nnc_vml/64                 146        164
logit_nnc_vml/512                773        783
logit_nnc_vml/8192             11556      11563
logit_nnc_vml/32768            44815      46720

```

Test Plan: SR unit tests and the inline_cvr model.

Reviewed By: bertmaher

Differential Revision:

D30405466

fbshipit-source-id:

adb891fdae5746439931ce5f43165291fec08f52

Raghavan Raman [Wed, 25 Aug 2021 18:12:57 +0000 (11:12 -0700)]

[Static Runtime] Moved NNC operator definitions to separate files. (#63838)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63838

Refactored NNC operator definitions code into separate files.

Made `TEWrapper` a class with a fixed set of methods and added separate definitions for them based on `TORCH_ENABLE_LLVM` to keep the same functionality as before.

Test Plan: Built and ran Static Runtime tests.

Reviewed By: hlu1

Differential Revision:

D30405467

fbshipit-source-id:

606ef852bb820d5e23a0f8af1bf5dc122e90bceb

Aayush Prakash [Wed, 25 Aug 2021 18:11:08 +0000 (11:11 -0700)]

[Reland] Replacing the p.data access in utils with tensor.set_ . Passes both test_post_localSGD_optimizer_pari and test_periodic_model_averager tests (#63895)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63895

When updating the model parameter, updating `parameter.data` is no longer recommended, because this `data` field will be deprecated in the future.

The replacement is `tensor.set_`.

ghstack-source-id:

136593433

Test Plan:

buck test mode/dev-nosan //caffe2/test/distributed:distributed_nccl_spawn -- test_periodic_model_averager

buck test mode/dev-nosan //caffe2/test/distributed:distributed_nccl_spawn -- test_post_localSGD_optimizer_parity

Reviewed By: SciPioneer

Differential Revision:

D30526178

fbshipit-source-id:

a1ac0ec3665d8623edd5bf94f01c1132daff5c00

albanD [Wed, 25 Aug 2021 18:07:24 +0000 (11:07 -0700)]

clean up engine.cpp thread state (#63115)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63115

This actually changes:

- callbacks now run with proper grad mode even in worker threads

- graphtask's Future callbacks now run with proper TLS when erroring out from a worker thread

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision:

D30388100

Pulled By: albanD

fbshipit-source-id:

7ae9c461c2f0040548dd9e1e314f25e8da0c2e67

Shiyan Deng [Wed, 25 Aug 2021 17:22:17 +0000 (10:22 -0700)]

[fx2trt] Check input device in TRTModule (#63893)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63893

Add a check to ensure all the inputs are on a CUDA device.

Test Plan: CI

Reviewed By: kflu, houseroad

Differential Revision:

D30525265

fbshipit-source-id:

6e50b70fd535defc1f802d51e8bb991b2dd73741

riship [Wed, 25 Aug 2021 16:56:41 +0000 (09:56 -0700)]

bf16 Error message cleanup as well as addition of is_bf16_supported (#63798)

Summary:

ngimel

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63798

Reviewed By: heitorschueroff

Differential Revision:

D30526187

Pulled By: ngimel

fbshipit-source-id:

c484aec14638097c96c720095d3491249b6b2d14

Karen Zhou [Wed, 25 Aug 2021 16:55:02 +0000 (09:55 -0700)]

[pruner] add getter for pruned outputs in base pruner (#63520)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63520

Rather than having to call `module.parametrizations.weight[0].pruned_outputs` each time we need to access the set of pruned indices, we add a getter `get_module_pruned_outputs` which takes the module as an argument and returns the set.

This is used for testing.
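A minimal sketch of such a getter is shown below. It is written as a free function for brevity, whereas the message describes it as part of the base pruner, and `fake_module` is a hypothetical stand-in built from `SimpleNamespace` rather than a real parametrized `nn.Module`.

```python
from types import SimpleNamespace


def get_module_pruned_outputs(module):
    """Return the set of pruned output indices stored on the weight parametrization."""
    return module.parametrizations.weight[0].pruned_outputs


# Stand-in object with the same attribute path as a parametrized module.
fake_module = SimpleNamespace(
    parametrizations=SimpleNamespace(
        weight=[SimpleNamespace(pruned_outputs={1, 3})]
    )
)

pruned = get_module_pruned_outputs(fake_module)
```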

ghstack-source-id:

136561130

Test Plan:

`buck test mode/dev-nosan //caffe2/test:ao -- TestBasePruner`

https://pxl.cl/1N4gK

Reviewed By: z-a-f

Differential Revision:

D30374558

fbshipit-source-id:

e38dfee0879cadde52b942e899a3d8d7151ee493

Karen Zhou [Wed, 25 Aug 2021 16:55:02 +0000 (09:55 -0700)]

[pruner] add support for pruning BatchNorm2d (#63519)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63519

If the pruner should be pruning biases along with weights, then if the model has BatchNorm2d following pruned Conv2d layers, then the corresponding channels of the BatchNorm must also be pruned.

Specifically, they need to be zeroed out rather than fully removed, since in eager mode the dimensions between layers need to be preserved.

To do this, we add a pruning parametrization called `ZeroesParametrization` which zeroes out pruned channels, rather than removing them.

The user must provide, in the config, a tuple of the Conv2d and BatchNorm layers that go together. The `prepare` method adds the tuple to `module_groups`, then adds a `PruningParametrization` to the Conv2d layer and a `ZeroesParametrization` to the BatchNorm layer, and sets their pruned sets to be the same set. That way, during `step`, both masks are updated with the same pruned indices.
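
The shared-set idea can be sketched as follows. This is an illustration under stated assumptions, not the PR's implementation: plain Python lists stand in for per-channel tensors, and the two classes are simplified callables rather than `nn.Module` parametrizations.

```python
class PruningParametrization:
    """Simplified stand-in: removes pruned channels entirely."""

    def __init__(self, pruned_outputs):
        self.pruned_outputs = pruned_outputs

    def __call__(self, channels):
        return [v for i, v in enumerate(channels) if i not in self.pruned_outputs]


class ZeroesParametrization:
    """Simplified stand-in: zeroes pruned channels but keeps the length."""

    def __init__(self, pruned_outputs):
        self.pruned_outputs = pruned_outputs

    def __call__(self, channels):
        return [0.0 if i in self.pruned_outputs else v for i, v in enumerate(channels)]


# Both parametrizations hold the *same* set object, so one update reaches both.
shared_pruned = set()
conv_param = PruningParametrization(shared_pruned)
bn_param = ZeroesParametrization(shared_pruned)

# A `step`-like update: mark channel 1 as pruned.
shared_pruned.add(1)

conv_channels = conv_param([10.0, 20.0, 30.0])
bn_channels = bn_param([1.0, 1.0, 1.0])
```

Because the set is shared by reference, the Conv2d and BatchNorm masks cannot drift apart between updates.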

ghstack-source-id:

136562278

Test Plan:

`buck test mode/dev-nosan //caffe2/test:ao -- TestBasePruner`

https://pxl.cl/1N1P6

Reviewed By: z-a-f

Differential Revision:

D30349855

fbshipit-source-id:

3199d3688d5a70963f9b32d7a8fdac3962ae6a65

Peter Bell [Wed, 25 Aug 2021 16:35:26 +0000 (09:35 -0700)]

Minor OptionalTensorRef updates (#63611)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63611

A few minor updates to `OptionalTensorRef`:

1. Use `Tensor`'s `unsafe_borrow_t` constructor, which avoids an unnecessary `nullptr` check.

2. The copy constructor cannot defer to the `const Tensor&` constructor because it checks that the tensor is defined, and so would fail for disengaged optionals.

3. Use the copy-swap idiom to avoid issues with self-assignment. `x = x` should be a no-op, but the old version would clear `x`.

4. Add pointer-like access for consistency with `optional` and `MaybeOwned`.

Test Plan: Imported from OSS

Reviewed By: bdhirsh

Differential Revision:

D30484704

Pulled By: ezyang

fbshipit-source-id:

738f4bd22359eaecd0a519a04e89a4b44d92da5b

Nikita Shulga [Wed, 25 Aug 2021 16:24:27 +0000 (09:24 -0700)]

Update CMake minimum version to 3.10 (#63660)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63660

Test Plan: Imported from OSS

Reviewed By: janeyx99, mruberry

Differential Revision:

D30543878

fbshipit-source-id:

a7d938807653f39727f2cc7d7ca167200567b6a0

Rong Rong (AI Infra) [Wed, 25 Aug 2021 16:04:28 +0000 (09:04 -0700)]

Temporary fix for remote gpu execution issue (#63899)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63899

See: T99020845

Test Plan: sandcastle

Reviewed By: heitorschueroff

Differential Revision:

D30527384

fbshipit-source-id:

ce9933e5e181322c02d4ed17f3fdaabe4c5ba29e

Ansley Ussery [Wed, 25 Aug 2021 16:01:50 +0000 (09:01 -0700)]

Fix bug in `check_empty_containers` (#63492)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63492

Test Plan: Imported from OSS

Reviewed By: bdhirsh

Differential Revision:

D30402749

Pulled By: ansley

fbshipit-source-id:

7de533355fe91ca4f45b2bafc3bfb205a028c1ed

Jane Xu [Wed, 25 Aug 2021 16:00:13 +0000 (09:00 -0700)]

Swap CUDA 11.1 and 11.3 in CI to make 11.1 periodic (#63900)

Summary:

Preparing for supporting 11.3 in the next release.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63900

Reviewed By: malfet

Differential Revision:

D30541437

Pulled By: janeyx99

fbshipit-source-id:

a7297da7f7818a4291b1c321d62d76fc2c0f1f90

zhouzhuojie [Wed, 25 Aug 2021 15:50:00 +0000 (08:50 -0700)]

[skip ci] Add generated comment to ruleset json (#63896)

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/63896

Reviewed By: heitorschueroff

Differential Revision:

D30529820

Pulled By: zhouzhuojie

fbshipit-source-id:

7529803af23ea36a7bcb673cd399da80da8e3feb

Alban Desmaison [Wed, 25 Aug 2021 14:15:18 +0000 (07:15 -0700)]

Revert

D30526034: [pytorch][PR] compute reduction intermediate buffer size in elements

Test Plan: revert-hammer

Differential Revision:

D30526034 (https://github.com/pytorch/pytorch/commit/

e69a1398cbe534874060460faf36af21d24ce6e7)

Original commit changeset:

0aca7f887974

fbshipit-source-id:

a22472723818d6fe0c11a6e134080df1ac408038

Linbin Yu [Wed, 25 Aug 2021 07:42:03 +0000 (00:42 -0700)]

Revert

D30384746: [fx2trt] Add a test for quantized resnet18

Test Plan: revert-hammer

Differential Revision:

D30384746 (https://github.com/pytorch/pytorch/commit/

10dfa58eba055a1bbc1cc89df033cd2815cbb403)

Original commit changeset:

1a8638777116

fbshipit-source-id:

b93235323e229b391f5456f6e3543988062dd0d4

Jerry Zhang [Wed, 25 Aug 2021 04:33:12 +0000 (21:33 -0700)]

[fx2trt] Add a test for quantized resnet18 (#63446)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63446

Add a test for quantized resnet18 running in TensorRT

Test Plan: buck run mode/opt -c python.package_style=inplace caffe2:fx2trt_quantized_resnet_test

Reviewed By:

842974287

Differential Revision:

D30384746

fbshipit-source-id:

1a863877711618cd23d887694269ed9e44ee606c

Jerry Zhang [Wed, 25 Aug 2021 04:28:40 +0000 (21:28 -0700)]

[quant][graphmode][fx] Make maxpool and flatten produce the reference pattern (#63501)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63501

Currently some ops are considered to work with both float and quantized input, so we may produce patterns like "quant - some_op - dequant". This might not work well with the backend. In the future we may consider changing everything to produce "quant - dequant - some_op - quant - dequant" instead; this PR fixes it for maxpool and flatten only, to unblock resnet benchmarking on TensorRT.
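
The two patterns can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `quant` and `dequant` are a simple affine uint8 scheme, `max` stands in for maxpool, and none of these names come from the FX quantization code.

```python
def quant(values, scale, zero_point):
    """Affine-quantize floats to integers clamped to the uint8 range."""
    return [min(255, max(0, round(v / scale) + zero_point)) for v in values]


def dequant(values, scale, zero_point):
    """Map quantized integers back to floats."""
    return [(q - zero_point) * scale for q in values]


scale, zero_point = 0.5, 0
x = [0.1, 0.7, 1.3]

# Pattern "quant - some_op - dequant": the op runs on quantized values.
q = quant(x, scale, zero_point)
direct = dequant([max(q)], scale, zero_point)

# Reference pattern "quant - dequant - some_op - quant - dequant":
# the op itself runs on floats, bracketed by quant/dequant pairs.
x_fake = dequant(quant(x, scale, zero_point), scale, zero_point)
reference = dequant(quant([max(x_fake)], scale, zero_point), scale, zero_point)
```

For a monotonic op such as max, both patterns give the same result in this sketch; the reference form differs in that the op sees float inputs, which leaves the backend free to decide how to lower it.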

Test Plan:

python test/test_quantization.py TestQuantizeFxOps

Imported from OSS

Reviewed By: mruberry

Differential Revision:

D30402788

fbshipit-source-id:

892c5ff6552775070e2c1453f65846590fb12735

Mikhail Zolotukhin [Wed, 25 Aug 2021 04:21:57 +0000 (21:21 -0700)]

[TensorExpr] LLVMCodegen: Use addFnAttr instead of addAttribute which was deleted. (#63886)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63886

cc gmagogsfm

Test Plan: Imported from OSS

Reviewed By: bertmaher

Differential Revision:

D30523135

Pulled By: ZolotukhinM

fbshipit-source-id:

62e125f917b2a0153eb30879d93cf956587a05e0