Clément Pinard [Fri, 19 Apr 2019 14:17:09 +0000 (07:17 -0700)]

Mention packed accessors in tensor basics doc (#19464)

Summary:

This is a continuation of efforts into packed accessor awareness.

A very simple example is added, along with the mention that the template can hold more arguments.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19464

Differential Revision:

D15012564

Pulled By: soumith

fbshipit-source-id:

a19ed536e016fae519b062d847cc58aef01b1b92

Gregory Chanan [Fri, 19 Apr 2019 13:58:41 +0000 (06:58 -0700)]

Rename 'not_differentiable' to 'non_differentiable'. (#19272)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19272

ghimport-source-id:

755e91efa68c5a1c4377a6853f21b3eee3f8cab5

Differential Revision:

D15003381

Pulled By: gchanan

fbshipit-source-id:

54db27c5c5e65acf65821543db3217de9dd9bdb5

Lu Fang [Fri, 19 Apr 2019 06:56:32 +0000 (23:56 -0700)]

Clean the onnx constant fold code a bit (#19398)

Summary:

This is a follow up PR of https://github.com/pytorch/pytorch/pull/18698 to lint the code using clang-format.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19398

Differential Revision:

D14994517

Pulled By: houseroad

fbshipit-source-id:

2ae9f93e66ce66892a1edc9543ea03932cd82bee

Eric Faust [Fri, 19 Apr 2019 06:48:59 +0000 (23:48 -0700)]

Allow passing dicts as trace inputs. (#18092)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18092

Previously, tracing required all inputs to be either tensors,

or tuples of tensor. Now, we allow users to pass dicts as well.

Differential Revision:

D14491795

fbshipit-source-id:

7a2df218e5d00f898d01fa5b9669f9d674280be3

Lu Fang [Fri, 19 Apr 2019 06:31:32 +0000 (23:31 -0700)]

skip test_trace_c10_ops if _caffe2 is not built (#19099)

Summary:

fix https://github.com/pytorch/pytorch/issues/18142

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19099

Differential Revision:

D15010452

Pulled By: houseroad

fbshipit-source-id:

5bf158d7fce7bfde109d364a3a9c85b83761fffb

Gemfield [Fri, 19 Apr 2019 05:34:52 +0000 (22:34 -0700)]

remove needless ## in REGISTER_ALLOCATOR definition. (#19261)

Summary:

remove needless ## in REGISTER_ALLOCATOR definition.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19261

Differential Revision:

D15002025

Pulled By: soumith

fbshipit-source-id:

40614b1d79d1fe05ccf43f0ae5aab950e4c875c2

Lara Haidar-Ahmad [Fri, 19 Apr 2019 05:25:04 +0000 (22:25 -0700)]

Strip doc_string from exported ONNX models (#18882)

Summary:

Strip the doc_string by default from the exported ONNX models (this string has the stack trace and information about the local repos and folders, which can be confidential).

The users can still generate the doc_string by specifying add_doc_string=True in torch.onnx.export().

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18882

Differential Revision:

D14889684

Pulled By: houseroad

fbshipit-source-id:

26d2c23c8dc3f484544aa854b507ada429adb9b8

Natalia Gimelshein [Fri, 19 Apr 2019 05:20:44 +0000 (22:20 -0700)]

improve dim sort performance (#19379)

Summary:

We are already using custom comparators for sorting (for a good reason), but are still making 2 sorting passes - global sort and stable sorting to bring values into their slices. Using a custom comparator to sort within a slice allows us to avoid second sorting pass and brings up to 50% perf improvement.

t-vi I know you are moving sort to ATen, and changing THC is discouraged, but #18350 seems dormant. I'm fine with #18350 landing first, and then I can put in these changes.

cc umanwizard for review.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19379

Differential Revision:

D15011019

Pulled By: soumith

fbshipit-source-id:

48e5f5aef51789b166bb72c75b393707a9aed57c

SsnL [Fri, 19 Apr 2019 05:16:05 +0000 (22:16 -0700)]

Fix missing import sys in pin_memory.py (#19419)

Summary:

kostmo pointed this out in #15331. Thanks :)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19419

Differential Revision:

D15002846

Pulled By: soumith

fbshipit-source-id:

c600fab3f7a7a5147994b9363910af4565c7ee65

Ran [Fri, 19 Apr 2019 05:10:34 +0000 (22:10 -0700)]

update documentation of PairwiseDistance#19241 (#19412)

Summary:

Fix the documentation of PairwiseDistance [#19241](https://github.com/pytorch/pytorch/issues/19241)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19412

Differential Revision:

D14998271

Pulled By: soumith

fbshipit-source-id:

bcb2aa46d3b3102c4480f2d24072a5e14b049888

Soumith Chintala [Fri, 19 Apr 2019 05:10:30 +0000 (22:10 -0700)]

fixes link in TripletMarginLoss (#19417)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/19245

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19417

Differential Revision:

D15001610

Pulled By: soumith

fbshipit-source-id:

1b85ebe196eb5a3af5eb83d914dafa83b9b35b31

Mingzhe Li [Fri, 19 Apr 2019 02:56:50 +0000 (19:56 -0700)]

make separate operators as independent binaries (#19450)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19450

We want to make each operator benchmark as a separate binary. The previous way to run the benchmark is by collecting all operators into a single binary, it is unnecessary when we want to filter a specific operator. This diff aims to resolve that issue.

Reviewed By: ilia-cher

Differential Revision:

D14808159

fbshipit-source-id:

43cd25b219c6e358d0cd2a61463b34596bf3bfac

svcscm [Fri, 19 Apr 2019 01:26:20 +0000 (18:26 -0700)]

Updating submodules

Reviewed By: cdelahousse

fbshipit-source-id:

a727513842c0a240b377bda4e313fbedbc54c2e8

Xiang Gao [Fri, 19 Apr 2019 01:24:50 +0000 (18:24 -0700)]

Step 4: add support for unique with dim=None (#18651)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18651

ghimport-source-id:

e11988130a3f9a73529de0b0d08b4ec25fbc639c

Differential Revision:

D15000463

Pulled By: VitalyFedyunin

fbshipit-source-id:

9e258e473dea6a3fc2307da2119b887ba3f7934a

Michael Suo [Fri, 19 Apr 2019 01:06:21 +0000 (18:06 -0700)]

allow bools to be used as attributes (#19440)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19440

ghimport-source-id:

9c962054d760526bf7da324b114455fcb1038521

Differential Revision:

D15005723

Pulled By: suo

fbshipit-source-id:

75fc87ae33894fc34d3b913881defb7e6b8d7af0

David Riazati [Fri, 19 Apr 2019 01:02:14 +0000 (18:02 -0700)]

Fix test build (#19444)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19444

ghimport-source-id:

c85db00e8037e7f6f0424eb8bd17f957d20b7247

Reviewed By: eellison

Differential Revision:

D15008679

Pulled By: driazati

fbshipit-source-id:

0987035116d9d0069794d96395c8ad458ba7c121

Thomas Viehmann [Fri, 19 Apr 2019 00:52:33 +0000 (17:52 -0700)]

pow scalar exponent / base autodiff, fusion (#19324)

Summary:

Fixes: #19253

Fixing pow(Tensor, float) is straightforward.

The breakage for pow(float, Tensor) is a bit more subtle to trigger, and fixing needs `torch.log` (`math.log` didn't work) from the newly merged #19115 (Thanks ngimel for pointing out this has landed.)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19324

Differential Revision:

D15003531

Pulled By: ailzhang

fbshipit-source-id:

8b22138fa27a43806b82886fb3a7b557bbb5a865

Gao, Xiang [Fri, 19 Apr 2019 00:46:43 +0000 (17:46 -0700)]

Improve unique CPU performance for returning counts (#19352)

Summary:

Benchmark on a tensor of shape `torch.Size([15320, 2])`. Benchmark code:

```python

print(torch.__version__)

print()

a = tensor.flatten()

print('cpu, sorted=False:')

%timeit torch._unique2_temporary_will_remove_soon(a, sorted=False)

%timeit torch._unique2_temporary_will_remove_soon(a, sorted=False, return_inverse=True)

%timeit torch._unique2_temporary_will_remove_soon(a, sorted=False, return_counts=True)

%timeit torch._unique2_temporary_will_remove_soon(a, sorted=False, return_inverse=True, return_counts=True)

print()

print('cpu, sorted=True:')

%timeit torch._unique2_temporary_will_remove_soon(a)

%timeit torch._unique2_temporary_will_remove_soon(a, return_inverse=True)

%timeit torch._unique2_temporary_will_remove_soon(a, return_counts=True)

%timeit torch._unique2_temporary_will_remove_soon(a, return_inverse=True, return_counts=True)

print()

```

Before

```

1.1.0a0+36854fe

cpu, sorted=False:

340 µs ± 4.05 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

724 µs ± 6.28 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

54.3 ms ± 469 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

54.6 ms ± 659 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

cpu, sorted=True:

341 µs ± 7.21 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

727 µs ± 7.05 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

54.7 ms ± 795 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

54.3 ms ± 647 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

```

After

```

1.1.0a0+261d9e8

cpu, sorted=False:

350 µs ± 865 ns per loop (mean ± std. dev. of 7 runs, 1000 loops each)

771 µs ± 598 ns per loop (mean ± std. dev. of 7 runs, 1000 loops each)

1.09 ms ± 6.86 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

1.09 ms ± 4.74 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

cpu, sorted=True:

324 µs ± 4.99 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

705 µs ± 3.18 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

1.09 ms ± 5.22 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

1.09 ms ± 5.63 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19352

Differential Revision:

D14984717

Pulled By: VitalyFedyunin

fbshipit-source-id:

3c56f85705ab13a92ec7406f4f30be77226a3210

Pieter Noordhuis [Fri, 19 Apr 2019 00:44:37 +0000 (17:44 -0700)]

Revert

D14909203: Remove usages of TypeID

Differential Revision:

D14909203

Original commit changeset:

d716179c484a

fbshipit-source-id:

992ff1fcd6d35d3f2ae768c7e164b7a0ba871914

Sebastian Messmer [Fri, 19 Apr 2019 00:16:58 +0000 (17:16 -0700)]

Add tests for argument types (#19290)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19290

Add test cases for the supported argument types

And TODOs for some unsupported ones that we might want to support.

Reviewed By: dzhulgakov

Differential Revision:

D14931920

fbshipit-source-id:

c47bbb295a54ac9dc62569bf5c273368c834392c

David Riazati [Fri, 19 Apr 2019 00:06:09 +0000 (17:06 -0700)]

Allow optionals arguments from C++ (#19311)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19311

ghimport-source-id:

699f62eb2bbad53ff2045fb2e217eb1402f2cdc5

Reviewed By: eellison

Differential Revision:

D14983059

Pulled By: driazati

fbshipit-source-id:

442f96d6bd2a8ce67807ccad2594b39aae489ca5

Mingzhe Li [Fri, 19 Apr 2019 00:03:56 +0000 (17:03 -0700)]

Enhance front-end to add op (#19433)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19433

For operator benchmark project, we need to cover a lot of operators, so the interface for adding operators needs to be very clean and simple. This diff is implementing a new interface to add op.

Here is the logic to add new operator to the benchmark:

```

long_config = {}

short_config = {}

map_func

add_test(

[long_config, short_config],

map_func,

[caffe2 op]

[pt op]

)

```

Reviewed By: zheng-xq

Differential Revision:

D14791191

fbshipit-source-id:

ac6738507cf1b9d6013dc8e546a2022a9b177f05

Dmytro Dzhulgakov [Thu, 18 Apr 2019 23:34:20 +0000 (16:34 -0700)]

Fix cpp_custom_type_hack variable handling (#19400)

Summary:

My bad - it might be called in variable and non-variable context. So it's better to just inherit variable-ness from the caller.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19400

Reviewed By: ezyang

Differential Revision:

D14994781

Pulled By: dzhulgakov

fbshipit-source-id:

cb9d055b44a2e1d7bbf2e937d558e6bc75037f5b

Ailing Zhang [Thu, 18 Apr 2019 22:58:45 +0000 (15:58 -0700)]

fix hub doc formatting issues (#19434)

Summary:

minor fixes for doc

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19434

Differential Revision:

D15003903

Pulled By: ailzhang

fbshipit-source-id:

400768d9a5ee24f9183faeec9762b688c48c531b

Pieter Noordhuis [Thu, 18 Apr 2019 21:51:37 +0000 (14:51 -0700)]

Recursively find tensors in DDP module output (#19360)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19360

We'll return the output object verbatim since it is a freeform object.

We need to find any tensors in this object, though, because we need to

figure out which parameters were used during this forward pass, to

ensure we short circuit reduction for any unused parameters.

Before this commit only lists were handled and the functionality went

untested. This commit adds support for dicts and recursive structures,

and also adds a test case.

Closes #19354.

Reviewed By: mrshenli

Differential Revision:

D14978016

fbshipit-source-id:

4bb6999520871fb6a9e4561608afa64d55f4f3a8

Sebastian Messmer [Thu, 18 Apr 2019 21:07:30 +0000 (14:07 -0700)]

Moving at::Tensor into caffe2::Tensor without bumping refcount (#19388)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19388

The old implementation forced a refcount bump when converting at::Tensor to caffe2::Tensor.

Now, it is possible to move it without a refcount bump.

Reviewed By: dzhulgakov

Differential Revision:

D14986815

fbshipit-source-id:

92b4b0a6f323ed38376ffad75f960cad250ecd9b

Ailing Zhang [Thu, 18 Apr 2019 19:07:17 +0000 (12:07 -0700)]

Fix pickling torch.float32 (#18045)

Summary:

Attempt fix for #14057 . This PR fixes the example script in the issue.

The old behavior is a bit confusing here. What happened to pickling is python2 failed to recognize `torch.float32` is in module `torch`, thus it's looking for `torch.float32` in module `__main__`. Python3 is smart enough to handle it.

According to the doc [here](https://docs.python.org/2/library/pickle.html#object.__reduce__), it seems `__reduce__` should return `float32` instead of the old name `torch.float32`. In this way python2 is able to find `float32` in `torch` module.

> If a string is returned, it names a global variable whose contents are pickled as normal. The string returned by __reduce__() should be the object’s local name relative to its module

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18045

Differential Revision:

D14990638

Pulled By: ailzhang

fbshipit-source-id:

816b97d63a934a5dda1a910312ad69f120b0b4de

David Riazati [Thu, 18 Apr 2019 18:07:45 +0000 (11:07 -0700)]

Respect order of Parameters in rnn.py (#18198)

Summary:

Previously to get a list of parameters this code was just putting them in the reverse order in which they were defined, which is not always right. This PR allows parameter lists to define the order themselves. To do this parameter lists need to have a corresponding function that provides the names of the parameters.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18198

Differential Revision:

D14966270

Pulled By: driazati

fbshipit-source-id:

59331aa59408660069785906304b2088c19534b2

Nikolay Korovaiko [Thu, 18 Apr 2019 16:56:02 +0000 (09:56 -0700)]

Refactor EmitLoopCommon to make it more amenable to future extensions (#19341)

Summary:

This PR paves the way for support more iterator types in for-in loops.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19341

Differential Revision:

D14992749

Pulled By: Krovatkin

fbshipit-source-id:

e2d4c9465c8ec3fc74fbf23006dcb6783d91795f

Kutta Srinivasan [Thu, 18 Apr 2019 16:31:03 +0000 (09:31 -0700)]

Cleanup init_process_group (#19033)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19033

torch.distributed.init_process_group() has had many parameters added, but the contract isn't clear. Adding documentation, asserts, and explicit args should make this clearer to callers and more strictly enforced.

Reviewed By: mrshenli

Differential Revision:

D14813070

fbshipit-source-id:

80e4e7123087745bed436eb390887db9d1876042

peterjc123 [Thu, 18 Apr 2019 13:57:41 +0000 (06:57 -0700)]

Sync FindCUDA/select_computer_arch.cmake from upstream (#19392)

Summary:

1. Fixes auto detection for Turing cards.

2. Adds Turing Support

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19392

Differential Revision:

D14996142

Pulled By: soumith

fbshipit-source-id:

3cd45c58212cf3db96e5fa19b07d9f1b59a1666a

Alexandros Metsai [Thu, 18 Apr 2019 13:33:18 +0000 (06:33 -0700)]

Update module.py documentation. (#19347)

Summary:

Added the ">>>" python interpreter sign(three greater than symbols), so that the edited lines will appear as code, not comments/output, in the documentation. Normally, the interpreter would display "..." when expecting a block, but I'm not sure how this would work on the pytorch docs website. It seems that in other code examples the ">>>" sign is used as well, therefore I used with too.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19347

Differential Revision:

D14986154

Pulled By: soumith

fbshipit-source-id:

8f4d07d71ff7777b46c459837f350eb0a1f17e84

Tongzhou Wang [Thu, 18 Apr 2019 13:33:08 +0000 (06:33 -0700)]

Add device-specific cuFFT plan caches (#19300)

Summary:

Fixes https://github.com/pytorch/pytorch/issues/19224

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19300

Differential Revision:

D14986967

Pulled By: soumith

fbshipit-source-id:

8c31237db50d6924bba1472434c10326610d9255

Mingfei Ma [Thu, 18 Apr 2019 13:31:24 +0000 (06:31 -0700)]

Improve bmm() performance on CPU when input tensor is non-contiguous (#19338)

Summary:

This PR aims to improve Transformer performance on CPU, `bmm()` is one of the major bottlenecks now.

Current logic of `bmm()` on CPU only uses MKL batch gemm when the inputs `A` and `B` are contiguous or transposed. So when `A` or `B` is a slice of a larger tensor, it falls to a slower path.

`A` and `B` are both 3D tensors. MKL is able to handle the batch matrix multiplication on occasion that `A.stride(1) == 1 || A.stride(2) == 1` and `B.stride(1) == || B.stride(2) == 1`.

From [fairseq](https://github.com/pytorch/fairseq) implementation of Transformer, multi-head attention has two places to call bmm(), [here](https://github.com/pytorch/fairseq/blob/master/fairseq/modules/multihead_attention.py#L167) and [here](https://github.com/pytorch/fairseq/blob/master/fairseq/modules/multihead_attention.py#L197), `q`, `k`, `v` are all slices from larger tensor. So the `bmm()` falls to slow path at the moment.

Results on Xeon 6148 (20*2 cores 2.5GHz) indicate this PR improves Transformer training performance by **48%** (seconds per iteration reduced from **5.48** to **3.70**), the inference performance should also be boosted.

Before:

```

| epoch 001: 0%| | 27/25337 [02:27<38:31:26, 5.48s/it, loss=16.871, nll_loss=16.862, ppl=119099.70, wps=865, ups=0, wpb=4715.778, bsz=129.481, num_updates=27, lr=4.05e-06, gnorm=9.133,

```

After:

```

| epoch 001: 0%| | 97/25337 [05:58<25:55:49, 3.70s/it, loss=14.736, nll_loss=14.571, ppl=24339.38, wps=1280, ups=0, wpb=4735.299, bsz=131.134, num_updates=97, lr=1.455e-05, gnorm=3.908,

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19338

Differential Revision:

D14986346

Pulled By: soumith

fbshipit-source-id:

827106245af908b8a4fda69ed0288d322b028f08

Sebastian Messmer [Thu, 18 Apr 2019 09:00:51 +0000 (02:00 -0700)]

Optional inputs and outputs (#19289)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19289

Allow optional inputs and outputs in native c10 operators

Reviewed By: dzhulgakov

Differential Revision:

D14931927

fbshipit-source-id:

48f8bec009c6374345b34d933f148c08bb4f7118

Sebastian Messmer [Thu, 18 Apr 2019 09:00:51 +0000 (02:00 -0700)]

Add some tests (#19288)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19288

-

Reviewed By: dzhulgakov

Differential Revision:

D14931924

fbshipit-source-id:

6c53b5d1679080939973d33868e58ca4ad70361d

Sebastian Messmer [Thu, 18 Apr 2019 09:00:49 +0000 (02:00 -0700)]

Use string based schema for exposing caffe2 ops (#19287)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19287

Since we now have a string-schema-based op registration API, we can also use it when exposing caffe2 operators.

Reviewed By: dzhulgakov

Differential Revision:

D14931925

fbshipit-source-id:

ec162469d2d94965e8c99d431c801ae7c43849c8

Sebastian Messmer [Thu, 18 Apr 2019 09:00:49 +0000 (02:00 -0700)]

Allow registering ops without specifying the full schema (#19286)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19286

The operator registration API now allows registering an operator by only giving the operator name and not the full operator schema,

as long as the operator schema can be inferred from the kernel function.

Reviewed By: dzhulgakov

Differential Revision:

D14931921

fbshipit-source-id:

3776ce43d4ce67bb5a3ea3d07c37de96eebe08ba

Sebastian Messmer [Thu, 18 Apr 2019 09:00:49 +0000 (02:00 -0700)]

Add either type (#19285)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19285

The either type is a tagged union with two members.

This is going to be used in a diff stacked on top to allow a function to return one of two types.

Also, generally, either<Error, Result> is a great pattern for returning value_or_error from a function without using exceptions and we could use this class for that later.

Reviewed By: dzhulgakov

Differential Revision:

D14931923

fbshipit-source-id:

7d1dd77b3e5b655f331444394dcdeab24772ab3a

Sebastian Messmer [Thu, 18 Apr 2019 09:00:49 +0000 (02:00 -0700)]

Allow ops without tensor args if only fallback kernel exists (#19284)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19284

Instantiating a dispatch table previously only worked when the op had a tensor argument we could dispatch on.

However, the legacy API for custom operators didn't have dispatch and also worked for operators without tensor arguments, so we need to continue supporting that.

It probably generally makes sense to support this as long as there's only a fallback kernel and no dispatched kernel registered.

This diff adds that functionality.

Reviewed By: dzhulgakov

Differential Revision:

D14931926

fbshipit-source-id:

38fadcba07e5577a7329466313c89842d50424f9

Sebastian Messmer [Thu, 18 Apr 2019 07:57:44 +0000 (00:57 -0700)]

String-based schemas in op registration API (#19283)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19283

Now that the function schema parser is available in ATen/core, we can use it from the operator registration API to register ops based on string schemas.

This does not allow registering operators based on only the name yet - the full schema string needs to be defined.

A diff stacked on top will add name based registration.

Reviewed By: dzhulgakov

Differential Revision:

D14931919

fbshipit-source-id:

71e490dc65be67d513adc63170dc3f1ce78396cc

Sebastian Messmer [Thu, 18 Apr 2019 07:57:44 +0000 (00:57 -0700)]

Move function schema parser to ATen/core build target (#19282)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19282

This is largely a hack because we need to use the function schema parser from ATen/core

but aren't clear yet on how the final software architecture should look like.

- Add function schema parser files from jit to ATen/core build target.

- Also move ATen/core build target one directory up to allow this.

We only change the build targets and don't move the files yet because this is likely

not the final build set up and we want to avoid repeated interruptions

for other developers. cc zdevito

Reviewed By: dzhulgakov

Differential Revision:

D14931922

fbshipit-source-id:

26462e2e7aec9e0964706138edd3d87a83b964e3

Lu Fang [Thu, 18 Apr 2019 07:33:00 +0000 (00:33 -0700)]

update of fbcode/onnx to

ad7313470a9119d7e1afda7edf1d654497ee80ab (#19339)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19339

Previous import was

971311db58f2fa8306d15e1458b5fd47dbc8d11c

Included changes:

- **[

ad731347](https://github.com/onnx/onnx/commit/

ad731347)**: Fix shape inference for matmul (#1941) <Bowen Bao>

- **[

3717dc61](https://github.com/onnx/onnx/commit/

3717dc61)**: Shape Inference Tests for QOps (#1929) <Ashwini Khade>

- **[

a80c3371](https://github.com/onnx/onnx/commit/

a80c3371)**: Prevent unused variables from generating warnings across all platforms. (#1930) <Pranav Sharma>

- **[

be9255c1](https://github.com/onnx/onnx/commit/

be9255c1)**: add title (#1919) <Prasanth Pulavarthi>

- **[

7a112a6f](https://github.com/onnx/onnx/commit/

7a112a6f)**: add quantization ops in onnx (#1908) <Ashwini Khade>

- **[

6de42d7d](https://github.com/onnx/onnx/commit/

6de42d7d)**: Create working-groups.md (#1916) <Prasanth Pulavarthi>

Reviewed By: yinghai

Differential Revision:

D14969962

fbshipit-source-id:

5ec64ef7aee5161666ed0c03e201be0ae20826f9

Roy Li [Thu, 18 Apr 2019 07:18:35 +0000 (00:18 -0700)]

Remove copy and copy_ special case on Type (#18972)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18972

ghimport-source-id:

b5d3012b00530145fa24ab0cab693a7e80cb5989

Differential Revision:

D14816530

Pulled By: li-roy

fbshipit-source-id:

9c7a166abb22d2cd1f81f352e44d9df1541b1774

Spandan Tiwari [Thu, 18 Apr 2019 07:06:59 +0000 (00:06 -0700)]

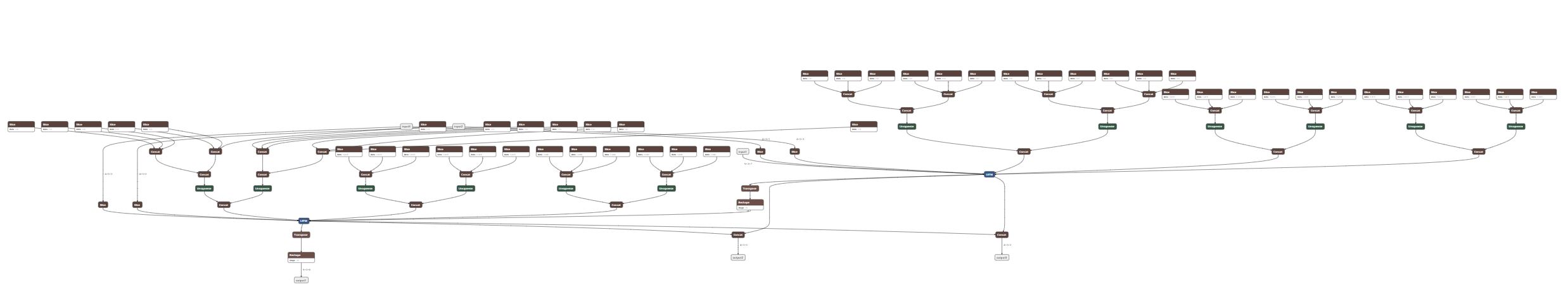

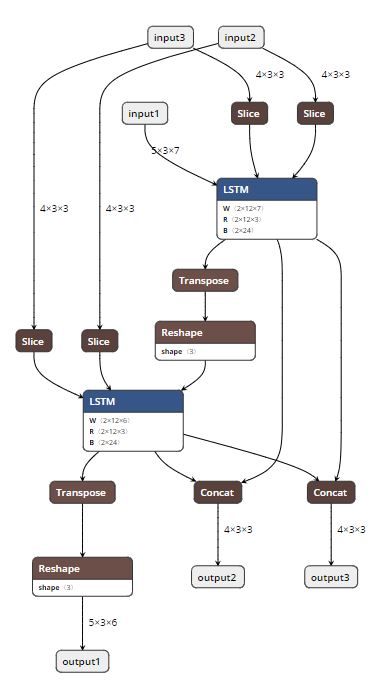

Add constant folding to ONNX graph during export (Resubmission) (#18698)

Summary:

Rewritten version of https://github.com/pytorch/pytorch/pull/17771 using graph C++ APIs.

This PR adds the ability to do constant folding on ONNX graphs during PT->ONNX export. This is done mainly to optimize the graph and make it leaner. The two attached snapshots show a multiple-node LSTM model before and after constant folding.

A couple of notes:

1. Constant folding is by default turned off for now. The goal is to turn it on by default once we have validated it through all the tests.

2. Support for folding in nested blocks is not in place, but will be added in the future, if needed.

**Original Model:**

**Constant-folded model:**

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18698

Differential Revision:

D14889768

Pulled By: houseroad

fbshipit-source-id:

b6616b1011de9668f7c4317c880cb8ad4c7b631a

Roy Li [Thu, 18 Apr 2019 06:52:44 +0000 (23:52 -0700)]

Remove usages of TypeID (#19183)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19183

ghimport-source-id:

9af190b072523459fa61e5e79419b88ac8586a4d

Differential Revision:

D14909203

Pulled By: li-roy

fbshipit-source-id:

d716179c484aebfe3ec30087c5ecd4a11848ffc3

Sebastian Messmer [Thu, 18 Apr 2019 06:41:22 +0000 (23:41 -0700)]

Fixing function schema parser for Android (#19281)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19281

String<->Number conversions aren't available in the STL used in our Android environment.

This diff adds workarounds for that so that the function schema parser can be compiled for android

Reviewed By: dzhulgakov

Differential Revision:

D14931649

fbshipit-source-id:

d5d386f2c474d3742ed89e52dff751513142efad

Sebastian Messmer [Thu, 18 Apr 2019 06:41:21 +0000 (23:41 -0700)]

Split function schema parser from operator (#19280)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19280

We want to use the function schema parser from ATen/core, but with as little dependencies as possible.

This diff moves the function schema parser into its own file and removes some of its dependencies.

Reviewed By: dzhulgakov

Differential Revision:

D14931651

fbshipit-source-id:

c2d787202795ff034da8cba255b9f007e69b4aea

Ailing Zhang [Thu, 18 Apr 2019 06:38:26 +0000 (23:38 -0700)]

fix hub doc format

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19396

Differential Revision:

D14993859

Pulled By: ailzhang

fbshipit-source-id:

bdf94e54ec35477cfc34019752233452d84b6288

Mikhail Zolotukhin [Thu, 18 Apr 2019 05:05:49 +0000 (22:05 -0700)]

Clang-format torch/csrc/jit/passes/quantization.cpp. (#19385)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19385

ghimport-source-id:

67f808db7dcbcb6980eac79a58416697278999b0

Differential Revision:

D14991917

Pulled By: ZolotukhinM

fbshipit-source-id:

6c2e57265cc9f0711752582a04d5a070482ed1e6

Shen Li [Thu, 18 Apr 2019 04:18:49 +0000 (21:18 -0700)]

Allow DDP to wrap multi-GPU modules (#19271)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19271

allow DDP to take multi-gpu models

Reviewed By: pietern

Differential Revision:

D14822375

fbshipit-source-id:

1eebfaa33371766d3129f0ac6f63a573332b2f1c

Jiyan Yang [Thu, 18 Apr 2019 04:07:42 +0000 (21:07 -0700)]

Add validator for optimizers when parameters are shared

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18497

Reviewed By: kennyhorror

Differential Revision:

D14614738

fbshipit-source-id:

beddd8349827dcc8ccae36f21e5d29627056afcd

Ailing Zhang [Thu, 18 Apr 2019 04:01:36 +0000 (21:01 -0700)]

hub minor fixes (#19247)

Summary:

A few improvements while doing bert model

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19247

Differential Revision:

D14989345

Pulled By: ailzhang

fbshipit-source-id:

f4846813f62b6d497fbe74e8552c9714bd8dc3c7

Elias Ellison [Thu, 18 Apr 2019 02:52:16 +0000 (19:52 -0700)]

fix wrong schema (#19370)

Summary:

Op was improperly schematized previously. Evidently checkScript does not test if the outputs are the same type.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19370

Differential Revision:

D14985159

Pulled By: eellison

fbshipit-source-id:

feb60552afa2a6956d71f64801f15e5fe19c3a91

Mikhail Zolotukhin [Thu, 18 Apr 2019 01:34:50 +0000 (18:34 -0700)]

Fix printing format in examples in jit/README.md. (#19323)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19323

ghimport-source-id:

74a01917de70c9d59099cf601b24f3cb484ab7be

Differential Revision:

D14990100

Pulled By: ZolotukhinM

fbshipit-source-id:

87ede08c8ca8f3027b03501fbce8598379e8b96c

Eric Faust [Wed, 17 Apr 2019 23:59:34 +0000 (16:59 -0700)]

Allow for single-line deletions in clang_tidy.py (#19082)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19082

When you have just one line of deletions, just as with additions, there is no count printed.

Without this fix, we ignore all globs with single-line deletions when selecting which lines were changed.

When all the changes in the file were single-line, this meant no line-filtering at all!

Differential Revision:

D14860426

fbshipit-source-id:

c60e9d84f9520871fc0c08fa8c772c227d06fa27

Michael Suo [Wed, 17 Apr 2019 23:48:28 +0000 (16:48 -0700)]

Revert

D14901379: [jit] Add options to Operator to enable registration of alias analysis passes

Differential Revision:

D14901379

Original commit changeset:

d92a497e280f

fbshipit-source-id:

51d31491ab90907a6c95af5d8a59dff5e5ed36a4

Michael Suo [Wed, 17 Apr 2019 23:48:28 +0000 (16:48 -0700)]

Revert

D14901485: [jit] Only require python print on certain namespaces

Differential Revision:

D14901485

Original commit changeset:

4b02a66d325b

fbshipit-source-id:

93348056c00f43c403cbf0d34f8c565680ceda11

Yinghai Lu [Wed, 17 Apr 2019 23:40:58 +0000 (16:40 -0700)]

Remove unused template parameter in OnnxifiOp (#19362)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19362

`float` type is never used in OnnxifiOp....

Reviewed By: bddppq

Differential Revision:

D14977970

fbshipit-source-id:

8fee02659dbe408e5a3e0ff95d74c04836c5c281

Jerry Zhang [Wed, 17 Apr 2019 23:10:05 +0000 (16:10 -0700)]

Add empty_quantized (#18960)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18960

empty_affine_quantized creates an empty affine quantized Tensor from scratch.

We might need this when we implement quantized operators.

Differential Revision:

D14810261

fbshipit-source-id:

f07d8bf89822d02a202ee81c78a17aa4b3e571cc

Elias Ellison [Wed, 17 Apr 2019 23:01:41 +0000 (16:01 -0700)]

Cast not expressions to bool (#19361)

Summary:

As part of implicitly casting condition statements, we should be casting not expressions as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19361

Differential Revision:

D14984275

Pulled By: eellison

fbshipit-source-id:

f8dae64f74777154c25f7a6bcdac03cf44cbb60b

Owen Anderson [Wed, 17 Apr 2019 22:33:07 +0000 (15:33 -0700)]

Eliminate type dispatch from copy_kernel, and use memcpy directly rather than implementing our own copy. (#19198)

Summary:

It turns out that copying bytes is the same no matter what type

they're interpreted as, and memcpy is already vectorized on every

platform of note. Paring this down to the simplest implementation

saves just over 4KB off libtorch.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19198

Differential Revision:

D14922656

Pulled By: resistor

fbshipit-source-id:

bb03899dd8f6b857847b822061e7aeb18c19e7b4

Bram Wasti [Wed, 17 Apr 2019 21:17:01 +0000 (14:17 -0700)]

Only require python print on certain namespaces (#18846)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18846

ghimport-source-id:

b211e15d24c88fdc32d79222d9fce2fa9c291541

Differential Revision:

D14901485

Pulled By: bwasti

fbshipit-source-id:

4b02a66d325ba5391d1f838055aea13b5e4f6485

Bram Wasti [Wed, 17 Apr 2019 20:07:12 +0000 (13:07 -0700)]

Add options to Operator to enable registration of alias analysis passes (#18589)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18589

ghimport-source-id:

dab203f6be13bf41963848f5315235b6bbe45c08

Differential Revision:

D14901379

Pulled By: bwasti

fbshipit-source-id:

d92a497e280f1b0a63b11a9fd8ae9b48bf52e6bf

Richard Zou [Wed, 17 Apr 2019 19:58:04 +0000 (12:58 -0700)]

Error out on in-place binops on tensors with internal overlap (#19317)

Summary:

This adds checks for `mul_`, `add_`, `sub_`, `div_`, the most common

binops. See #17935 for more details.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19317

Differential Revision:

D14972399

Pulled By: zou3519

fbshipit-source-id:

b9de331dbdb2544ee859ded725a5b5659bfd11d2

Vitaly Fedyunin [Wed, 17 Apr 2019 19:49:37 +0000 (12:49 -0700)]

Update for #19326

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19367

Differential Revision:

D14981835

Pulled By: VitalyFedyunin

fbshipit-source-id:

e8a97986d9669ed7f465a7ba771801bdd043b606

Zafar Takhirov [Wed, 17 Apr 2019 18:19:19 +0000 (11:19 -0700)]

Decorator to make sure we can import `core` from caffe2 (#19273)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19273

Some of the CIs are failing if the protobuf is not installed. Protobuf is imported as part of the `caffe2.python.core`, and this adds a skip decorator to avoid running tests that depend on `caffe2.python.core`

Reviewed By: jianyuh

Differential Revision:

D14936387

fbshipit-source-id:

e508a1858727bbd52c951d3018e2328e14f126be

Yinghai Lu [Wed, 17 Apr 2019 16:37:37 +0000 (09:37 -0700)]

Eliminate AdjustBatch ops (#19083)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19083

As we have discussed, there are too many of AdjustBatch ops and they incur reallocation overhead and affects the performance. We will eliminate these ops by

- inling the input adjust batch op into Glow

- inling the output adjust batch op into OnnxifiOp and do that only conditionally.

This is the C2 part of the change and requires change from Glow side to work e2e.

Reviewed By: rdzhabarov

Differential Revision:

D14860582

fbshipit-source-id:

ac2588b894bac25735babb62b1924acc559face6

Bharat123rox [Wed, 17 Apr 2019 11:31:46 +0000 (04:31 -0700)]

Add rst entry for nn.MultiheadAttention (#19346)

Summary:

Fix #19259 by adding the missing `autoclass` entry for `nn.MultiheadAttention` from [here](https://github.com/pytorch/pytorch/blob/master/torch/nn/modules/activation.py#L676)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19346

Differential Revision:

D14971426

Pulled By: soumith

fbshipit-source-id:

ceaaa8ea4618c38fa2bff139e7fa0d6c9ea193ea

Sebastian Messmer [Wed, 17 Apr 2019 06:59:02 +0000 (23:59 -0700)]

Delete C10Tensor (#19328)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19328

Plans changed and we don't want this class anymore.

Reviewed By: dzhulgakov

Differential Revision:

D14966746

fbshipit-source-id:

09ea4c95b352bc1a250834d32f35a94e401f2347

Junjie Bai [Wed, 17 Apr 2019 04:44:22 +0000 (21:44 -0700)]

Fix python lint (#19331)

Summary:

VitalyFedyunin jerryzh168

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19331

Differential Revision:

D14969435

Pulled By: bddppq

fbshipit-source-id:

c1555c52064758ecbe668f92b837f2d7524f6118

Nikolay Korovaiko [Wed, 17 Apr 2019 04:08:38 +0000 (21:08 -0700)]

Profiling pipeline part1

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18772

Differential Revision:

D14952781

Pulled By: Krovatkin

fbshipit-source-id:

1e99fc9053c377291167f0b04b0f0829b452dbc4

Tongzhou Wang [Wed, 17 Apr 2019 03:25:11 +0000 (20:25 -0700)]

Fix lint

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/19310

Differential Revision:

D14952046

Pulled By: soumith

fbshipit-source-id:

1bbaaad6f932a832ea8e5e804d0d9cd9140a5071

Jerry Zhang [Wed, 17 Apr 2019 03:02:04 +0000 (20:02 -0700)]

Add slicing and int_repr() to QTensor (#19296)

Summary:

Stack:

:black_circle: **#19296 [pt1][quant] Add slicing and int_repr() to QTensor** [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14756833/)

:white_circle: #18960 [pt1][quant] Add empty_quantized [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14810261/)

:white_circle: #19312 Use the QTensor with QReLU [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14819460/)

:white_circle: #19319 [RFC] Quantized SumRelu [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14866442/)

Methods added to pytorch python frontend:

- int_repr() returns a CPUByte Tensor which copies the data of QTensor.

- Added as_strided for QTensorImpl which provides support for slicing a QTensor(see test_torch.py)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19296

Differential Revision:

D14756833

Pulled By: jerryzh168

fbshipit-source-id:

6f4c92393330e725c4351d6ff5f5fe9ac7c768bf

Jerry Zhang [Wed, 17 Apr 2019 02:55:48 +0000 (19:55 -0700)]

move const defs of DeviceType to DeviceType.h (#19185)

Summary:

Stack:

:black_circle: **#19185 [c10][core][ez] move const defs of DeviceType to DeviceType.h** [:yellow_heart:](https://our.intern.facebook.com/intern/diff/

D14909415/)

att

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19185

Differential Revision:

D14909415

Pulled By: jerryzh168

fbshipit-source-id:

876cf999424d8394f5ff20e6750133a4e43466d4

Xiaoqiang Zheng [Wed, 17 Apr 2019 02:22:13 +0000 (19:22 -0700)]

Add a fast path for batch-norm CPU inference. (#19152)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19152

Adding a fast path for batch-norm CPU inference when all tensors are contiguous.

* Leverage vectorization through smiple loops.

* Folding linear terms before computation.

* For resnext-101, this version gets 18.95 times faster.

* Add a microbenchmark:

* (buck build mode/opt -c python.package_style=inplace --show-output //caffe2/benchmarks/operator_benchmark:batchnorm_benchmark) && \

(OMP_NUM_THREADS=1 MKL_NUM_THREADS=1 buck-out/gen/caffe2/benchmarks/operator_benchmark/batchnorm_benchmark#binary.par)

* batch_norm: data shape: [1, 256, 3136], bandwidth: 22.26 GB/s

* batch_norm: data shape: [1, 65536, 1], bandwidth: 5.57 GB/s

* batch_norm: data shape: [128, 2048, 1], bandwidth: 18.21 GB/s

Reviewed By: soumith, BIT-silence

Differential Revision:

D14889728

fbshipit-source-id:

20c9e567e38ff7dbb9097873b85160eca2b0a795

Jerry Zhang [Wed, 17 Apr 2019 01:57:13 +0000 (18:57 -0700)]

Testing for folded conv_bn_relu (#19298)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19298

Proper testing for conv_bn_relu folding

Differential Revision:

D13998891

fbshipit-source-id:

ceb58ccec19885cbbf38964ee0d0db070e098b4a

Ilia Cherniavskii [Wed, 17 Apr 2019 01:21:36 +0000 (18:21 -0700)]

Initialize intra-op threads in JIT thread pool (#19058)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19058

ghimport-source-id:

53e87df8d93459259854a17d4de3348e463622dc

Differential Revision:

D14849624

Pulled By: ilia-cher

fbshipit-source-id:

5043a1d4330e38857c8e04c547526a3ba5b30fa9

Mikhail Zolotukhin [Tue, 16 Apr 2019 22:38:08 +0000 (15:38 -0700)]

Fix ASSERT_ANY_THROW. (#19321)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19321

ghimport-source-id:

9efffc36950152105bd0dc13f450161367101410

Differential Revision:

D14962184

Pulled By: ZolotukhinM

fbshipit-source-id:

22d602f50eb5e17a3e3f59cc7feb59a8d88df00d

David Riazati [Tue, 16 Apr 2019 22:03:47 +0000 (15:03 -0700)]

Add len() for strings (#19320)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19320

ghimport-source-id:

62131cb24e9bf65f0ef3e60001cb36509a1f4163

Reviewed By: bethebunny

Differential Revision:

D14961078

Pulled By: driazati

fbshipit-source-id:

08b9a4b10e4a47ea09ebf55a4743defa40c74698

Xiang Gao [Tue, 16 Apr 2019 20:55:37 +0000 (13:55 -0700)]

Step 3: Add support for return_counts to torch.unique for dim not None (#18650)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18650

ghimport-source-id:

75759c95e6c48e27c172b919097dbc40c6bfb5e6

Differential Revision:

D14892319

Pulled By: VitalyFedyunin

fbshipit-source-id:

ec5d1b80fc879d273ac5a534434fd648468dda1e

Karl Ostmo [Tue, 16 Apr 2019 19:59:27 +0000 (12:59 -0700)]

invoke NN smoketests from a python loop instead of a batch file (#18756)

Summary:

I tried first to convert the `.bat` script to a Bash `.sh` script, but I got this error:

```

[...]/build/win_tmp/ci_scripts/test_python_nn.sh: line 3: fg: no job control

```

Line 3 was where `%TMP_DIR%/ci_scripts/setup_pytorch_env.bat` was invoked.

I found a potential workaround on stack overflow of adding the `monitor` (`-m`) flag to the script, but hat didn't work either:

```

00:58:00 /bin/bash: cannot set terminal process group (3568): Inappropriate ioctl for device

00:58:00 /bin/bash: no job control in this shell

00:58:00 + %TMP_DIR%/ci_scripts/setup_pytorch_env.bat

00:58:00 /c/Jenkins/workspace/pytorch-builds/pytorch-win-ws2016-cuda9-cudnn7-py3-test1/build/win_tmp/ci_scripts/test_python_nn.sh: line 3: fg: no job control

```

So instead I decided to use Python to replace the `.bat` script. I believe this is an improvement in that it's both "table-driven" now and cross-platform.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18756

Differential Revision:

D14957570

Pulled By: kostmo

fbshipit-source-id:

87794e64b56ffacbde4fd44938045f9f68f7bc2a

Vitaly Fedyunin [Tue, 16 Apr 2019 17:50:48 +0000 (10:50 -0700)]

Adding pin_memory kwarg to zeros, ones, empty, ... tensor constructors (#18952)

Summary:

Make it possible to construct a pinned memory tensor without creating a storage first and without calling pin_memory() function. It is also faster, as copy operation is unnecessary.

Supported functions:

```python

torch.rand_like(t, pin_memory=True)

torch.randn_like(t, pin_memory=True)

torch.empty_like(t, pin_memory=True)

torch.full_like(t, 4, pin_memory=True)

torch.zeros_like(t, pin_memory=True)

torch.ones_like(t, pin_memory=True)

torch.tensor([10,11], pin_memory=True)

torch.randn(3, 5, pin_memory=True)

torch.rand(3, pin_memory=True)

torch.zeros(3, pin_memory=True)

torch.randperm(3, pin_memory=True)

torch.empty(6, pin_memory=True)

torch.ones(6, pin_memory=True)

torch.eye(6, pin_memory=True)

torch.arange(3, 5, pin_memory=True)

```

Part of the bigger: `Remove Storage` plan.

Now compatible with both torch scripts:

` _1 = torch.zeros([10], dtype=6, layout=0, device=torch.device("cpu"), pin_memory=False)`

and

` _1 = torch.zeros([10], dtype=6, layout=0, device=torch.device("cpu"))`

Same checked for all similar functions `rand_like`, `empty_like` and others

It is fixed version of #18455

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18952

Differential Revision:

D14801792

Pulled By: VitalyFedyunin

fbshipit-source-id:

8dbc61078ff7a637d0ecdb95d4e98f704d5450ba

J M Dieterich [Tue, 16 Apr 2019 17:50:28 +0000 (10:50 -0700)]

Enable unit tests for ROCm 2.3 (#19307)

Summary:

Unit tests that hang on clock64() calls are now fixed.

test_gamma_gpu_sample is now fixed.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19307

Differential Revision:

D14953420

Pulled By: bddppq

fbshipit-source-id:

efe807b54e047578415eb1b1e03f8ad44ea27c13

Jerry Zhang [Tue, 16 Apr 2019 17:41:03 +0000 (10:41 -0700)]

Fix type conversion in dequant and add a test (#19226)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19226

Type conversoin was wrong previously. Thanks zafartahirov for finding it!

Differential Revision:

D14926610

fbshipit-source-id:

6824f9813137a3d171694d743fbb437a663b1f88

Alexandr Morev [Tue, 16 Apr 2019 17:19:04 +0000 (10:19 -0700)]

math module support (#19115)

Summary:

This PR refer to issue [#19026](https://github.com/pytorch/pytorch/issues/19026)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19115

Differential Revision:

D14936053

Pulled By: driazati

fbshipit-source-id:

68d5f33ced085fcb8c10ff953bc7e99df055eccc

Shen Li [Tue, 16 Apr 2019 16:35:36 +0000 (09:35 -0700)]

Revert replicate.py to disallow replicating multi-device modules (#19278)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19278

Based on discussion in https://github.com/pytorch/pytorch/pull/19278 and https://github.com/pytorch/pytorch/pull/18687, changes to replicate.py will be reverted to disallow replicating multi-device modules.

Reviewed By: pietern

Differential Revision:

D14940018

fbshipit-source-id:

7504c0f4325c2639264c52dcbb499e61c9ad2c26

Zachary DeVito [Tue, 16 Apr 2019 16:01:03 +0000 (09:01 -0700)]

graph_for based on last_optimized_executed_graph (#19142)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19142

ghimport-source-id:

822013fb7e93032c74867fc77c6774c680aef6d1

Differential Revision:

D14888703

Pulled By: zdevito

fbshipit-source-id:

a2ad65a042d08b1adef965c2cceef37bb5d26ba9

Richard Zou [Tue, 16 Apr 2019 15:51:01 +0000 (08:51 -0700)]

Enable half for CUDA dense EmbeddingBag backward. (#19293)

Summary:

I audited the relevant kernel and saw it accumulates a good deal into float

so it should be fine.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19293

Differential Revision:

D14942274

Pulled By: zou3519

fbshipit-source-id:

36996ba0fbb29fbfb12b27bfe9c0ad1eb012ba3c

Mingzhe Li [Tue, 16 Apr 2019 15:47:25 +0000 (08:47 -0700)]

calculate execution time based on final iterations (#19299)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19299

I saw larger than 5% performance variation with small operators, this diff aims to reduce the variation by avoiding python overhead. Previously, in the benchmark, we run the main loop for 100 iterations then look at the time. If it's not significant, we will double the number of iterations to rerun and look at the result. We continue this process until it becomes significant. We calculate the time by total_time / number of iterations. The issue is that we are including multiple python trigger overhead.

Now, I change the logic to calculate execution time based on the last run instead of all runs, the equation is time_in_last_run/number of iterations.

Reviewed By: hl475

Differential Revision:

D14925287

fbshipit-source-id:

cb646298c08a651e27b99a5547350da367ffff47

Ilia Cherniavskii [Tue, 16 Apr 2019 07:13:50 +0000 (00:13 -0700)]

Move OMP/MKL thread initialization into ATen/Parallel (#19011)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19011

ghimport-source-id:

432e31eccfd0e59fa21a790f861e6b2ff4fdbac6

Differential Revision:

D14846034

Pulled By: ilia-cher

fbshipit-source-id:

d9d03c761d34bac80e09ce776e41c20fd3b04389

Mark Santaniello [Tue, 16 Apr 2019 06:40:21 +0000 (23:40 -0700)]

Avoid undefined symbol error when building AdIndexer LTO (#19009)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19009

Move the definition of `MulFunctor<>::Backward()` into a header file.

Reviewed By: BIT-silence

Differential Revision:

D14823230

fbshipit-source-id:

1efaec01863fcc02dcbe7e788d376e72f8564501

Nikolay Korovaiko [Tue, 16 Apr 2019 05:05:20 +0000 (22:05 -0700)]

Ellipsis in subscript

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17763

Differential Revision:

D14893533

Pulled By: Krovatkin

fbshipit-source-id:

c46b4e386d3aa30e6dc03e3052d2e5ff097fa74b

Ilia Cherniavskii [Tue, 16 Apr 2019 03:24:10 +0000 (20:24 -0700)]

Add input information in RecordFunction calls (#18717)

Summary:

Add input information into generated RecordFunction calls in

VariableType wrappers, JIT operators and a few more locations

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18717

Differential Revision:

D14729156

Pulled By: ilia-cher

fbshipit-source-id:

811ac4cbfd85af5c389ef030a7e82ef454afadec

Summer Deng [Mon, 15 Apr 2019 23:43:58 +0000 (16:43 -0700)]

Add NHWC order support in the cost inference function of 3d conv (#19170)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19170

As title

The quantized resnext3d model in production got the following failures without the fix:

```

Caffe2 operator Int8ConvRelu logging error: [enforce fail at conv_pool_op_base.h:463] order == StorageOrder::NCHW. 1 vs 2. Conv3D only supports NCHW on the production quantized model

```

Reviewed By: jspark1105

Differential Revision:

D14894276

fbshipit-source-id:

ef97772277f322ed45215e382c3b4a3702e47e59

Jongsoo Park [Mon, 15 Apr 2019 21:35:25 +0000 (14:35 -0700)]

unit test with multiple op invocations (#19118)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19118

A bug introduced by

D14700576 reported by Yufei (fixed by

D14778810 and

D14785256) was not detected by our units tests.

This diff improves unit tests to catch such errors (with this diff and without

D14778810, we can reproduce the bug Yufei reported).

This improvement also revealed a bug that affects the accuracy when we pre-pack weight and bias together and the pre-packed weight/bias are used by multiple nets. We were modifying the pre-packed bias in-place which was supposed to be constants.

Reviewed By: csummersea

Differential Revision:

D14806077

fbshipit-source-id:

aa9049c74b6ea98d21fbd097de306447a662a46d

Karl Ostmo [Mon, 15 Apr 2019 19:26:50 +0000 (12:26 -0700)]

Run shellcheck on Jenkins scripts (#18874)

Summary:

closes #18873

Doesn't fail the build on warnings yet.

Also fix most severe shellcheck warnings

Limited to `.jenkins/pytorch/` at this time

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18874

Differential Revision:

D14936165

Pulled By: kostmo

fbshipit-source-id:

1ee335695e54fe6c387ef0f6606ea7011dad0fd4

Pieter Noordhuis [Mon, 15 Apr 2019 19:24:43 +0000 (12:24 -0700)]

Make DistributedDataParallel use new reducer (#18953)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18953

This removes Python side bucketing code from DistributedDataParallel

and replaces it with calls to the new C++ based bucketing and reducing

code. To confirm this is working well, we ran a test with both the

previous implementation and the new implementation, and confirmed they

are numerically equivalent.

Performance is improved by a couple percent or more, including the

single machine multiple GPU runs.

Closes #13273.

Reviewed By: mrshenli

Differential Revision:

D14580911

fbshipit-source-id:

44e76f8b0b7e58dd6c91644e3df4660ca2ee4ae2

Gemfield [Mon, 15 Apr 2019 19:23:36 +0000 (12:23 -0700)]

Fix the return value of ParseFromString (#19262)

Summary:

Fix the return value of ParseFromString.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19262

Differential Revision:

D14937605

Pulled By: ezyang

fbshipit-source-id:

3f441086517186a075efb3d74f09160463b696b3

vishwakftw [Mon, 15 Apr 2019 18:53:44 +0000 (11:53 -0700)]

Modify Cholesky derivative (#19116)

Summary:

The derivative of the Cholesky decomposition was previously a triangular matrix.

Changelog:

- Modify the derivative of Cholesky from a triangular matrix to symmetric matrix

Pull Request resolved: https://github.com/pytorch/pytorch/pull/19116

Differential Revision:

D14935470

Pulled By: ezyang

fbshipit-source-id:

1c1c76b478c6b99e4e16624682842cb632e8e8b9