- Add NNStreamer tutorials.

- Change the sitemap of nnstreamer.github.io

Signed-off-by: gichan <gichan2.jang@samsung.com>

Signed-off-by: Gichan Jang <gichan2.jang@samsung.com>

mkdir -p tmp_doc

cp *.md tmp_doc

cp Documentation/*.md .

-cp Documentation/hotdoc/doc-index.md .

+cp -r Documentation/tutorials .

+cp Documentation/hotdoc/*.md .

sed -i 's+](\.\./+](+g; s+](Documentation/+](+g' *.md

sed -i '\+img src\=\"+s+\./media+Documentation/media+g' *.md

fi

rm *.md

+rm -r tutorials

cp tmp_doc/*.md .

rm -r tmp_doc

rm -rf Documentation/nnst-exam

--- /dev/null

+---

+title: Installing NNStreamer

+...

+

+## Installing NNStreamer

index.md

- doc-index.md

- coding-convention.md

- component-description.md

- doxygen-documentation.md

- edge-ai.md

- features-per-distro.md

- getting-started.md

+ getting-started.md

+ installing-nnstreamer.md

getting-started-android.md

getting-started-macos.md

getting-started-meson-build.md

getting-started-tizen.md

getting-started-ubuntu-debuild.md

getting-started-ubuntu-ppa.md

+ tutorials.md

+ tutorials/tutorial1_playing_video.md

+ tutorials/tutorial2_object_detection.md

+ tutorials/tutorial3_pubsub_mqtt.md

+ tutorials/tutorial4_query.md

+ tutorials/tutorial5_gstreamer_api.md

+ doc-index.md

+ coding-convention.md

+ component-description.md

+ doxygen-documentation.md

+ edge-ai.md

+ features-per-distro.md

profiling-android-pipeline.md

how-to-archive-large-data.md

how-to-run-examples.md

<a href="gst/nnstreamer/README.html">Elements</a>

</li>

<li>

- <a href="nnstreamer-example/index.html">Examples</a>

+ <a href="tutorials.html">Tutorials</a>

</li>

<li>

<a href="API-reference.html">API reference</a>

--- /dev/null

+---

+title: Tutorials

+...

+

+# Tutorials

+

+## Welcome to the NNStreamer tutorials.

+### Prerequisites

+Before starting the tutorial, you should install GStreamer and NNStreamer in advance.

+Refer to [here](installing-nnstreamer.md) for detailed installation steps.

+If you use Ubuntu, each tutorial lists the packages it needs.

+So even if you haven't installed anything yet, you can go straight to tutorial one.

+

+### Basic Tutorials

+These tutorials introduce how to use NNStreamer step by step.

+The basic tutorials show several ways to build an application, using object detection as the running example.

+1. Playing video using GStreamer without NNStreamer.

+2. Object detection on a single device.

+3. Object detection on connected devices - pub/sub

+4. Object detection on connected devices - query

+5. Create an app using GStreamer API

--- /dev/null

+---

+title: T1. Playing Video

+...

+

+# Tutorial 1. Playing video

+It's very simple. One line is enough to play the video.

+We will simply run the pipeline using the `gst-launch` tool.

+

+## Prerequisites

+```

+# Install GStreamer packages for Tutorial 1 and later use.

+$ sudo apt install gstreamer1.0-tools gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad

+```

+

+## Playing video!

+```

+$ gst-launch-1.0 v4l2src ! videoconvert ! videoscale ! video/x-raw, width=640, height=480, framerate=30/1 ! autovideosink

+# If you don't have a camera device, use `videotestsrc`.

+$ gst-launch-1.0 videotestsrc ! videoconvert ! videoscale ! video/x-raw, width=640, height=480, framerate=30/1 ! autovideosink

+```

+

+That's all!

+Try changing the width, height, and frame rate of the video.
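One way to experiment with these values is to keep them in shell variables and assemble the pipeline description from them. The sketch below is only an illustration: the variable names and the chosen values are assumptions, and it prints the command rather than launching it, so it runs even without GStreamer installed.

```shell
#!/bin/sh
# Hypothetical values; pick any size and frame rate your setup can handle.
WIDTH=320
HEIGHT=240
FPS=15

# Caps filter that fixes the negotiated video format.
CAPS="video/x-raw,width=${WIDTH},height=${HEIGHT},framerate=${FPS}/1"

# videotestsrc is used so the sketch does not depend on a camera.
PIPELINE="videotestsrc ! videoconvert ! videoscale ! ${CAPS} ! autovideosink"

# Print the command instead of running it, so it can be reviewed first.
echo "gst-launch-1.0 ${PIPELINE}"
```

Copy the printed command into a terminal to play the video with the new settings.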

+Now that we're familiar with GStreamer, let's move on to the [NNStreamer](tutorials/tutorial2_object_detection.md) tutorials.

+

+## Additional description of the elements used.

+Use the `gst-inspect-1.0` tool for more information about an element.

+```

+$ gst-inspect-1.0 videoconvert

+Factory Details:

+ Rank none (0)

+ Long-name Colorspace converter

+ Klass Filter/Converter/Video

+ Description Converts video from one colorspace to another

+...

+```

+ - v4l2src: Reads frames from a Linux video device.

+ - videotestsrc: Creates a test video stream.

+ - videoconvert: Converts video format.

+ - videoscale: Resizes video.

+ - autovideosink: Automatically detects and uses an appropriate video sink.

+

--- /dev/null

+---

+title: T2. Object Detection

+...

+

+# Tutorial 2. Object detection

+This example passes a camera video stream to a neural network using `tensor_filter`.

+Then the given neural network predicts multiple objects with bounding boxes.

+

+

+## Prerequisites

+```

+# install nnstreamer and nnstreamer example

+$ sudo add-apt-repository ppa:nnstreamer/ppa

+$ sudo apt-get update

+$ sudo apt install wget nnstreamer nnstreamer-example

+```

+

+## Run pipeline.

+```

+# Move to default install directory.

+$ cd /usr/lib/nnstreamer/bin

+$ gst-launch-1.0 \

+ v4l2src ! videoconvert ! videoscale ! video/x-raw,width=640,height=480,format=RGB,framerate=30/1 ! tee name=t \

+ t. ! queue leaky=2 max-size-buffers=2 ! videoscale ! video/x-raw,width=300,height=300,format=RGB ! tensor_converter ! \

+ tensor_transform mode=arithmetic option=typecast:float32,add:-127.5,div:127.5 ! \

+ tensor_filter framework=tensorflow-lite model=tflite_model/ssd_mobilenet_v2_coco.tflite ! \

+ tensor_decoder mode=bounding_boxes option1=mobilenet-ssd option2=tflite_model/coco_labels_list.txt option3=tflite_model/box_priors.txt option4=640:480 option5=300:300 ! \

+ compositor name=mix sink_0::zorder=2 sink_1::zorder=1 ! videoconvert ! autovideosink \

+ t. ! queue leaky=2 max-size-buffers=10 ! mix.

+```

+

+If you have any problem running the pipeline, please report an issue [here](https://github.com/nnstreamer/nnstreamer/issues).

+Does the pipeline look a little complicated? That's only because you're not familiar with it yet.

+As you work through these tutorials, it will become clear.

+

+

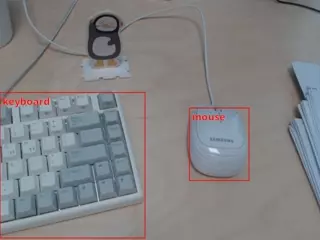

+This is a graphical representation of the pipeline. `tee` splits one input into multiple outputs.

+One branch from the `tee` is converted into a tensor stream (`tensor_converter`), and `tensor_filter` predicts the objects.

+The result of the tensor stream (the bounding boxes) is combined with the video stream, and `autovideosink` displays it.
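The `tensor_transform` step in the pipeline above uses `option=typecast:float32,add:-127.5,div:127.5`. Assuming the operations are applied in the order written, each `uint8` pixel value `p` becomes `(p - 127.5) / 127.5`, which maps the range 0..255 to -1..1. The sketch below reproduces that arithmetic for single values with `awk`; the `normalize` helper is hypothetical and is not part of NNStreamer.

```shell
#!/bin/sh
# normalize PIXEL: mimic typecast:float32, add:-127.5, div:127.5 for one value.
normalize() {
  awk -v p="$1" 'BEGIN { printf "%.4f\n", (p + -127.5) / 127.5 }'
}

# The smallest and largest uint8 values land on -1 and 1.
for pixel in 0 255; do
  printf '%s -> %s\n' "$pixel" "$(normalize "$pixel")"
done
```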

+

+Tutorial 2 shows the most basic use of NNStreamer.

+#### See [here](https://nnstreamer.github.io/nnstreamer-example) for more examples.

+Tutorials 3 and 4 show how to connect multiple devices. If you only use one device, you may skip them. If you want to know how to connect multiple devices, let's go to the [next](tutorials/tutorial3_pubsub_mqtt.md) tutorial.

--- /dev/null

+---

+title: T3. Edge pipeline - MQTT

+...

+

+# Tutorial 3. Edge pipeline - MQTT

+The publisher sends a video stream to the broker (this tutorial uses mosquitto) with the topic designated by the user.

+The subscriber receives the video stream from the broker and runs object detection.

+

+## Prerequisites

+```

+# install mqtt broker

+$ sudo apt install mosquitto mosquitto-clients

+# install mqtt elements

+$ sudo apt install nnstreamer-misc

+```

+

+## Run pipeline. (video streaming)

+Before starting object detection, let's construct a simple pipeline using MQTT.

+### Publisher pipeline.

+```

+$ gst-launch-1.0 videotestsrc is-live=true ! video/x-raw,format=RGB,width=640,height=480,framerate=5/1 ! mqttsink pub-topic=test/videotestsrc

+```

+### Subscriber pipeline.

+```

+$ gst-launch-1.0 mqttsrc sub-topic=test/videotestsrc ! video/x-raw,format=RGB,width=640,height=480,framerate=5/1 ! videoconvert ! ximagesink

+```

+

+If you succeeded in streaming the video using MQTT, let's run the object detection.

+## Run pipeline. (Object detection)

+### Publisher pipeline.

+```

+$ gst-launch-1.0 v4l2src ! videoconvert ! videoscale ! video/x-raw,format=RGB,width=640,height=480,framerate=5/1 ! mqttsink pub-topic=example/objectDetection

+```

+

+### Subscriber pipeline.

+```

+$ cd /usr/lib/nnstreamer/bin

+$ gst-launch-1.0 \

+ mqttsrc sub-topic=example/objectDetection ! video/x-raw,format=RGB,width=640,height=480,framerate=5/1 ! tee name=t \

+ t. ! queue leaky=2 max-size-buffers=2 ! videoscale ! video/x-raw,width=300,height=300,format=RGB ! tensor_converter ! \

+ tensor_transform mode=arithmetic option=typecast:float32,add:-127.5,div:127.5 ! \

+ tensor_filter framework=tensorflow-lite model=tflite_model/ssd_mobilenet_v2_coco.tflite ! \

+ tensor_decoder mode=bounding_boxes option1=mobilenet-ssd option2=tflite_model/coco_labels_list.txt option3=tflite_model/box_priors.txt option4=640:480 option5=300:300 ! \

+ compositor name=mix sink_0::zorder=2 sink_1::zorder=1 ! videoconvert ! ximagesink \

+ t. ! queue leaky=2 max-size-buffers=10 ! mix.

+```

+

+

+This is a graphical representation of the pipeline.

+`mqttsink` publishes incoming data streams with a topic. `mqttsrc` subscribes to the topic and pushes incoming data into the GStreamer pipeline.

+Using the MQTT elements, pipelines can operate as data stream publishers or subscribers. This means a lightweight device can offload a heavy task to another pipeline with more computing power.

+If you want to receive the offloading results back, use the query. Let's go to the [query](tutorial4_query.md) tutorial.
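In the pipelines above, the publisher and the subscriber repeat the same topic and the same caps string. A minimal sketch, assuming both ends have to agree on these two values, keeps them in one place and derives both commands from them. The variable names are hypothetical, and the script only prints the commands, so it does not need a broker or a camera.

```shell
#!/bin/sh
# Values shared by both ends; the topic is the one used in this tutorial.
TOPIC="example/objectDetection"
CAPS="video/x-raw,format=RGB,width=640,height=480,framerate=5/1"

# Publisher: camera frames go to the broker under TOPIC.
PUBLISHER="v4l2src ! videoconvert ! videoscale ! ${CAPS} ! mqttsink pub-topic=${TOPIC}"

# Subscriber: receives the same topic and restates the same caps.
SUBSCRIBER="mqttsrc sub-topic=${TOPIC} ! ${CAPS} ! videoconvert ! ximagesink"

echo "publisher:  gst-launch-1.0 ${PUBLISHER}"
echo "subscriber: gst-launch-1.0 ${SUBSCRIBER}"
```

Changing the resolution or topic then needs a single edit, which avoids a mismatch between the two sides.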

--- /dev/null

+---

+title: T4. Edge pipeline - Query

+...

+

+# Tutorial 4. Edge pipeline - Query

+Tensor query allows devices with weak AI computational power to use resources from higher-performance devices.

+Suppose you have a device at home with sufficient computing power (the server) and a network of lightweight devices connected to it (the clients).

+The clients ask the server to handle heavy tasks and receive the results from it. Because the AI runs on the local network, no cloud server is needed.

+In this tutorial, the client sends video frames to the server, and the server performs object detection and sends the result back to the client.

+

+## Run pipeline. (echo server)

+Before starting object detection, let's construct a simple query pipeline.

+### Server pipeline.

+```

+$ gst-launch-1.0 tensor_query_serversrc ! other/tensors,num_tensors=1,dimensions=3:640:480:1,types=uint8,framerate=30/1 ! tensor_query_serversink

+```

+### Client pipeline.

+```

+$ gst-launch-1.0 v4l2src ! videoconvert ! videoscale ! video/x-raw,width=640,height=480,format=RGB,framerate=30/1 ! \

+ tensor_converter ! tensor_query_client ! tensor_decoder mode=direct_video ! videoconvert ! ximagesink

+```
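The server caps above, `dimensions=3:640:480:1,types=uint8`, describe one 640x480 RGB frame per buffer, assuming the dimension string is read as channel:width:height:batch. As a rough sanity check, the payload size of each buffer is the product of the dimensions times the element size. The `frame_bytes` helper below is hypothetical and only performs that multiplication.

```shell
#!/bin/sh
# frame_bytes DIMENSIONS BYTES_PER_ELEMENT
# Multiplies every colon-separated dimension, then the element size.
frame_bytes() {
  total=$2
  old_ifs=$IFS
  IFS=:
  for dim in $1; do
    total=$((total * dim))
  done
  IFS=$old_ifs
  echo "$total"
}

# uint8 is 1 byte per element; prints 921600 for the echo-server caps.
frame_bytes 3:640:480:1 1
```

At 30 frames per second this is roughly 27.6 MB/s of raw data, which is worth keeping in mind when choosing the resolution and frame rate for a networked pipeline.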

+

+If you succeeded in streaming the video using query, let's run the object detection.

+## Run pipeline. (Object detection)

+### Server pipeline.

+```

+$ gst-launch-1.0 \

+ tensor_query_serversrc ! tensor_filter framework=tensorflow-lite model=tflite_model/ssd_mobilenet_v2_coco.tflite ! \

+ tensor_decoder mode=bounding_boxes option1=mobilenet-ssd option2=tflite_model/coco_labels_list.txt option3=tflite_model/box_priors.txt option4=640:480 option5=300:300 ! \

+ tensor_converter ! other/tensors,num_tensors=1,dimensions=4:640:480:1,types=uint8 ! tensor_query_serversink

+```

+

+### Client pipeline.

+```

+$ cd /usr/lib/nnstreamer/bin

+$ gst-launch-1.0 \

+ compositor name=mix sink_0::zorder=2 sink_1::zorder=1 ! videoconvert ! ximagesink \

+ v4l2src ! videoconvert ! videoscale ! video/x-raw,width=640,height=480,format=RGB,framerate=10/1 ! tee name=t \

+ t. ! queue ! videoscale ! video/x-raw,width=300,height=300,format=RGB ! tensor_converter ! tensor_transform mode=arithmetic option=typecast:float32,add:-127.5,div:127.5 ! queue leaky=2 max-size-buffers=2 ! \

+ tensor_query_client ! tensor_decoder mode=direct_video ! videoconvert ! video/x-raw,width=640,height=480,format=RGBA ! mix.sink_0 \

+ t. ! queue ! mix.sink_1

+```

+

+

+This is a graphical representation of the pipeline.

+Compared to tutorial 2, the only change is that the tensor filter now runs on the server.

+

+Tutorials 1 to 4 ran pipelines using the `gst-launch-1.0` tool. However, `gst-launch-1.0` is a debugging tool intended for simply testing pipelines.

+To build an application, it is better to use the GStreamer API. Tutorial 5 writes an application using the GStreamer API.

--- /dev/null

+---

+title: T5. GStreamer API

+...

+

+# Tutorial 5. Build application using GStreamer API

+The `gst-launch` tool is convenient, but it is recommended only for testing pipeline descriptions.

+In this tutorial, let's learn how to create an application. (Full example source code can be found [here](https://github.com/nnstreamer/nnstreamer-example/blob/main/native/example_object_detection_tensorflow_lite/nnstreamer_example_object_detection_tflite.cc)).

+Creating a pipeline application is simple. You can use the GStreamer API `gst_parse_launch ()` instead of the `gst-launch` tool.

+Refer to [here](Documentation/how-to-run-examples.md) for building nnstreamer-example.

+

+This is a partial walkthrough of the code. The lines below are essential for running a pipeline application. The omitted parts are error handling and additional features.

+

+Part of **nnstreamer_example_object_detection_tflite.cc**

+```

+int

+main (int argc, char ** argv)

+{

+ ...

+

+ /* Init gstreamer */

+ gst_init (&argc, &argv);

+

+ /* Same pipeline description as tutorial 2 */

+ str_pipeline =

+ g_strdup_printf

+ ("v4l2src name=src ! videoconvert ! videoscale ! video/x-raw,format=RGB,width=640,height=480,framerate=30/1 ! tee name=t "

+ "t. ! queue leaky=2 max-size-buffers=2 ! videoscale ! video/x-raw,format=RGB,width=300,height=300 ! tensor_converter ! "

+ "tensor_transform mode=arithmetic option=typecast:float32,add:-127.5,div:127.5 ! "

+ "tensor_filter framework=tensorflow-lite model=%s ! "

+ "tensor_decoder mode=bounding_boxes option1=mobilenet-ssd option2=%s option3=%s option4=640:480 option5=300:300 ! "

+ "compositor name=mix sink_0::zorder=2 sink_1::zorder=1 ! videoconvert ! ximagesink "

+ "t. ! queue leaky=2 max-size-buffers=10 ! mix. ",

+ g_app.tflite_info.model_path, g_app.tflite_info.label_path, g_app.tflite_info.box_prior_path);

+

+ ...

+

+ /* Create a new pipeline */

+ g_app.pipeline = gst_parse_launch (str_pipeline, NULL);

+

+ ...

+

+ /* Start pipeline. Setting pipeline to the PLAYING state. */

+ gst_element_set_state (g_app.pipeline, GST_STATE_PLAYING);

+

+ ...

+ /* Pipeline is running */

+ ...

+

+ /* Stop and release the resources */

+ gst_element_set_state (g_app.pipeline, GST_STATE_NULL);

+ gst_object_unref (g_app.pipeline);

+

+ ...

+}

+```

+

+Then let's run the application.

+```

+# If you built the example yourself, go to the path you specified; if you installed it using apt, go to /usr/lib/nnstreamer/bin.

+$ cd /usr/lib/nnstreamer/bin

+$ ./nnstreamer_example_object_detection_tflite

+```

NNStreamer is a set of Gstreamer plugins that allow Gstreamer developers to adopt neural network models

easily and efficiently and neural network developers to manage neural network pipelines and their filters easily and efficiently.

</p>

- <a class="btn btn-default btn-xl page-scroll" href="doc-index.html" data-hotdoc-relative-link=true>Get Started</a>

+ <a class="btn btn-default btn-xl page-scroll" href="getting-started.html" data-hotdoc-relative-link=true>Get Started</a>

</div>

</div>